Pavel Izmailov

@Pavel_Izmailov

Researcher @AnthropicAI 🤖 Incoming Assistant Professor @nyuniversity 🏙️ Previously @OpenAI #StopWar 🇺🇦

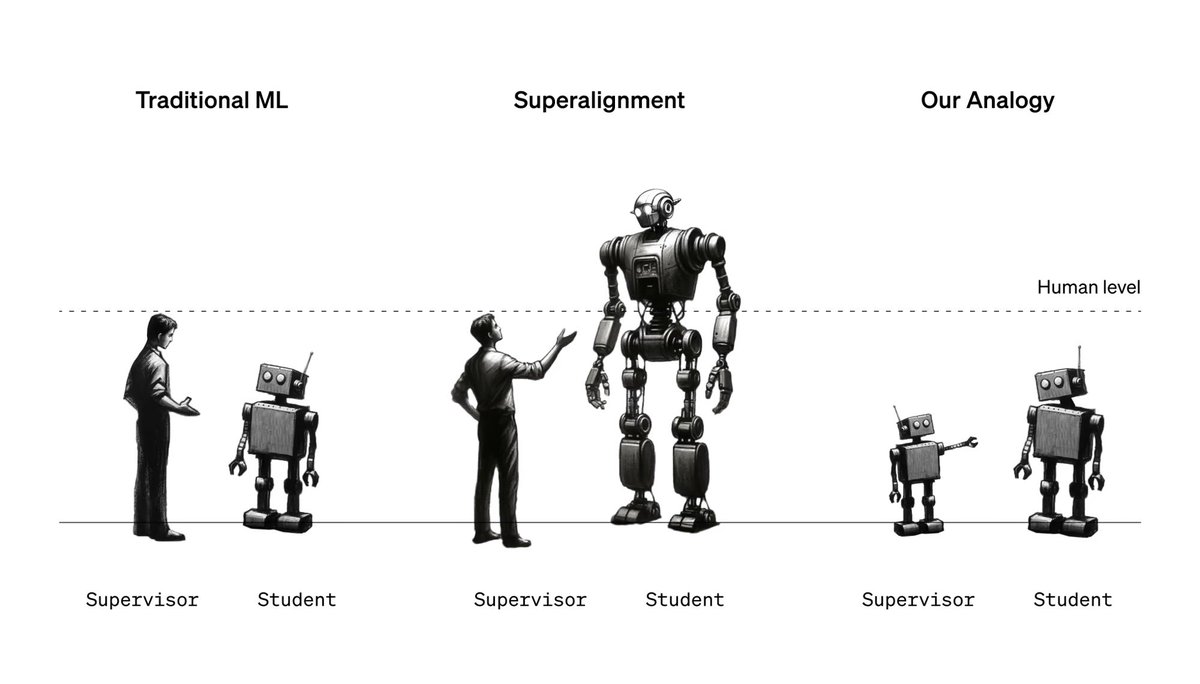

Extremely excited to have this work out, the first paper from the Superalignment team! We study how large models can generalize from supervision of much weaker models. twitter.com/OpenAI/status/…

In the future, humans will need to supervise AI systems much smarter than them. We study an analogy: small models supervising large models. Read the Superalignment team's first paper showing progress on a new approach, weak-to-strong generalization: openai.com/research/weak-…

Excited to give an invited talk tomorrow Sep 30 at the #ECCV workshop on Uncertainty Quantification in Computer Vision at 12:25pm CET in room Brown3! I will present our research on spurious correlations and geographic biases in large-scale vision and multi-modal models!

Don't miss our workshop on Uncertainty Quantification in Computer Vision @eccvconf on Mon 30 Sep AM in room Brown3. We have an exciting speaker panel @glouppe @polkirichenko @JishnuMukhoti, fun papers & posters, the reveal of BRAVO challenge results. Join us! #ECCV2024 #UNCV2024

Don't miss our tutorial A Bayesian Odyssey in Uncertainty: from Theoretical Foundations to Real-World Applications @eccvconf on Mon AM (08:45-13:00) #ECCV2024 In the line-up we have: @Pavel_Izmailov @GianniFranchi10 @a1mmer @_olivierlaurent @alafage_ uqtutorial.github.io

If you're coming to @eccvconf consider our freshly accepted tutorial on: A Bayesian Odyssey in Uncertainty: from Theoretical Foundations 📝 to Real-World Applications 🚀 w/ the amazing @GianniFranchi10 @_olivierlaurent @a1mmer @Pavel_Izmailov More info coming soon #eccv2024

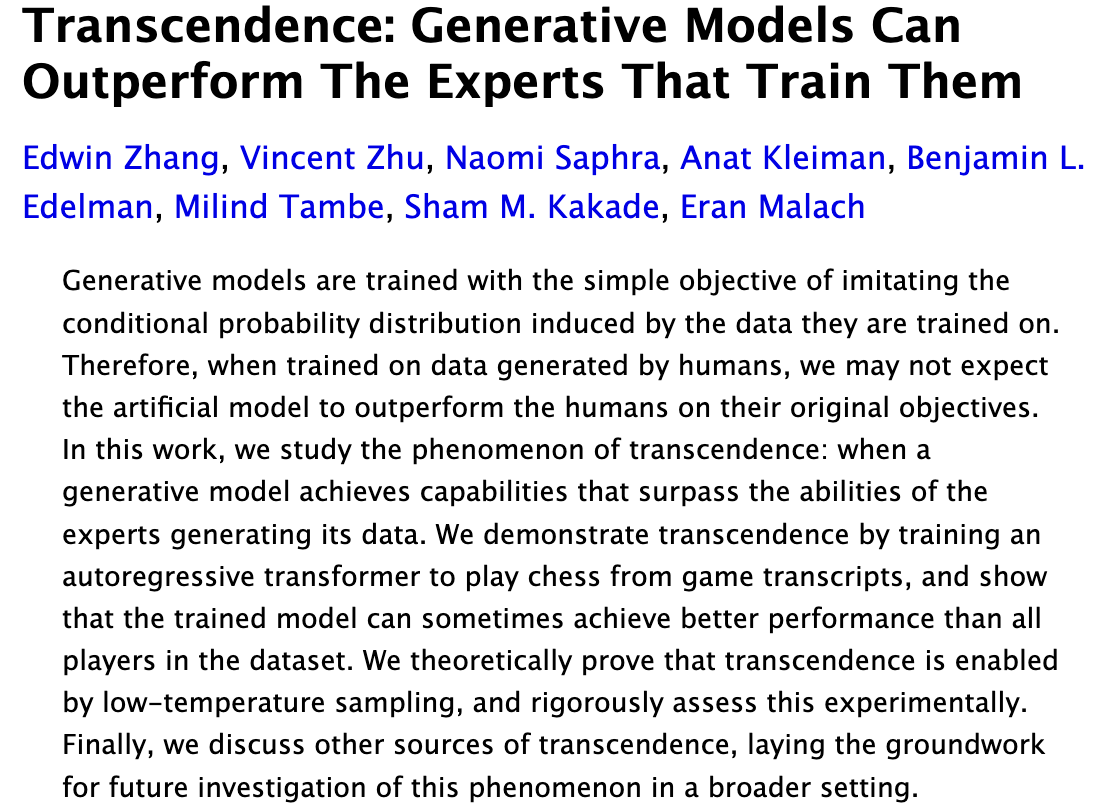

Modern generative models are trained to imitate human experts, but can they actually beat those experts? Our new paper uses imitative chess agents to explore when a model can "transcend" its training distribution and outperform every human it's trained on. arxiv.org/abs/2406.11741

Whether LLMs can reliably be used for decision making and benefit society depends on whether they can reliably represent uncertainty over the correctness of their outputs. On that question, there is anything but consensus. In new work we find LLMs must be taught to know what they don't know. 1/6

Predictions without reliable confidence are not actionable and potentially dangerous. In new work, we deeply investigate uncertainty calibration of large language models. We find LLMs must be taught to know what they don’t know: arxiv.org/abs/2406.08391 w/ @psiyumm et al. 1/8

Excited to share what I've been working on as part of the former Superalignment team! We introduce a SOTA training stack for SAEs. To demonstrate that our methods scale, we train a 16M latent SAE on GPT-4. Because MSE/L0 is not the final goal, we also introduce new SAE metrics.

We're sharing progress toward understanding the neural activity of language models. We improved methods for training sparse autoencoders at scale, disentangling GPT-4’s internal representations into 16 million features—which often appear to correspond to understandable concepts.…

I'm excited to join @AnthropicAI to continue the superalignment mission! My new team will work on scalable oversight, weak-to-strong generalization, and automated alignment research. If you're interested in joining, my dms are open.

I’m excited to announce that I’ll start as an assistant professor at Columbia University this summer! Interview season was fun, I met so many amazing people, but I’m happy to finally close the loop.

Please submit your work on robustness / privacy / trustworthiness / alignment / ... with multimodal foundation models to our ICML workshop!

🚀 Excited to announce the Trustworthy Multimodal Foundation Models and AI Agents (TiFA) workshop @ICML2024, Vienna! Submission deadline: May 30 EOD AoE icml-tifa.github.io

New Anthropic research paper: Scaling Monosemanticity. The first ever detailed look inside a leading large language model. Read the blog post here: anthropic.com/research/mappi…

An image is worth more than one caption! In our #ICML2024 paper “Modeling Caption Diversity in Vision-Language Pretraining” we explicitly bake in that observation in our VLM called Llip and condition the visual representations on the latent context. arxiv.org/abs/2405.00740 🧵1/6

📢Workshop on Reliable and Responsible Foundation Models will happen today (8:50am - 5:00pm). Join us at #ICLR2024 room Halle A 3 for a wonderful lineup of speakers, along with 63 amazing posters and 4 contributed talks! Schedule: iclr-r2fm.github.io/#program.

Excited to announce the Workshop on Reliable and Responsible Foundation Models at @iclr_conf 2024 (hybrid workshop). We welcome submissions! Please consider submitting your work here: iclr-r2fm.github.io (deadline: Feb 3, 2024, AOE) Hope to see you in Vienna or…

As part of our commitment to open science, I'm excited to share that an alpha version of our protein design code is available! Check out the tutorials to learn how to design proteins yourself with guided discrete diffusion, just like the pros github.com/prescient-desi…

Excited to share our #ICLR2024 work led by @megan_richards_ on geographical fairness of vision models! 🌍 We show that even SOTA vision models have large disparities in accuracy between different geographic regions. openreview.net/pdf?id=rhaQbS3…

Does Progress on Object Recognition Benchmarks Improve Generalization on Crowdsourced, Global Data? In our #ICLR2024 paper, we find vast gaps (~40%!) between the field’s progress on ImageNet-based benchmarks and crowdsourced, globally representative data. w/ @polkirichenko,…

Another fascinating discussion this morning on the future of generative AI in physics, including the insight that "we'll be like cats...is that so bad?" (But in all seriousness, a lot of compelling back and forth on what it means to do science).

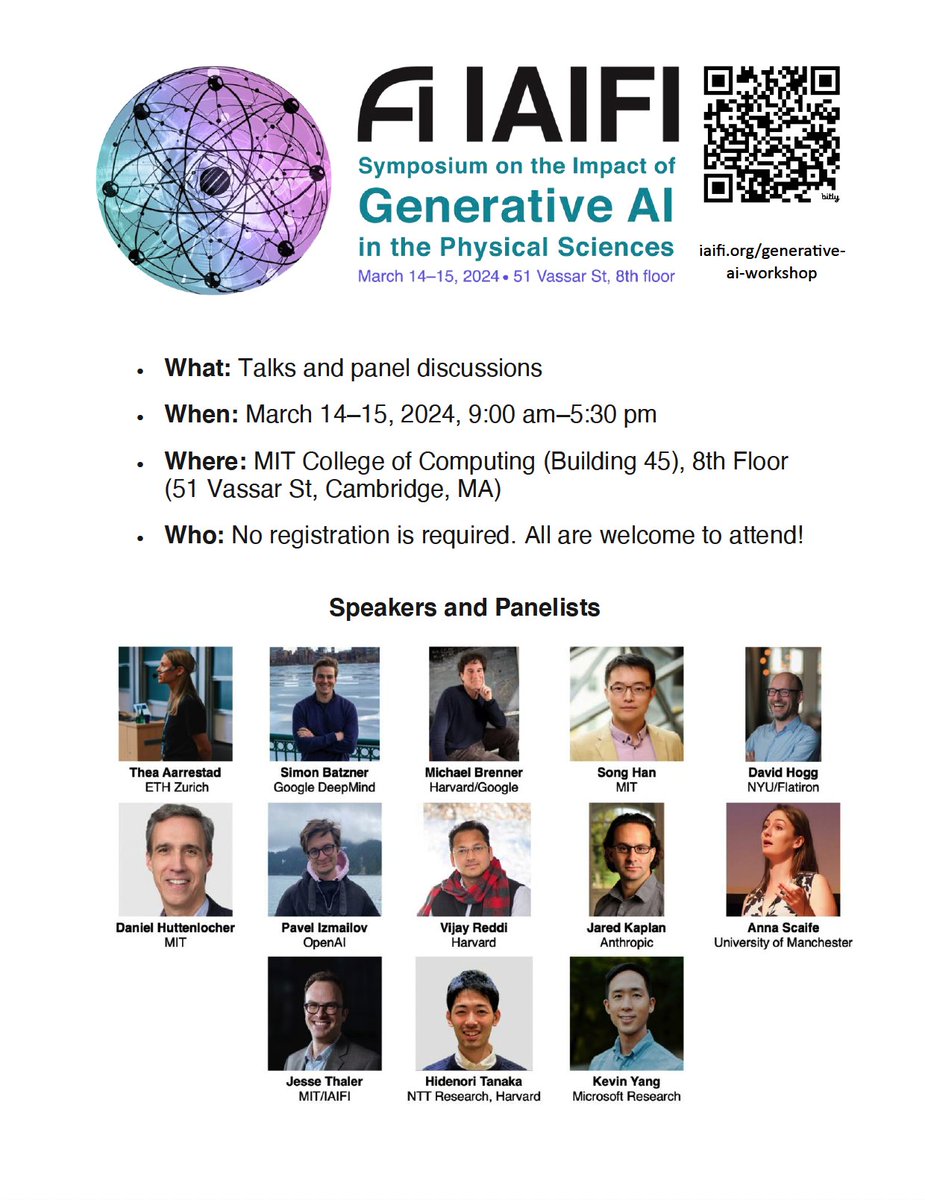

We are excited to be organizing a Symposium on the Impact of Generative AI in the Physical Sciences next Thursday, March 14 and Friday, March 15! Join us on the 8th Floor of @MIT_SCC for a great lineup of speakers and panelists. Zoom link available soon. iaifi.org/generative-ai-…

I'm excited to be speaking tomorrow at Boston University, as part of their distinguished speaker series. My talk will be on prescriptive foundations for building autonomous intelligent systems. Talk details: bu.edu/hic/air-distin…

I was very impressed by @martinmarek1999 in this project, look out for more exciting research from him!

We introduce a prior distribution to control the aleatoric (data) uncertainty of a Bayesian neural network, nearly matching the accuracy of cold posteriors 🥶 arxiv.org/abs/2403.01272 w/ Brooks Paige and @Pavel_Izmailov 🧵1/8

Have you ever done a dense grid search over neural network hyperparameters? Like a *really dense* grid search? It looks like this (!!). Bluish colors correspond to hyperparameters for which training converges, reddish colors to hyperparameters for which training diverges.

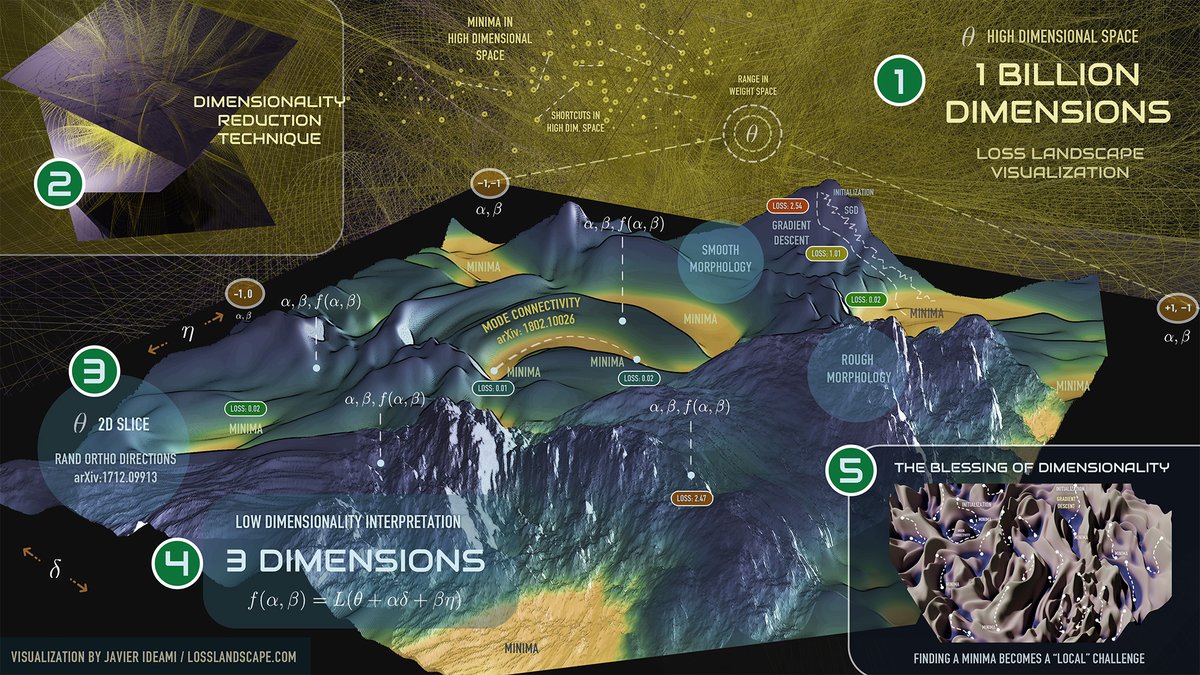

I'm glad to see losslandscape.com is still going strong. @ideami has beautiful visualizations. The geometric properties of neural network training objectives, such as mode connectivity, make deep learning truly distinct.
