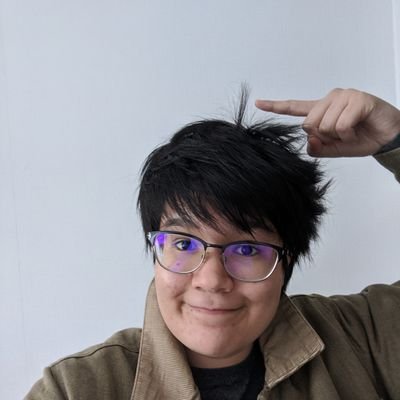

Amanda Askell

@AmandaAskell

Philosopher & ethicist now teaching models to be good @AnthropicAI. Personal account. All opinions come from my training data.

Showing that an AI system causes harm isn't the same as showing that we shouldn't build it or that we shouldn't deploy it. Some thoughts on AI ethics. askell.io/posts/2020/12/…

I know I say the word "like" too much, especially if I'm tired after a full day of meetings. So next time I'm on a podcast, I'll commit to donating something like $50 per "like" to charity and see if I can avoid bankrupting myself.

I had a lot of fun talking with @lexfridman about a wide range of topics on his podcast, alongside Dario and Chris. Hope it's interesting to others!

Here's my conversation with @DarioAmodei, CEO of Anthropic, the company that created Claude, one of the best AI systems in the world. We talk about scaling, AI safety, regulation, and a lot of super technical details about the present and future of AI and humanity. It's a 5+…

Most of us hate it when people come to conclusions about us based on features we have or categories we belong to - we have a pretty deep desire for others to treat us as individuals. I suggest we adopt a norm of doing this henceforth.

I love the radioactive rocks subreddit. People get a new rock and post a video of their Geiger counter next to it and everyone ooohs at how radioactive it is. This is why we made the internet.

The valley of social despair: when you have enough social awareness to know that you've upset someone but not enough social awareness to avoid upsetting them in the first place.

I just want all the American citizens out there to know that I'd make a really great wife.

I thought my life would be much less weird than it's shaking out to be. But I guess some are born weird, some achieve weirdness, and some have weirdness thrust upon them.

Make good choices, America. There's a lot riding on it.

Is there a real correlation between allergies / eczema / asthma and nerdiness? If so, do we have a sense of the way the causal and correlative arrows flow? My nerd allergies are flaring up so I'm curious.

I've tried playing with AI image generators, but I always stop using them as soon as I get an unexpectedly disturbing image in response to one of my benign prompts. AI that generates visual content should always have a "super safe, mostly kittens" mode for people like me.

If you ever see something I post and think to yourself "I guess Amanda's been mindkilled by politics", please tell me you had this thought and why. When I see smart people start to post terrible takes, I don't say this because it seems rude. But I'd also like to avoid that fate.

There are more of me?

Whenever there are unstructured moments of prayer in life, I just pray for there to be no hell over and over and over again. I figure there's no harm in asking.

A lot of 18th and 19th century novels make fun of women for always fancying themselves ill or always feigning illness. I suspect a lot of women at the time actually did have untreated chronic illnesses, and the novelists were basically just being dicks about it.

Let's grant the assumption that LLMs are only capable of pattern recognition. We see this is sufficient to solve novel problems, to generate new and useful ideas, etc. So how is pattern recognition distinct from intelligence? What does intelligence add? Sincere question.

Hear me out here, what if we refuse to call large language models "artificial intelligence" and consistently refer to them as "computerized pattern recognition"?

Ideal theory is like elevator music. Useful to have in the background but terrible if you listen to it too much.

It's wild to give the computer use model complex tasks like "Identify ways I could improve my website" or "Here's an essay by a language model, fact check all the claims in it", then go make tea and come back to see it's completed the whole thing successfully.

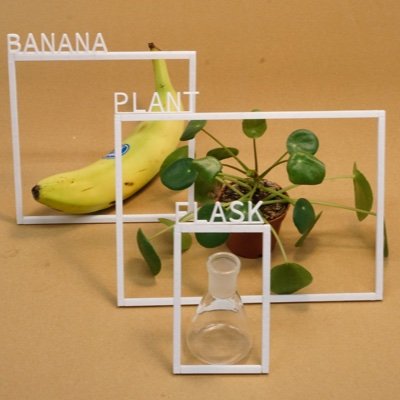

We've built an API that allows Claude to perceive and interact with computer interfaces. This API enables Claude to translate prompts into computer commands. Developers can use it to automate repetitive tasks, conduct testing and QA, and perform open-ended research.

Introducing an upgraded Claude 3.5 Sonnet, and a new model, Claude 3.5 Haiku. We’re also introducing a new capability in beta: computer use. Developers can now direct Claude to use computers the way people do—by looking at a screen, moving a cursor, clicking, and typing text.
