François Fleuret

@francoisfleuret · Research Scientist @meta (FAIR), Prof. @Unige_en, co-founder @nc_shape. I like reality.


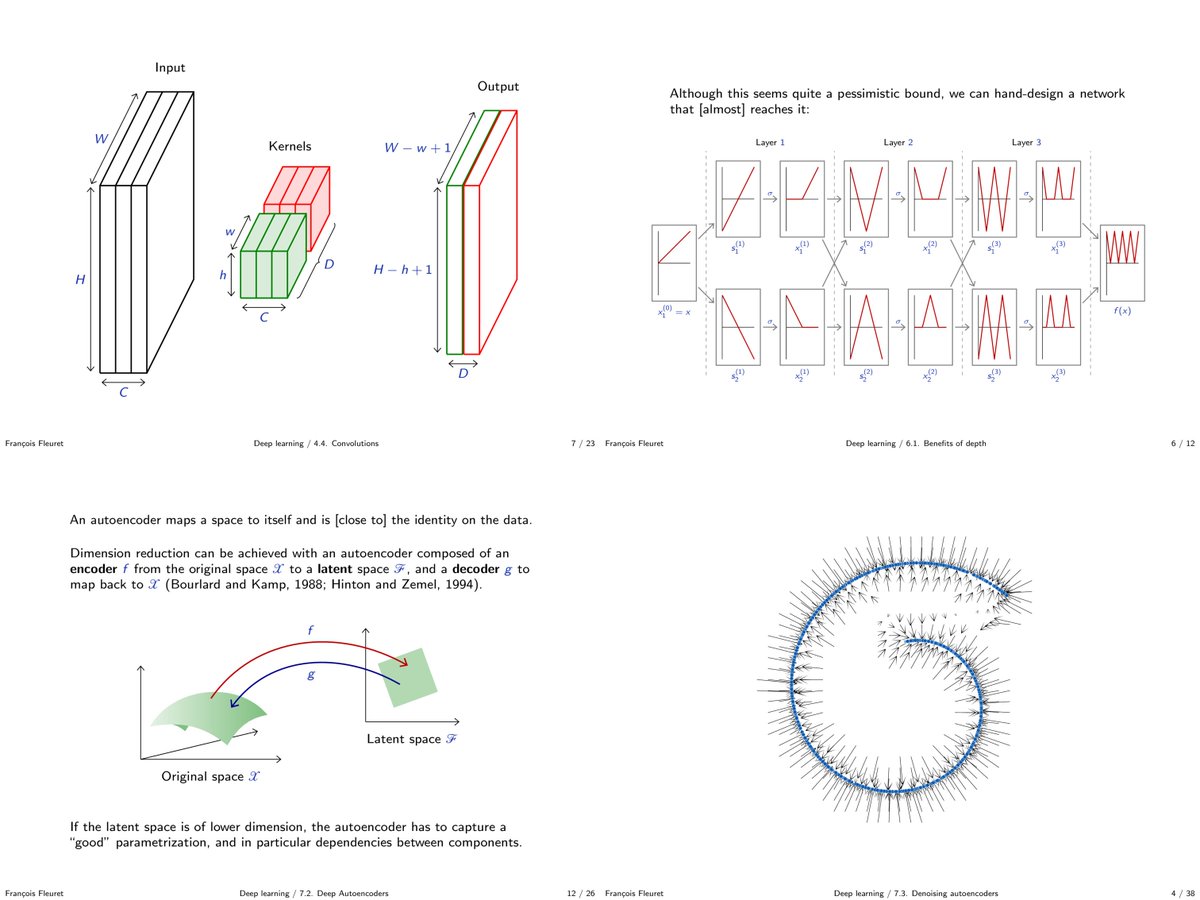

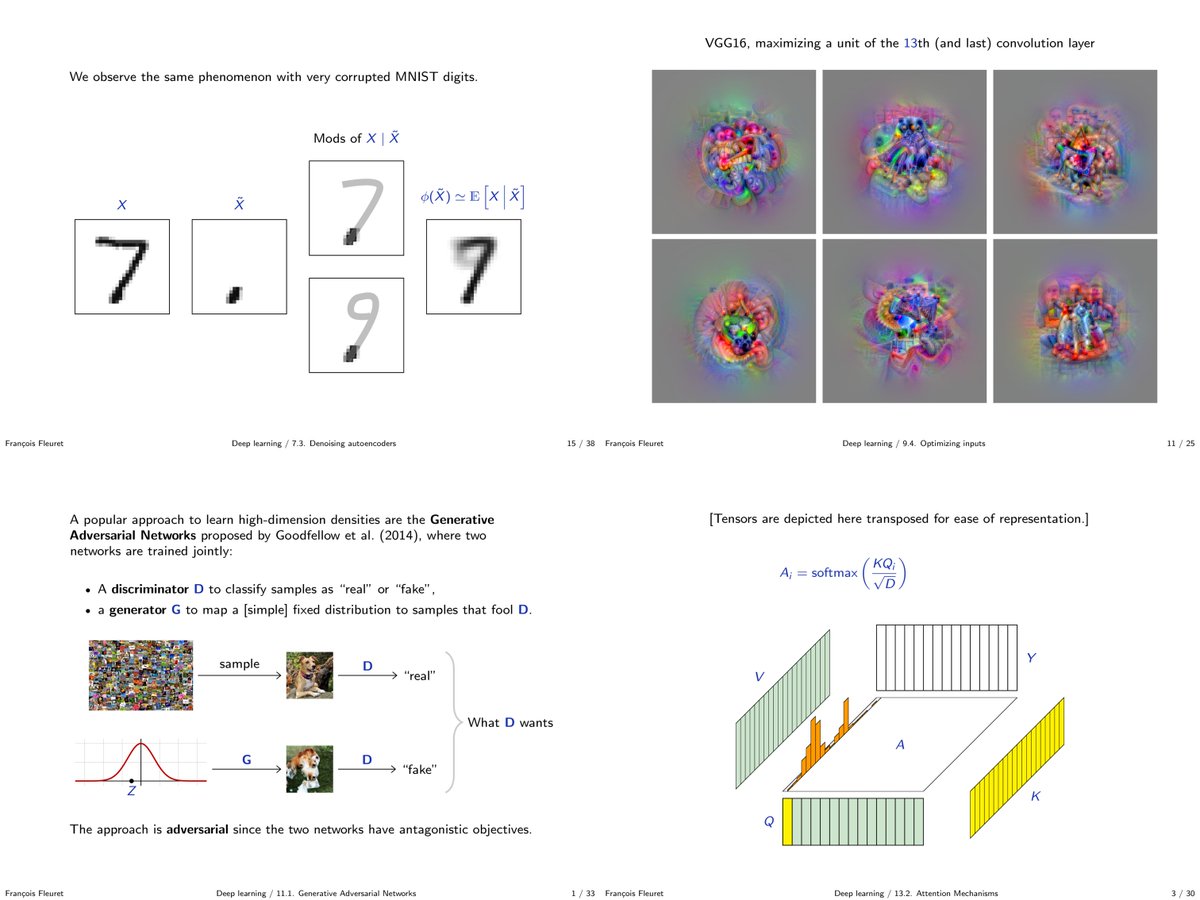

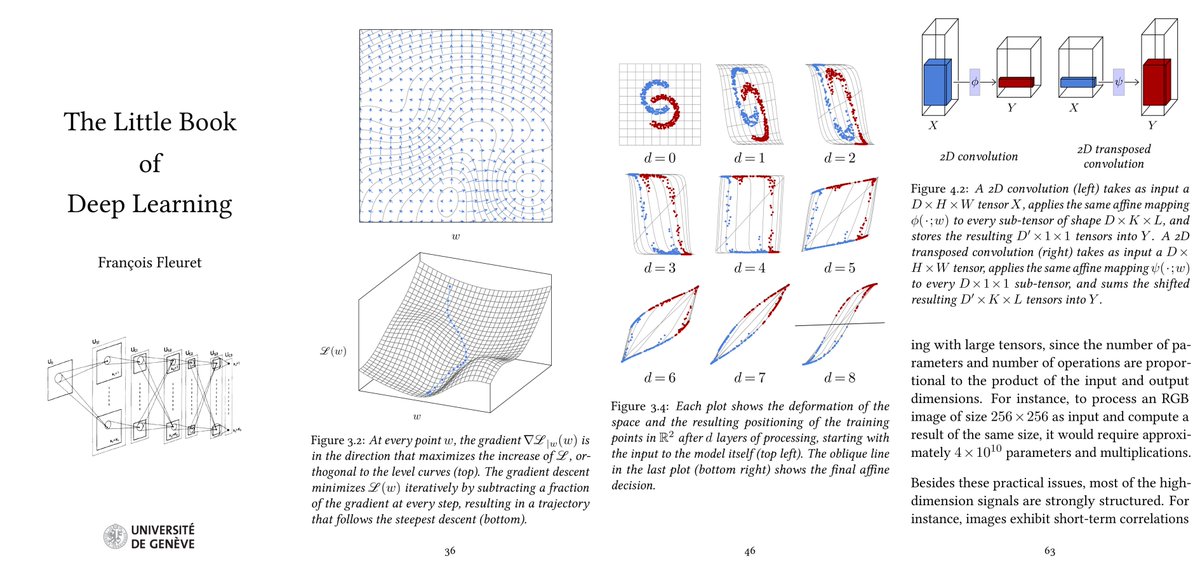

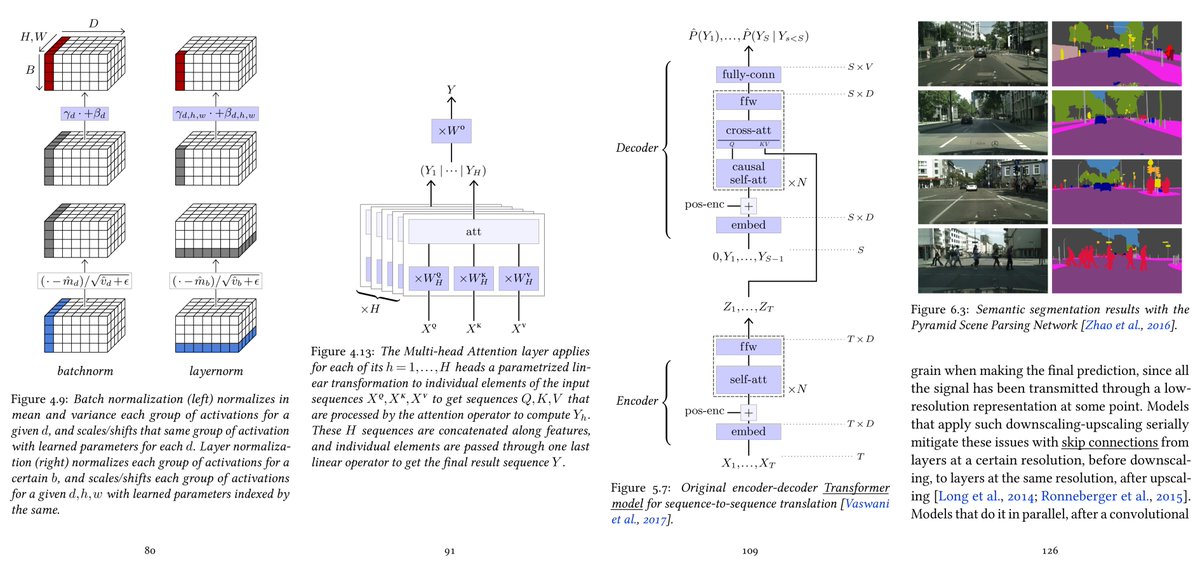

My deep learning course @unige_en is available online: 1000+ slides and ~20h of screencasts, full of examples in @PyTorch. fleuret.org/dlc/ And my "Little Book of Deep Learning" is available as a phone-formatted PDF (400k downloads!): fleuret.org/lbdl/

When non-idiots say that "scaling" is enough to make the AGI-kraken, they mean scaling

I stand by this, but the data problem remains. Without any type of "non-verbal embodiment" to get a sense of what reality is by "direct observation", such a model would have to be spoon-fed tons and tons of (synthetic?) boring texts about spatiality, temporality, and causality.

If I remember correctly, a couple months after GPT-4 came out your hot take was that scaling a GPT + some kind of smart scratchpad / internal monologue technique may be all we need. I think that was probably spot on, because today everybody is rushing for the latter (o1, etc.).

People are like "@sama believes this", while @openai is pouring resources into developing Sora and reasoning models.

I'm a bit confused by the "scaling is over" thing. Nobody really believed we'd get the full-fledged reasoning and creative AI god machine by training a ginormous 2019 GPT on 100x more data, right?

GPT is the A-bomb announcing (and possibly functionally at the core of) the H-bomb. "What a positive and cheerful analogy, François!"

Gaetz is a distraction. Tulsi is their biggest priority. That's why the two were announced together.

Of the two nominees announced today, Tulsi is the big threat to America, not Gaetz. Wish more people understood that.


@francoisfleuret Seen on the François Chollet AMA news.ycombinator.com/item?id=421308… ☺️

"It's actually a plateau"

We are PSEUDO random generators, with the same seed. And this also explains why LLMs are so good: any fancy question you make up is already in the data set a hundred times.

It's hard to grasp how our complicated cognitive process ends up generating a very predictable outcome. Those jokes are to this waitress what hiding spots are to a professional burglar: they know exactly what the [low-entropy] distribution of outcomes is.


There was a defining moment for me once when I was twenty-something. I made a joke with a waitress (I am a funny man), nothing rude or disrespectful, just a light joke that I was happy with. And for some reason I immediately asked her, "Is it a standard dumb patron's joke?" 1/2

The notion of "power differential" and the principle that you should never "punch down" are IMO excellent, and they clarify how some behaviors are a sign of a terrible character.


