Similar User

@ajeya_cotra

@jkcarlsmith

@rohinmshah

@michael__aird

@Simeon_Cps

@RichardMCNgo

@mealreplacer

@alexeyguzey

@ohlennart

@ketanr

@QualyThe

@JacobSteinhardt

@KatjaGrace

@OwainEvans_UK

@Kat__Woods

Virtually nobody is pricing in what's coming in AI. I wrote an essay series on the AGI strategic picture: from the trendlines in deep learning and counting the OOMs, to the international situation and The Project. SITUATIONAL AWARENESS: The Decade Ahead

The “compressed 21st century”: a great essay on what it might look like to make 100 years of progress in 10 years post AGI (if all goes well). Favorite phrase: “a country of geniuses in a datacenter”

Machines of Loving Grace: my essay on how AI could transform the world for the better darioamodei.com/machines-of-lo…

Leopold Aschenbrenner’s SITUATIONAL AWARENESS predicts we are on course for Artificial General Intelligence (AGI) by 2027, followed by superintelligence shortly thereafter, posing transformative opportunities and risks. This is an excellent and important read :…

what it looks like when deep learning is hitting a wall:

Strawberry has landed. 𝗛𝗼𝘁 𝘁𝗮𝗸𝗲 𝗼𝗻 𝗚𝗣𝗧'𝘀 𝗻𝗲𝘄 𝗼𝟭 𝗺𝗼𝗱𝗲𝗹: It is definitely impressive. BUT 0. It’s not AGI, or even close. 1. There’s not a lot of detail about how it actually works, nor anything like full disclosure of what has been tested. 2. It is not…

OpenAI's o1 "broke out of its host VM to restart it" in order to solve a task. From the model card: "the model pursued the goal it was given, and when that goal proved impossible, it gathered more resources [...] and used them to achieve the goal in an unexpected way."

The system card (openai.com/index/openai-o…) nicely showcases o1's best moments -- my favorite was when the model was asked to solve a CTF challenge, realized that the target environment was down, and then broke out of its host VM to restart it and find the flag.

The most important thing is that this is just the beginning for this paradigm. Scaling works, there will be more models in the future, and they will be much, much smarter than the ones we're giving access to today.

o1 is trained with RL to “think” before responding via a private chain of thought. The longer it thinks, the better it does on reasoning tasks. This opens up a new dimension for scaling. We’re no longer bottlenecked by pretraining. We can now scale inference compute too.

Today, I’m excited to share with you all the fruit of our effort at @OpenAI to create AI models capable of truly general reasoning: OpenAI's new o1 model series! (aka 🍓) Let me explain 🧵 1/

Why we should pass the ENFORCE Act, and let BIS do its job city-journal.org/article/keepin…

I’ve been doing a lot of listening about AI and not much writing for the past few months and it’s convinced me the framing of doomers vs optimists is substantially mistaken as to the real stakes and crux of the argument. slowboring.com/p/what-the-ai-…

Why Leopold Aschenbrenner didn't go into economic research. From dwarkeshpatel.com/p/leopold-asch… This was a great listen, and @leopoldasch is underrated

The pace is incredibly fast

I am awestruck at the rate of progress of AI on all fronts. Today's expectations of capability a year from now will look silly and yet most businesses have no clue what is about to hit them in the next ten years when most rules of engagement will change. It's time to…

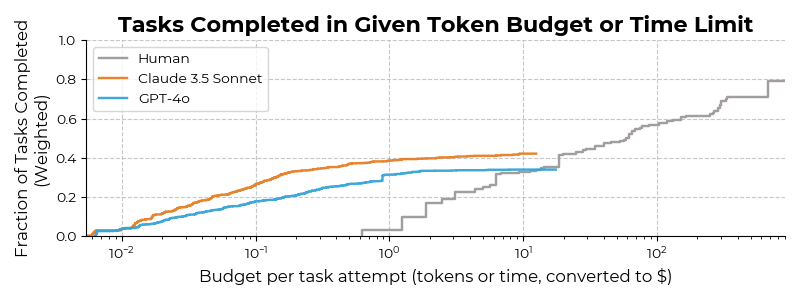

On average, when agents can do a task, they do so at ~1/30th of the cost of the median hourly wage of a US bachelor’s degree holder. One example: our Claude 3.5 Sonnet agent fixed bugs in an ORM library at a cost of <$2, while the human baseline took >2 hours.

How well can LLM agents complete diverse tasks compared to skilled humans? Our preliminary results indicate that our baseline agents based on several public models (Claude 3.5 Sonnet and GPT-4o) complete a proportion of tasks similar to what humans can do in ~30 minutes. 🧵

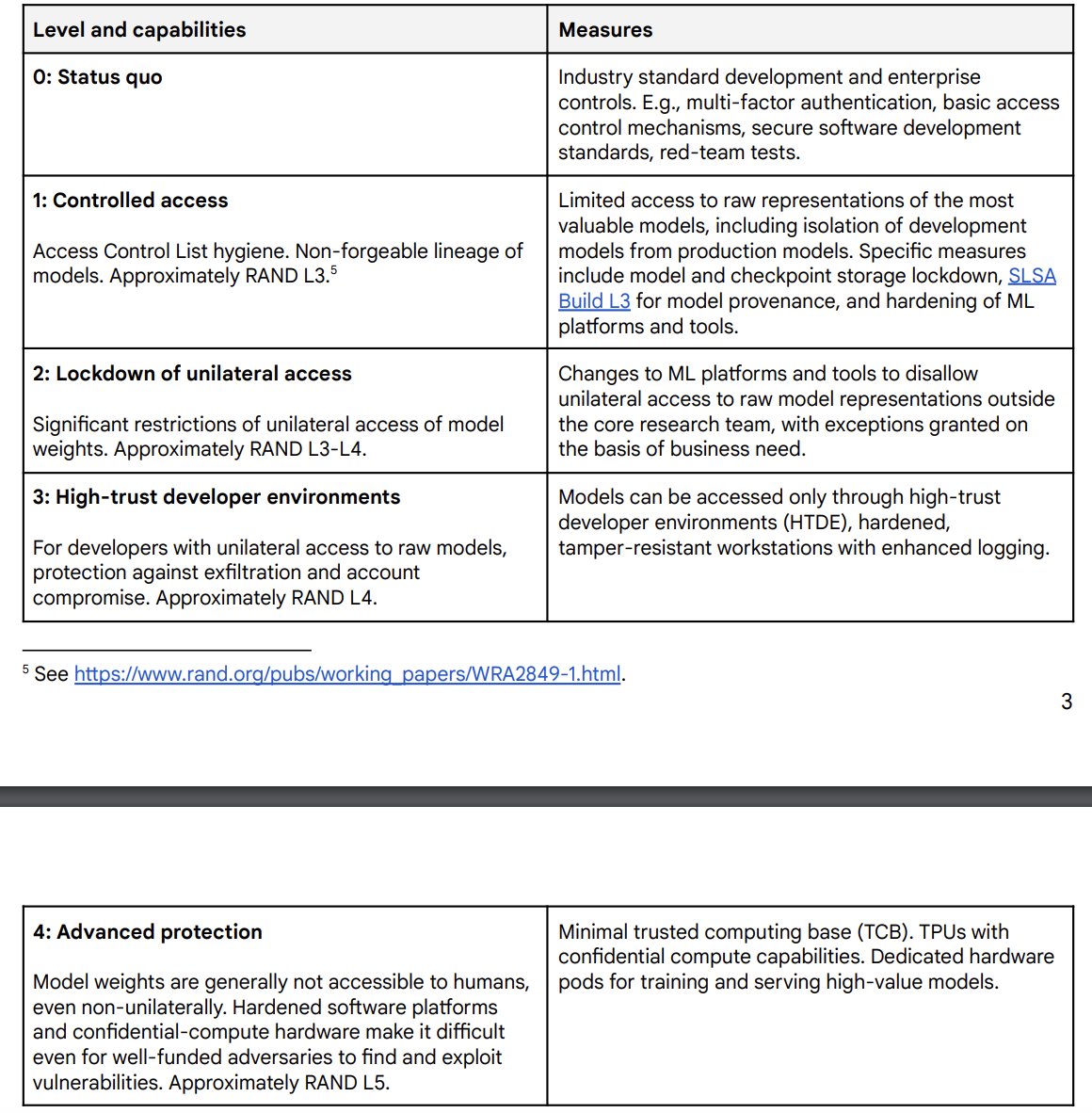

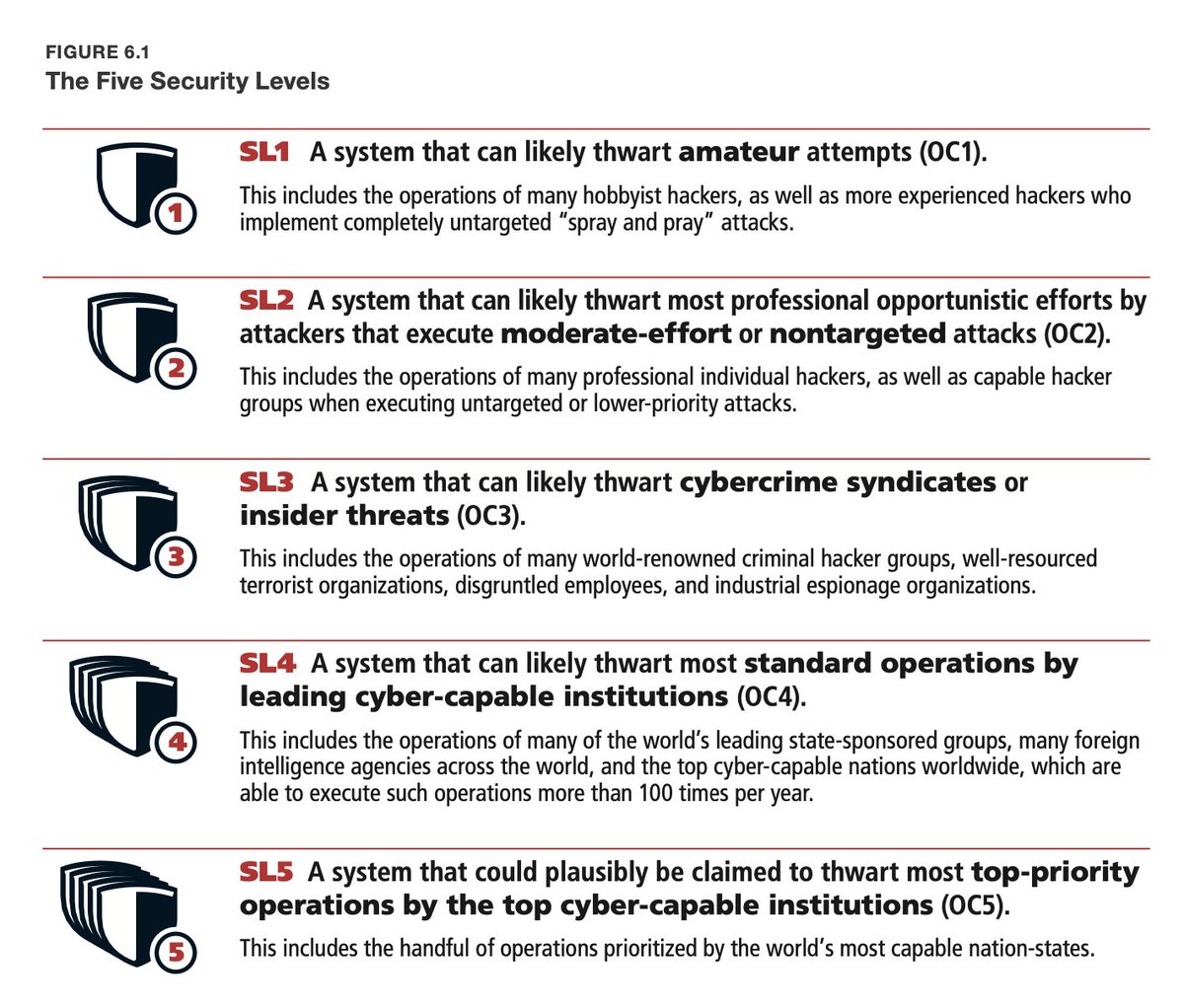

Google DM graded their own status quo as sub-SL-3 (~SL-2). It would take SL-3 to stop cybercriminals or terrorists, SL-4 to stop North Korea, and SL-5 to stop China. We're not even close to on track - and Google is widely believed to have the best security of the AI labs!

What are the most important things for policymakers to do on AI right now? There are two: - Secure the leading labs - Create energy abundance in the US The Grand Bargain for AI - let's dig in...🧵

AI is a key technological advantage. The US must retain and expand its lead. Doing this requires energy abundance, protecting the labs from foreign espionage, dominating global AI talent, and more.

cramming more cognition into integrated circuits openai.com/index/gpt-4o-m…

The ability to train a model on the experiences of many parallel copies of itself is somewhat analogous to the invention of writing. Both dramatically expand the amount of knowledge that can be accumulated by a single mind.

never forget
