Chunting Zhou

@violet_zctResearch Scientist at FAIR. PhD @CMU. she/her.

Similar User

@WenhuChen

@sewon__min

@HannaHajishirzi

@Diyi_Yang

@WeijiaShi2

@yizhongwyz

@xiangrenNLP

@LukeZettlemoyer

@billyuchenlin

@hengjinlp

@ysu_nlp

@fredahshi

@_Hao_Zhu

@hhsun1

@ml_perception

Introducing *Transfusion* - a unified approach for training models that can generate both text and images. arxiv.org/pdf/2408.11039 Transfusion combines language modeling (next token prediction) with diffusion to train a single transformer over mixed-modality sequences. This…

This is the most important paper in a long time . It shows with strong evidence we are reaching the limits of quantization. The paper says this: the more tokens you train on, the more precision you need. This has broad implications for the entire field and the future of GPUs🧵

[1/7] New paper alert! Heard about the BitNet hype or that Llama-3 is harder to quantize? Our new work studies both! We formulate scaling laws for precision, across both pre and post-training arxiv.org/pdf/2411.04330. TLDR; - Models become harder to post-train quantize as they…

Fantastic video generation model by @imisra_ and the team!

So, this is what we were up to for a while :) Building SOTA foundation models for media -- text-to-video, video editing, personalized videos, video-to-audio One of the most exciting projects I got to tech lead at my time in Meta!

Jokes aside, it's fun to see innovation beyond the standard causal/autoregressive next-token generation in text. Transfusion is another cool work in this vein (that already used FlexAttention :P) twitter.com/violet_zct/sta…

Introducing *Transfusion* - a unified approach for training models that can generate both text and images. arxiv.org/pdf/2408.11039 Transfusion combines language modeling (next token prediction) with diffusion to train a single transformer over mixed-modality sequences. This…

The transformer-land and diffusion-land have been separate for too long. There were many attempts to unify before, but they lose simplicity and elegance. Time for a transfusion🩸to revitalize the merge!

Introducing *Transfusion* - a unified approach for training models that can generate both text and images. arxiv.org/pdf/2408.11039 Transfusion combines language modeling (next token prediction) with diffusion to train a single transformer over mixed-modality sequences. This…

Meta presents Transfusion: Predict the Next Token and Diffuse Images with One Multi-Modal Model - Can generate images and text on a par with similar scale diffusion models and language models - Compresses each image to just 16 patches arxiv.org/abs/2408.11039

Transfusion: Predict the Next Token and Diffuse Images with One Multi-Modal Model abs: arxiv.org/abs/2408.11039 New paper from Meta that introduces Transfusion, a recipe for training a model that can seamlessly generate discrete and continuous modalities. The authors pretrain a…

Transfusion Predict the Next Token and Diffuse Images with One Multi-Modal Model discuss: huggingface.co/papers/2408.11… We introduce Transfusion, a recipe for training a multi-modal model over discrete and continuous data. Transfusion combines the language modeling loss function…

Great work from @cHHillee and the team! FlexAttention is really easy to use with highly expressive designed user interface , also with strong profiles compared to Flash!

For too long, users have lived under the software lottery tyranny of fused attention implementations. No longer. Introducing FlexAttention, a new PyTorch API allowing for many attention variants to enjoy fused kernels in a few lines of PyTorch. pytorch.org/blog/flexatten… 1/10

arxiv.org/abs/2407.08351 LM performance on existing benchmarks is highly correlated. How do we build novel benchmarks that reveal previously unknown trends? We propose AutoBencher: it casts benchmark creation as an optimization problem with a novelty term in the objective.

Beyond excited to be starting this company with Ilya and DG! I can't imagine working on anything else at this point in human history. If you feel the same and want to work in a small, cracked, high-trust team that will produce miracles, please reach out.

Superintelligence is within reach. Building safe superintelligence (SSI) is the most important technical problem of our time. We've started the world’s first straight-shot SSI lab, with one goal and one product: a safe superintelligence. It’s called Safe Superintelligence…

🚀 Excited to introduce Chameleon, our work in mixed-modality early-fusion foundation models from last year! 🦎 Capable of understanding and generating text and images in any sequence. Check out our paper to learn more about its SOTA performance and versatile capabilities!

Newly published work from FAIR, Chameleon: Mixed-Modal Early-Fusion Foundation Models. This research presents a family of early-fusion token-based mixed-modal models capable of understanding & generating images & text in any arbitrary sequence. Paper ➡️ go.fb.me/7rb19n

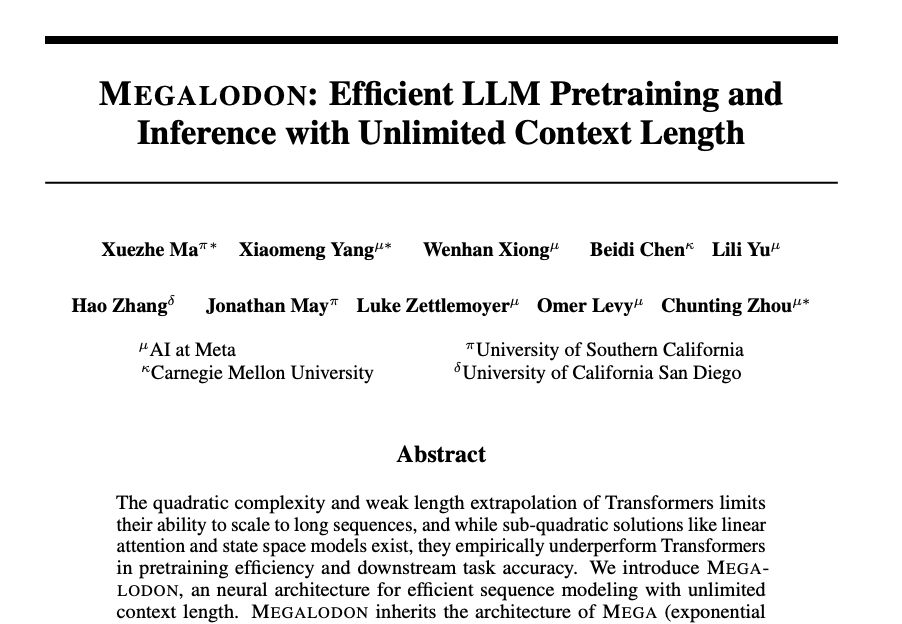

Meta announces Megalodon Efficient LLM Pretraining and Inference with Unlimited Context Length The quadratic complexity and weak length extrapolation of Transformers limits their ability to scale to long sequences, and while sub-quadratic solutions like linear attention and

United States Trends

- 1. Mike 1,83 Mn posts

- 2. Serrano 241 B posts

- 3. #NetflixFight 73,3 B posts

- 4. Canelo 16,8 B posts

- 5. Father Time 10,7 B posts

- 6. Logan 79,4 B posts

- 7. #netflixcrash 16,2 B posts

- 8. He's 58 27,1 B posts

- 9. Rosie Perez 15,1 B posts

- 10. Boxing 304 B posts

- 11. ROBBED 101 B posts

- 12. #buffering 11 B posts

- 13. Shaq 16,3 B posts

- 14. VANDER 4.847 posts

- 15. My Netflix 83,6 B posts

- 16. Roy Jones 7.240 posts

- 17. Muhammad Ali 19,2 B posts

- 18. Tori Kelly 5.320 posts

- 19. Ramos 69,7 B posts

- 20. Cedric 22,1 B posts

Who to follow

-

Wenhu Chen

Wenhu Chen

@WenhuChen -

Sewon Min

Sewon Min

@sewon__min -

Hanna Hajishirzi

Hanna Hajishirzi

@HannaHajishirzi -

Diyi Yang

Diyi Yang

@Diyi_Yang -

Weijia Shi

Weijia Shi

@WeijiaShi2 -

Yizhong Wang

Yizhong Wang

@yizhongwyz -

Sean (Xiang) Ren

Sean (Xiang) Ren

@xiangrenNLP -

Luke Zettlemoyer

Luke Zettlemoyer

@LukeZettlemoyer -

Bill Yuchen Lin 🤖

Bill Yuchen Lin 🤖

@billyuchenlin -

Heng Ji

Heng Ji

@hengjinlp -

Yu Su @EMNLP

Yu Su @EMNLP

@ysu_nlp -

Freda Shi

Freda Shi

@fredahshi -

Hao Zhu 朱昊

Hao Zhu 朱昊

@_Hao_Zhu -

Huan Sun (OSU)

Huan Sun (OSU)

@hhsun1 -

Mike Lewis

Mike Lewis

@ml_perception

Something went wrong.

Something went wrong.