Hao Zhang

@haozhangml

Asst. Prof. @HDSIUCSD and @ucsd_cse running @haoailab. Cofounder and runs @lmsysorg. 20% with @SnowflakeDB


Check out our latest blogpost discussing a better metric -- goodput (throughput s.t. latency constraints) -- for LLM serving, and our new technique prefill-decoding disaggregation that optimizes goodput and achieves lower cost-per-query and high service quality at the same time!

Still optimizing throughput for LLM Serving? Think again: Goodput might be a better choice! Splitting prefill from decode to different GPUs yields:

- up to 4.48x goodput
- up to 10.2x stricter latency criteria

Blog: hao-ai-lab.github.io/blogs/distserv…

Paper: arxiv.org/abs/2401.09670
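The post above describes goodput as throughput subject to latency constraints. Here is a minimal sketch of that idea, not the blog's or paper's implementation. It assumes each request has a measured time to first token and time per output token; the field names, the two latency thresholds, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class RequestLatency:
    # Hypothetical per-request measurements, in seconds.
    ttft_s: float  # time to first token
    tpot_s: float  # average time per output token


def throughput(requests: Sequence[RequestLatency], duration_s: float) -> float:
    """Completed requests per second, ignoring latency."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return len(requests) / duration_s


def goodput(
    requests: Sequence[RequestLatency],
    duration_s: float,
    ttft_limit_s: float,
    tpot_limit_s: float,
) -> float:
    """Requests per second that met BOTH latency limits (assumed constraints)."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    within_limits = sum(
        1
        for r in requests
        if r.ttft_s <= ttft_limit_s and r.tpot_s <= tpot_limit_s
    )
    return within_limits / duration_s


# Made-up sample: four requests completed in a 2-second window.
sample = [
    RequestLatency(ttft_s=0.25, tpot_s=0.05),  # meets both limits
    RequestLatency(ttft_s=0.75, tpot_s=0.05),  # first token too slow
    RequestLatency(ttft_s=0.25, tpot_s=0.25),  # decoding too slow
    RequestLatency(ttft_s=0.125, tpot_s=0.0625),  # meets both limits
]

raw = throughput(sample, duration_s=2.0)
good = goodput(sample, duration_s=2.0, ttft_limit_s=0.5, tpot_limit_s=0.125)
print(f"throughput={raw} req/s, goodput={good} req/s")
```

In this made-up sample, only half of the completed requests count toward goodput. A system can look fast on raw throughput while many of its requests miss their latency targets, and goodput is meant to expose that gap.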

📈 more to come

Glad to see both projects we have been working on since last year get recognized. More to come ✊🤟

Thank you @sequoia for supporting the open source community! We believe openness is the way for the future infrastructure of AI, and humbled by our amazing users, contributors, and supporters! ❤️

I will be at this event in person tonight -- happy to chat about anything about AI/LLMs! 🙂

The last in-person vLLM meetup of the year is happening in two weeks, on November 13, at @SnowflakeDB HQ! Join the vLLM developers and engineers from Snowflake AI Research to chat about the latest LLM inference optimizations and your 2025 vLLM wishlist! lu.ma/h0qvrajz

It's official! We are now an incubation project @LFAIDataFdn We firmly believe in open governance and are committed to ensuring that vLLM remains a shared community project. ❤️

🚀 Introducing vLLM, LF AI & Data’s newest incubation project! vLLM is a high-throughput, memory-efficient engine for LLM inference & serving, solving the challenge of slow model serving. Read the full announcement ➡️ hubs.la/Q02V_5270 #opensource #oss

Speculative decoding is a really interesting problem; the community has published many papers in a short amount of time, but very few of them have discussed how it can be made useful in a real serving system (they mostly assume batch size = 1). This is a nice step.…

exciting new development!!

🔥New benchmark: Preference Proxy Evaluations (PPE) Can reward models guide RLHF? Can LLM judge replace real human evals? PPE addresses these questions! Highlights: - Real-world human preference from Chatbot Arena💬 - 16,000+ prompts and 32,000+ diverse model responses🗿 -…

Congrats @_parasj @ajayj_ . This is huge for the community and my students are all excited about what the model can enable!!!

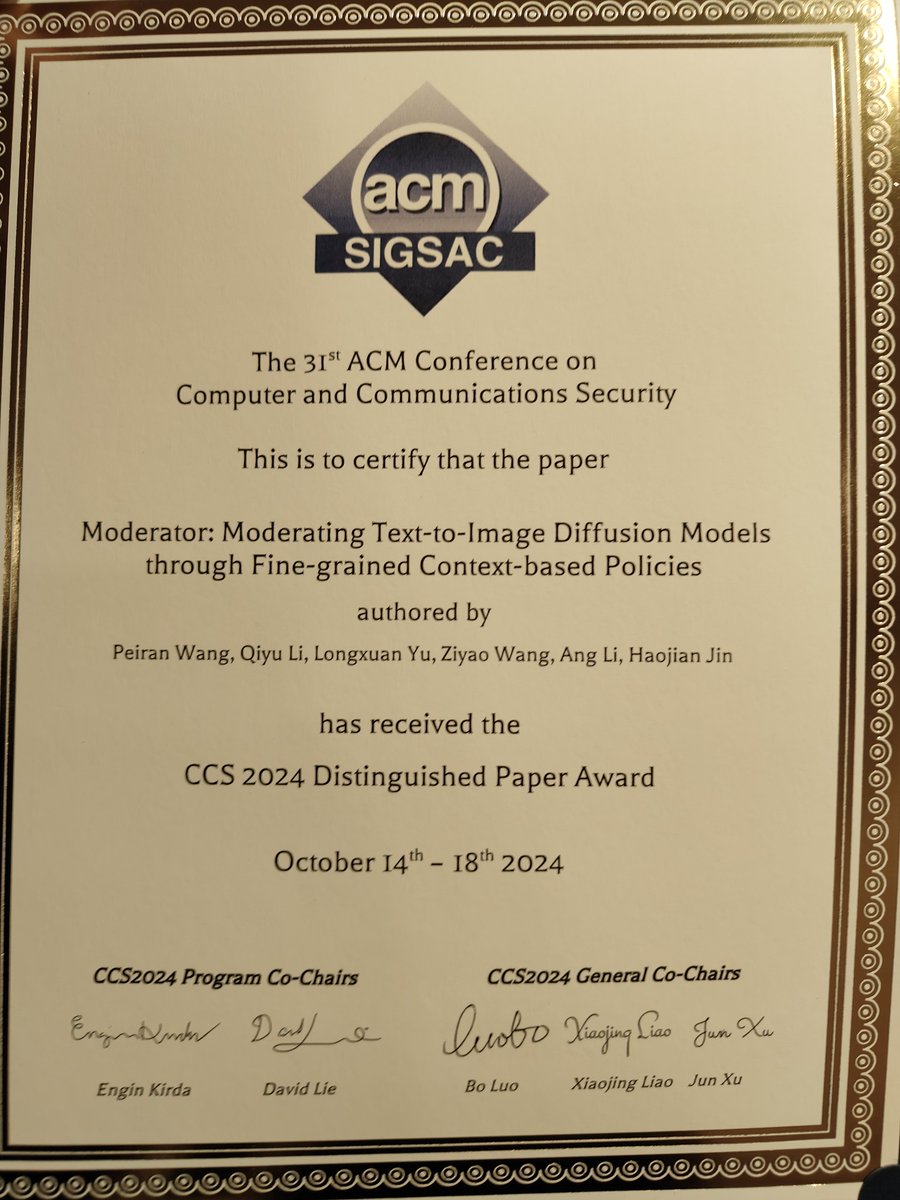

wow, congrats @angcharlesli @haojian_jin and @HDSIUCSD !!

Thrilled to share that our collaborative work with @haojian_jin got the Distinguished Paper Award at CCS'24. Congrats to all the co-authors! @eceumd paper: arxiv.org/pdf/2408.07728

whoo, UCSD is doing great!!

And big changes in the ranking of various AI labs in the Zeta Alpha top-100 most cited papers. Microsoft made Google dance, also in the most cited AI papers of 2023. Big moves up in the ranking for AI Research at @CarnegieMellon @MIT @hkust and @UCSanDiego

Join us on Wednesday, October 16th at 4pm PT to explore how we’re optimizing LLM serving under stricter latency requirements by maximizing goodput! 🙌 Link: hubs.la/Q02T9pcR0

Join us for our next #PyTorch Expert Exchange Webinar on Wednesday, October 16th at 4 PM PT ➡️ DistServe: disaggregating prefill and decoding for goodput-optimized LLM inference with @haozhangml Asst. Prof. at @HDSIUCSD & @ucsd_cse Tune in at: hubs.la/Q02T9pcR0

I'll talk about DistServe (hao-ai-lab.github.io/blogs/distserv…) at the PyTorch webinar this Wed. Looking forward to it 😃. Thanks to @PyTorch and @AIatMeta for the support!


My @Google colleague and longtime @UCBerkeley faculty member David Patterson has a great essay out in this month's Communications of the ACM (@TheOfficialACM):🎉 "Life Lessons from the First Half-Century of My Career Sharing 16 life lessons, and nine magic words." I saw an…

BREAKING NEWS The Royal Swedish Academy of Sciences has decided to award the 2024 #NobelPrize in Chemistry with one half to David Baker “for computational protein design” and the other half jointly to Demis Hassabis and John M. Jumper “for protein structure prediction.”

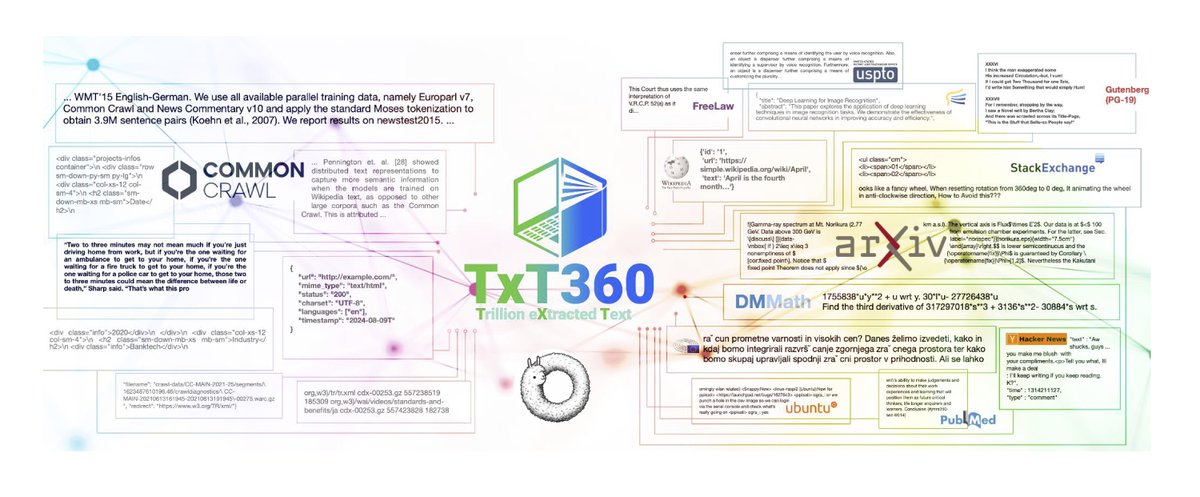

📢📢 We are releasing TxT360: a globally deduplicated dataset for LLM pretraining

🌐 99 Common Crawls

📘 14 Curated Sources

👨‍🍳 recipe to easily adjust data weighting and train the most performant models

Dataset: huggingface.co/datasets/LLM36…

Blog: huggingface.co/spaces/LLM360/…

✨ Check out our revamped repo! Analysis360: Open Implementations of LLM Analyses 🔗 github.com/LLM360/Analysi… Featuring tutorials on: 💾 Data memorization 🧠 LLM unlearning ⚖️ AI safety, toxicity, & bias 🔍 Mechanistic interpretability 📊 Evaluation metrics

Former @SCSatCMU faculty member Geoffrey Hinton has been awarded the 2024 Nobel Prize in Physics! 👏 Hinton, now at @UofT, was recognized alongside John J. Hopfield of @Princeton for their work in machine learning with artificial neural networks. ➡️ cmu.is/Hinton-Nobel-P…

Geoff and John are a truly inspired choice for the Nobel Prize in Physics. Not only because they have done groundbreaking work for machine learning research, but also since this choice reflects an understanding that machine learning methods are changing how science is done (1/2)

Congrats @geoffreyhinton, who really laid the foundations for deep learning which enables so many breakthroughs. I quite like @bschoelkopf's interpretation x.com/bschoelkopf/st… that AI/ML is gradually yet fundamentally changing *how science is done*, and this is truly…

BREAKING NEWS The Royal Swedish Academy of Sciences has decided to award the 2024 #NobelPrize in Physics to John J. Hopfield and Geoffrey E. Hinton “for foundational discoveries and inventions that enable machine learning with artificial neural networks.”

Join our online meetup on Oct. 16 for efficient LLM deployment and serving, co-hosted by SGLang, FlashInfer, and MLC LLM! 🥳 You are all welcome to join by filling out the Google form forms.gle/B3YeedLxmrrhL1… It will cover topics such as low CPU overhead scheduling, DeepSeek MLA…
