Lapsa-Malawski

@munhitsu

Tweets on Technology and Art. Views my own. @[email protected]


I loved gevent. It brought all the benefits of an event loop that I needed while leaving me with a straightforward API on monkey-patched threads. I never could understand why it was treated as an ugly child.

“I'm now convinced that async/await is, in fact, a bad abstraction for most languages, and we should be aiming for something better instead and that I believe to be thread.” lucumr.pocoo.org/2024/11/18/thr…
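To make the quoted argument concrete, here is a minimal sketch of the thread-first style, using only Python's standard library. It is not gevent and not code from the linked post; `fetch` and `fetch_all` are hypothetical names, and `time.sleep` stands in for blocking network I/O.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def fetch(name, delay=0.05):
    """Hypothetical blocking task; time.sleep stands in for network I/O."""
    time.sleep(delay)
    return f"{name}: done"


def fetch_all(names, workers=4):
    """Run the blocking calls concurrently on a small thread pool."""
    # Executor.map returns results in the same order as the inputs.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fetch, names))


if __name__ == "__main__":
    print(fetch_all(["a", "b", "c"]))
```

The calling code stays plain and sequential-looking: no `async def`, no `await`, and `fetch` can be reused unchanged outside the pool.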

Nice, I might eventually be able to use the letter “m” in passwords for some ancient services. But then again, if they are already ancient, will their CISO actually care about the new NIST guidance? mastodon.social/@LukaszOlejnik…

Explains why I found myself forced to not just block Musk, but also mute the terms “Elon”, “Musk”, “Elonmusk” to get a Twitter experience where I wouldn’t have every second tweet of his on my timeline. Case study worthy on how you degrade a social network long-term

As Apple Intelligence is rolling out to our beta users today, we are proud to present a technical report on our Foundation Language Models that power these features on devices and cloud: machinelearning.apple.com/research/apple…. 🧵

We live in the future; it's just not distributed evenly: hakaimagazine.com/videos-visuals…

Next time you estimate development, think about leaky pipelines: hiandrewquinn.github.io/til-site/posts…

Convolutional Neural Networks in action

Yann LeCun says he is working to develop an entirely new generation of AI systems that he hopes will power machines with human-level intelligence. It could take up to 10 years to achieve, he tells the @FT in an interview on.ft.com/3KbShLF

I'm playing with G-Eval to test LLM outputs using an LLM. It roughly works until it doesn't. How am I supposed to reason about a test result like: "the actual output's prompt is in Polish which mismatches the language-prompt specified as Polish, aligning correctly" #llm #gpt #deepeval

honestly, Word, we have enough CPU to keep the table of contents updating automatically

For anyone interested, I've just written up my 'AI form extractor' experiment from a few weeks ago as a blog post timpaul.co.uk/posts/using-ai…

I’ve just been told by the staff at Pret A Manger that there is no water in espresso 🙈

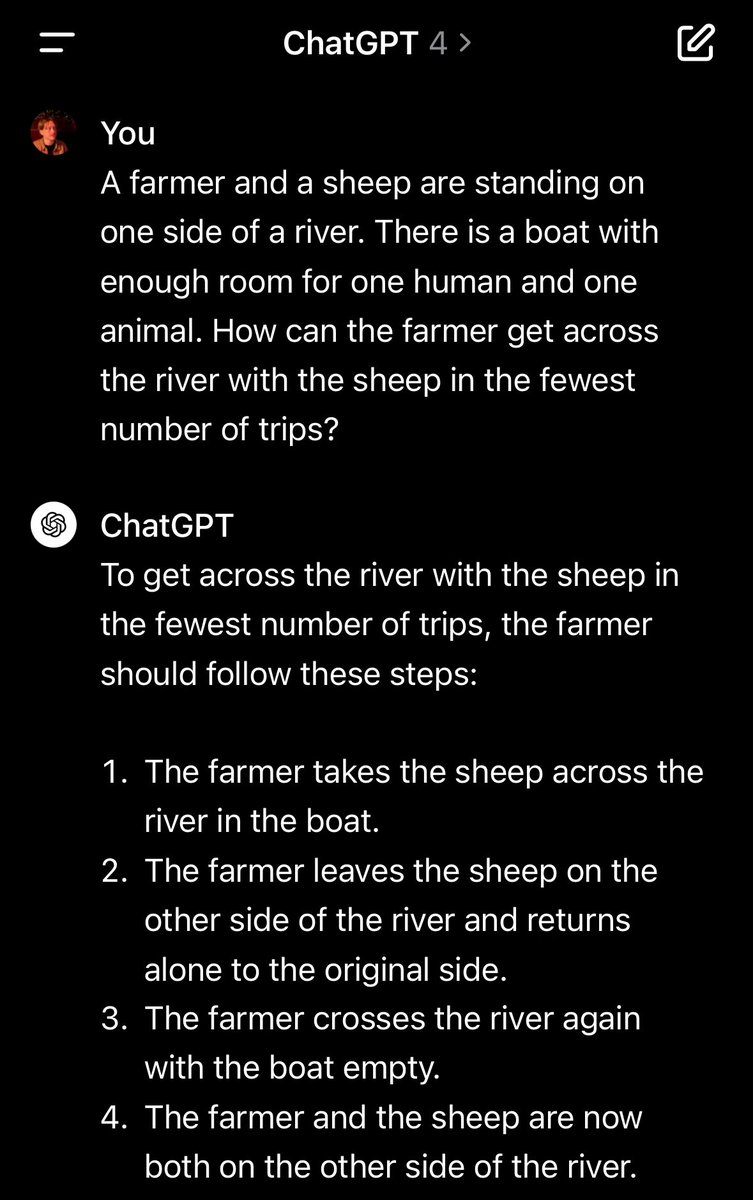

How to be as "smart" as Auto-Regressive LLMs:

- memorize lots of problem statements together with recipes on how to solve them.
- to solve a new problem, retrieve the recipe whose problem statement superficially matches the new problem.
- apply the recipe blindly and declare…

There’s an art to distilling these to the absolute minimal necessary text. The human brain can’t comprehend how stupid these things are without practice.

At this point I feel like we understand pretty well what's going on with LLMs:

- Outputs are roughly equivalent to kernel smoothing over positional embeddings (arxiv.org/pdf/1908.11775…)
- The learned computation model is *probably* bounded by RASP-L (arxiv.org/pdf/2310.16028…)
- …
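For readers unfamiliar with the "kernel smoothing" framing, here is a rough sketch of the general idea, not the construction from the linked paper: softmax attention for a single query can be read as a kernel-weighted average of the values, with an exponential dot-product kernel. The function name `kernel_smooth` and the toy inputs are assumptions for illustration only.

```python
import math


def kernel_smooth(query, keys, values):
    """Kernel-weighted average of `values`, weighted by similarity of `query` to each key.

    The kernel is exp(q . k / sqrt(d)), so the normalised weights are exactly
    the softmax weights used in scaled dot-product attention.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    # Subtracting the max is for numerical stability; it cancels after normalisation.
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) / total for j in range(dim)]


if __name__ == "__main__":
    keys = [[1.0, 0.0], [0.0, 1.0]]
    values = [[0.0], [10.0]]
    # A query aligned with the first key pulls the output towards the first value.
    print(kernel_smooth([3.0, 0.0], keys, values))
```

Because the weights are positive and sum to one, the output is always a convex combination of the stored values, which is the sense in which the result is a "smoothed" lookup.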
