Chris Meserole

@chrismeserole

Executive Director, Frontier Model Forum | Former Director, Brookings A.I. & Emerging Tech Initiative

Deeply excited to serve as Executive Director of the Frontier Model Forum! We need a much better understanding of the capabilities and risks of the most advanced AI models if we're to realize their benefits, and the Forum will be tackling that challenge head-on.

Today, we are announcing Chris Meserole as the Executive Director of the Frontier Model Forum, and the creation of a new AI Safety Fund, a $10 million initiative to promote research in the field of AI safety. openai.com/blog/frontier-…

🚀Excited to announce our issue brief on #frontierAI #SafetyFrameworks! Drawn from the Frontier AI Safety Commitments and published frameworks, the brief reflects a preliminary consensus among FMF member firms on the core components of safety frameworks: frontiermodelforum.org/updates/issue-…

We’ve published a new document, Common Elements of Frontier AI Safety Policies, that describes the emerging practice for AI developer policies that address the Seoul Frontier AI Safety Commitments.

The mission of the Frontier Model Forum is to advance frontier AI safety by identifying best practices, supporting scientific research, and facilitating greater information-sharing. We’re excited to share our early progress in our latest update: frontiermodelforum.org/updates/progre…

Excited to announce our first issue brief documenting best practices for #FrontierAI safety evaluations! Read more about our recommended best practices for designing and interpreting frontier AI safety evaluations #AISafety #Evaluations frontiermodelforum.org/updates/early-…

Excited to see the announcement today of the UK’s new Systemic AI Safety fund, which will be a great complement to our AI Safety Fund. Very much look forward to all the important research it will support!

We are announcing new grants for research into systemic AI safety. Initially backed by up to £8.5 million, this program will fund researchers to advance the science underpinning AI safety. Read more: gov.uk/government/new…

Welcome @Amazon and @Meta to the @fmf_org! They join founding members @AnthropicAI, @Google, @Microsoft, and @OpenAI in advancing frontier AI safety – from best practice workshops to policymaker education and collaborative research. More here: frontiermodelforum.org/updates/amazon…

Congrats to the folks at GDM, this is an important step forward!

🔭 Very happy to share @GoogleDeepMind's exploratory framework to ensure future powerful capabilities from frontier models are detected and mitigated. We're starting with an initial focus on Autonomy, Biosecurity, Cybersecurity, and Machine Learning R&D. 🚀storage.googleapis.com/deepmind-media…

⚖️Measuring training compute appropriately is essential for ensuring that AI safety measures are applied in an effective and proportionate way. See here for a new brief on how we’re approaching the issue: frontiermodelforum.org/updates/issue-…

🚨 The Frontier Model Forum (@fmf_org) is hiring! They're looking for a *Research Science Lead* and *Research Associates*. frontiermodelforum.org/careers/

Great to see the announcement made today by @NIST to establish the USAISI’s new consortium. The @FMF_org is proud to be a founding member; we're excited to take part in the consortium and look forward to contributing to the shared goal of advancing AI safety.

We’re thrilled to participate in the US’s AI Safety Institute Consortium assembled by @NIST. Ongoing collaboration between government, civil society, and industry is critical to ensure that AI systems are as safe as they are beneficial.

The nerd in me has never felt so seen. Thanks @politico and @markscott82 for such a cool honor!

Honored that our Executive Director @chrismeserole was named @Politico's Wonk of the Week 🤓 thanks @markscott82

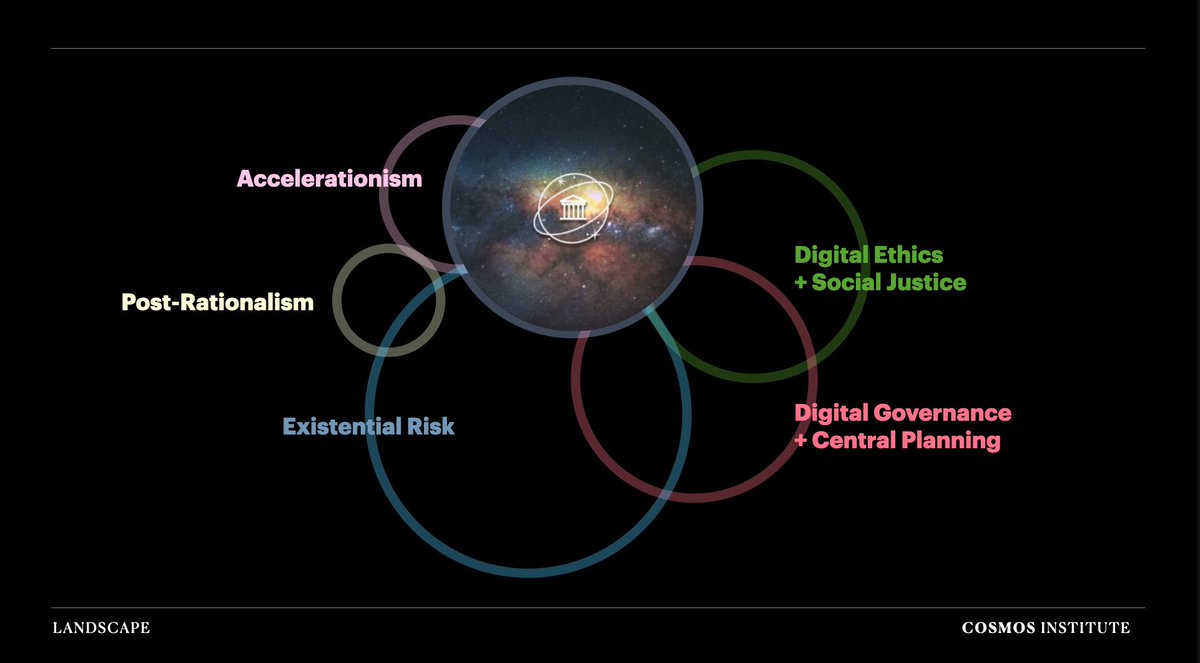

The more AI advances, the more we’ll need new efforts at the intersection of philosophy, ethics, and technology. Congrats to @mbrendan1 for his work on @cosmos_inst Look forward to following along ⤵️

1/ Introducing: The AI Philosophy Landscape. Full analysis in my bio, including a sneak preview of Cosmos Institute (@cosmos_inst), the philanthropic effort I've been building over the past few months. Thread ⬇️

As the year draws to a close, I'm proud of the work @fmf_org has done so far, and even more excited for the great work to come.

(4/4) To learn more about what we’ve been up to and our plans for 2024, check out our end of year blog post here and follow along: frontiermodelforum.org/updates/year-i…

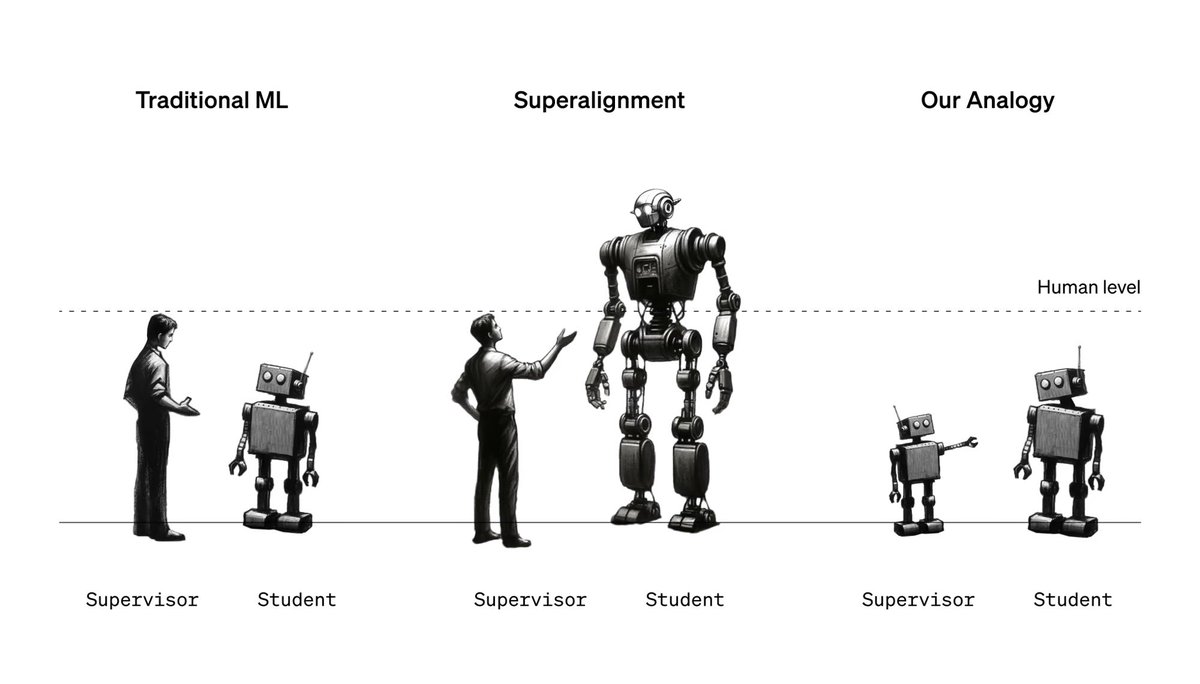

Intuitively, superhuman AI systems should "know" if they're acting safely. But can we "summon" such concepts from strong models with only weak supervision? Incredibly excited to finally share what we've been working on: weak-to-strong generalization. 1/ x.com/OpenAI/status/…

In the future, humans will need to supervise AI systems much smarter than them. We study an analogy: small models supervising large models. Read the Superalignment team's first paper showing progress on a new approach, weak-to-strong generalization: openai.com/research/weak-…

So excited to launch this today after many months of work, and proud & grateful to the team and all contributors who helped work on this. 🙏 If you're interested in emerging technology and public service, check it out! There's never been a more exciting time to get involved! 🚀

We're very excited to announce the publication of emergingtechpolicy.org, a new website compiling in-depth guides, expert advice, and resources for people interested in public service careers related to emerging technology & policy. A little preview of the content - 1/🧵

We’re at a pivotal moment in the history of AI. This important launch of an AI Safety Fund from the Frontier Model Forum will support independent research to test and evaluate the most advanced AI models. Key collaboration for @GoogleDeepMind

Today, we’re launching a new AI Safety Fund from the Frontier Model Forum: a commitment from @Google, @AnthropicAI, @Microsoft and @OpenAI of over $10 million to advance independent research to help test and evaluate the most capable AI models. ↓ dpmd.ai/3tJ9KWV