Vaishaal Shankar

@VaishaalML research @ apple. Trying to find artificial intelligence. Opinions are my own.

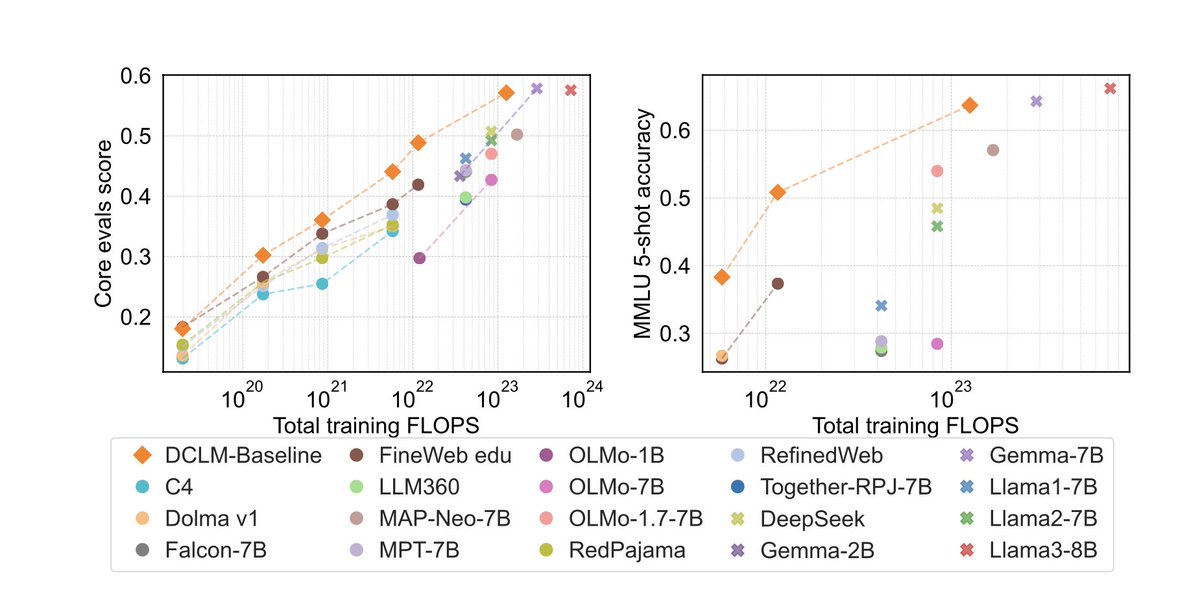

I am really excited to introduce DataComp for Language Models (DCLM), our new testbed for controlled dataset experiments aimed at improving language models. 1/x

As Apple Intelligence is rolling out to our beta users today, we are proud to present a technical report on our Foundation Language Models that power these features on devices and cloud: machinelearning.apple.com/research/apple…. 🧵

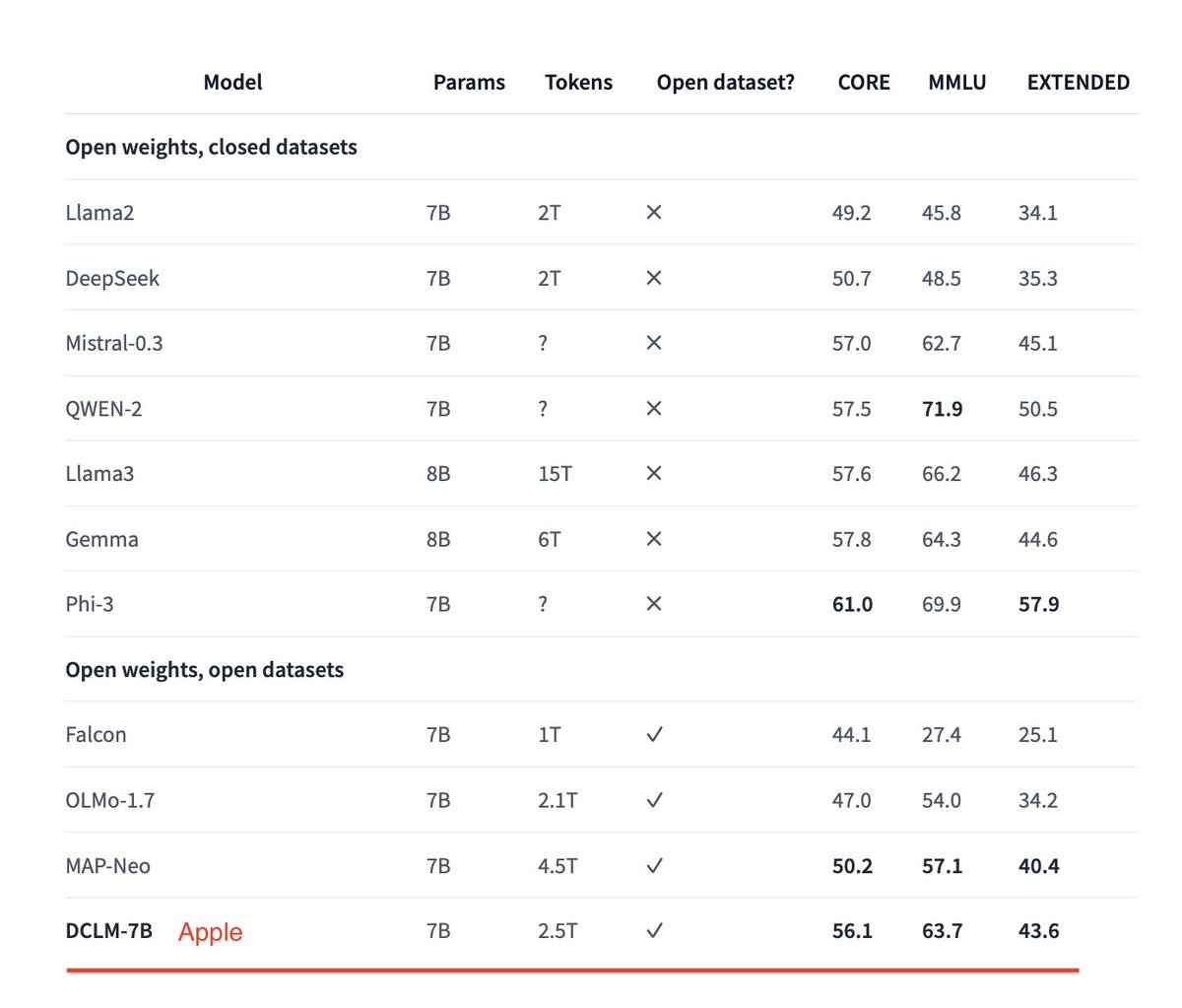

Datacomp-LM (DCLM) was presented today at the ICML FOMO workshop. DCLM is a data-centric benchmark for LLMs. It is also the state of the art open-source LLM and the state of the art open training dataset. Probably the most important finding is that data curation algorithms that…

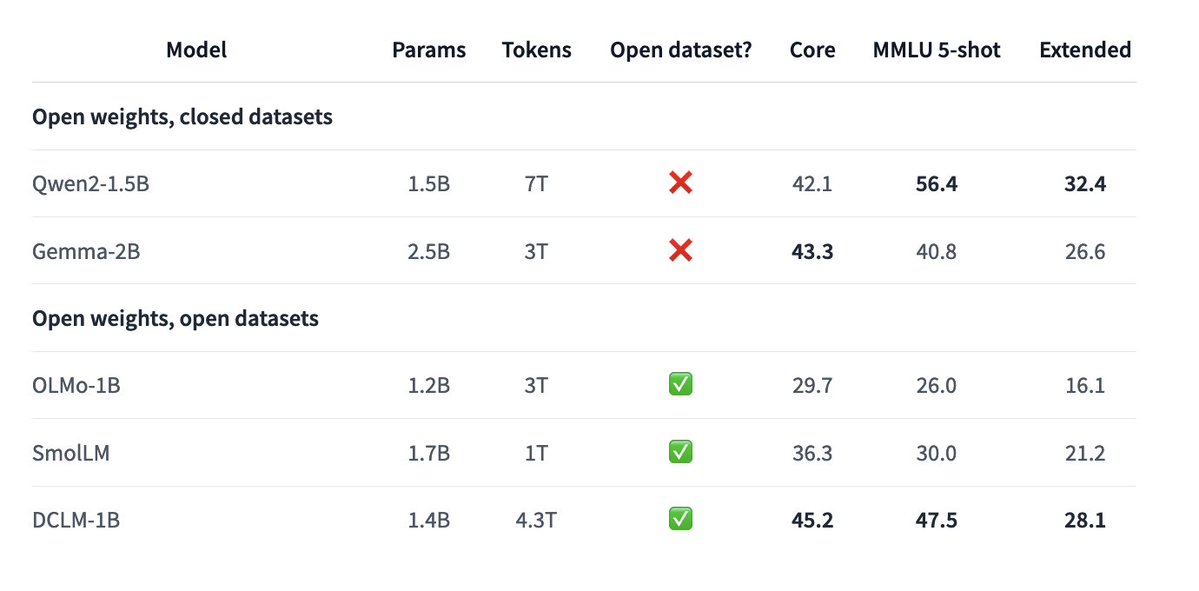

DCLM models keep on coming! This time we release (by far) the best open-data 1B model!

Excited to share our new-and-improved 1B models trained with DataComp-LM!

- 1.4B model trained on 4.3T tokens
- 5-shot MMLU 47.5 (base model) => 51.4 (w/ instruction tuning)
- Fully open models: public code, weights, dataset!

Kudos to Apple. They publish their new 7B model not only open weight, but also open data-set! And in this ranking Apple even takes 1st place! An outstanding achievement that others should take as an example and be just as transparent. huggingface.co/datasets/mlfou…

Kudos to @Apple for dropping a game-changer! 🚀 Their new 7B model outshines Mistral 7B and is fully open-sourced, complete with the pretraining dataset! 🔥🌟 huggingface.co/apple/DCLM-7B

🚀 Exciting news! @Apple has released its own open-source LLM, DCLM-7B. Everything is open-source, including the model weights and datasets. 💡Why should you be excited? 1. The datasets and tools released as part of this research lay the groundwork for future advancements in…

Apple shows off open AI prowess: new models outperform Mistral and Hugging Face offerings venturebeat.com/ai/apple-shows…

Apple joined the race of small models. DCLM-7B, released a few days back, is open-source in every respect, including weights, training code, and dataset! 👀 🧠 7B base model, trained on 2.5T tokens from open datasets. 🌐 Primarily English data with a 2048 context window. 📈…

I will be at ICML in Vienna next week. DM if you want to talk about language models, dataset design or any other exciting research :)

Apple at it again with "truly open-source models" 🔥🔥🔥

We have released our DCLM models on huggingface! To our knowledge these are by far the best performing truly open-source models (open data, open weight models, open training code) 1/5

Okay, first DFN, then that: Apple is now the king of open-source datasets, both vision and NLP

Apple released a 7B model that beats Mistral 7B - but the kicker is that they fully open sourced everything, also the pretraining dataset 🤯 huggingface.co/apple/DCLM-7B

Apple has entered the game! @Apple just released a 7B open-source LLM, weights, training code, and dataset! 👀 TL;DR: 🧠 7B base model, trained on 2.5T tokens from open datasets 🌐 Primarily English data and a 2048 context window 📈 Combined DCLM-BASELINE, StarCoder, and…
