Azade Nova

@Azade_na

Research Scientist at Google DeepMind

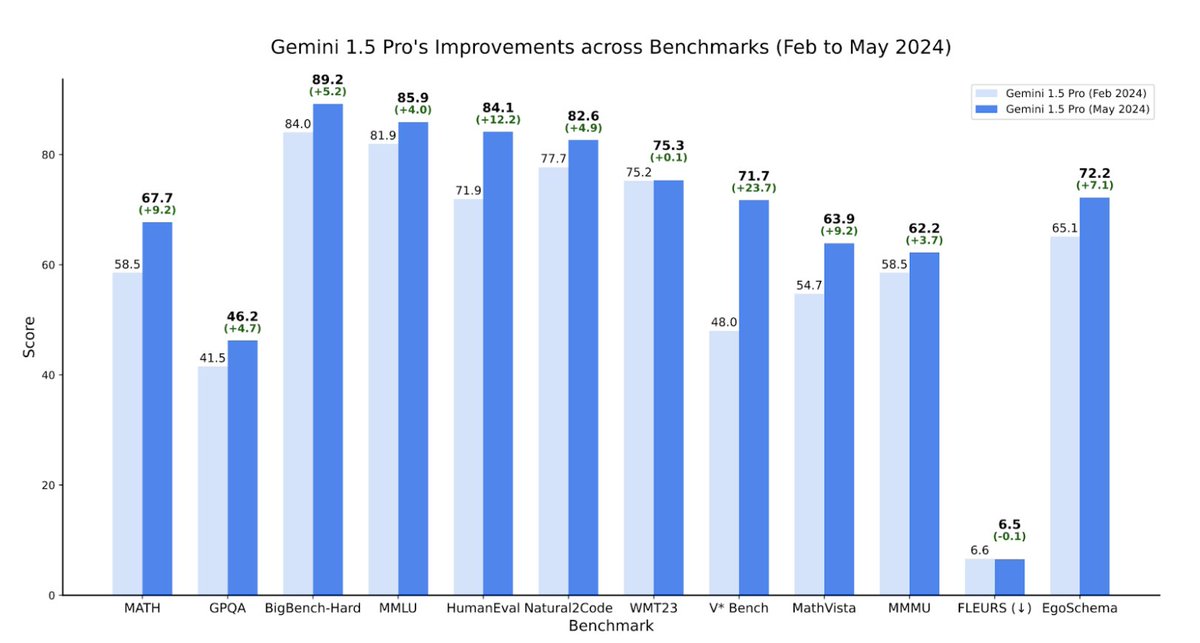

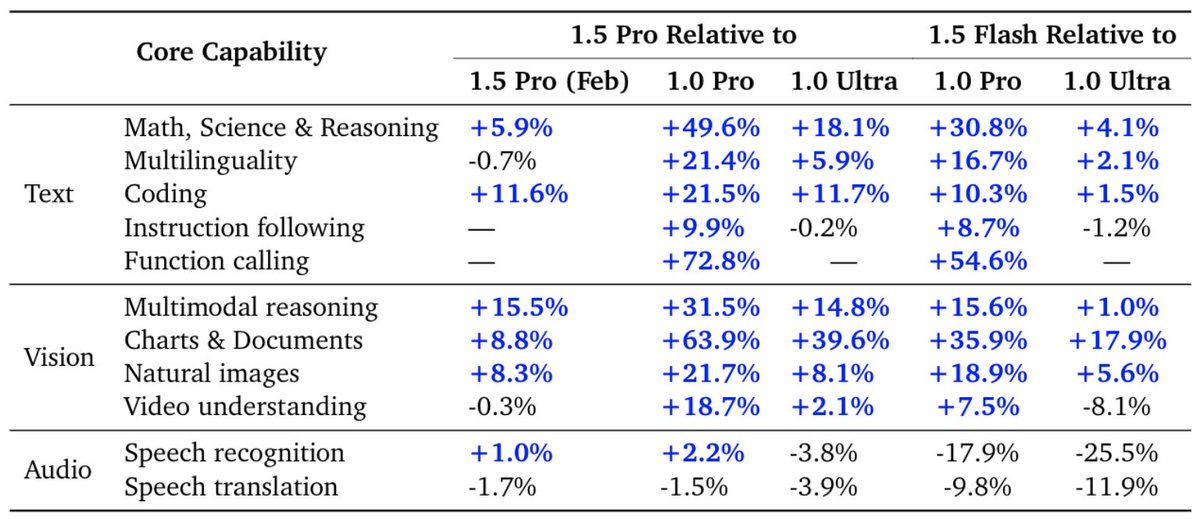

Happy to share what I've been working on for the past few months. Check out the updated Gemini 1.5 report. goo.gle/GeminiV1-5

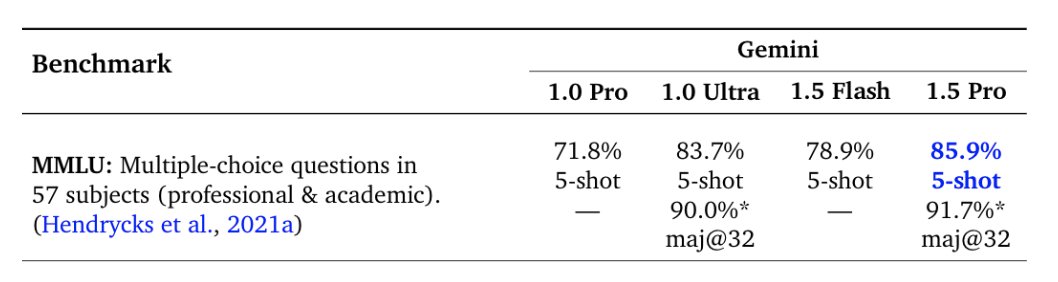

Gemini 1.5 Model Family: Technical Report updates now published. In the report we present the latest models of the Gemini family – Gemini 1.5 Pro and Gemini 1.5 Flash, two highly compute-efficient multimodal models capable of recalling and reasoning over fine-grained information…

If you want to learn about efficient inference in LLMs, stop by our poster #733 on Wed (July 26) at 11am icml.cc/virtual/2023/p… #ICML23

Check out our gradient-free structural pruning approach for large models. No need for retraining or labeled data! In just a few minutes on one GPU, it removes up to 40% of the original FLOPs with minimal loss of accuracy. w/ @hanjundai, Dale Schuurmans. Paper: arxiv.org/abs/2303.04185

Truly an honor. Didn’t sleep much but we did it!

Thank you to everyone on the organizing and steering committee for putting together a great ML for Systems workshop at NeurIPS!

Interested in learning more about ML for Systems research at Google? Stop by the booth today at 10:30 am to hear @martin_maas discuss the latest in ML-driven systems! And if you want to learn more about the area, join the ML for Systems workshop on Saturday, December 3rd.

Join us on Saturday, December 3 at #NeurIPS2022 for the 6th edition of the ML for Systems workshop. We have an exciting program with 5 invited talks and 19 accepted papers, as well as plenty of opportunities to chat with researchers in the area. mlforsystems.org

As a 4th time organizer, I must say we have an amazing program this year at ML for Systems at NeurIPS. Before I forget — Organizers @martin_maas @Azade_na @BenoitSteiner Neel Kant @DZhang50 Steering @annadgoldie @Azaliamirh @jonathanrraiman @miladhash @kswersk + PC. 🙏

🔥 LLMs can do OOD reasoning: We show that we can teach algorithms to LLMs with only three examples, and this generalizes to much longer inputs, as long as the context length allows! We can also teach multiple algorithms, compose them to teach complex ones & use them as tools! 🔥

“LLMs can’t even do addition” 📄🚨 We show that they CAN add! To teach algos to LLMs, the trick is to describe the algo in enough detail so that there is no room for misinterpretation. w/ @Azade_na @hugo_larochelle @AaronCourville @bneyshabur @HanieSedghi arxiv.org/abs/2211.09066

Excited to share that our work has been published in Nature! Our RL agent generates chip layouts in just a few hours, whereas human experts can take months. These superhuman AI-generated layouts were used in Google's latest AI accelerator (TPU-v5)! nature.com/articles/s4158…

Thrilled to announce that our work on RL for chip floorplanning was published in Nature & used in production to design next generation Google TPUs, with potential to save thousands of hours of engineering effort for each next generation ASIC: nature.com/articles/s4158… (1/7)

Autoregressive graph generation is powerful but slow. Our recent work reduces its complexity from O(V^2) to O((E+V) log V), with sublinear mem cost and training parallelism. Paper: arxiv.org/abs/2006.15502 Code: github.com/google-researc… w/ @Azade_na @liyuajia @daibond_alpha Dale

Check out TraDE, our new transformer based density estimator arxiv.org/abs/2004.02441. It beats NAF, BNAF, TAN, FFJORD, NSF, AEM on reference datasets. Works well for #bumblebee, too (left: original, right: estimate). @rasoolfa @pratikac and Jonas Mueller are the real stars here.

Today we are excited to release video recordings of lectures from "Advanced Deep Learning and Reinforcement Learning", a course on deep RL taught at @UCL earlier this year by DeepMind researchers: youtube.com/playlist?list=… Enjoy!

We've seen @BostonDynamics' infamous robot dog open doors, and climb stairs. But now it can navigate complicated construction sites, and do detailed work inspections too 👀: wired.trib.al/0CcVEmR

If you want to do ML research, consider applying for the 2019 Google AI Residency program! You'll have the opportunity to conduct cutting-edge research working in a wide variety of areas, and this year we're expanding to host residents in even more locations.

Applications for the 2019 Google AI Residency program are now open! Visit g.co/airesidency/ap… for more information on how to apply. To learn more about the accomplishments of the recently graduated second class of residents, visit ↓ goo.gl/5QZbsF

The paper behind BERT is now online: arxiv.org/abs/1810.04805 BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. Jacob Devlin, Ming-Wei Chang, Kenton Lee, Kristina Toutanova

If you care about the United States being a place where the best and brightest students from around the world come to study, and then stay to create amazing new companies or do ground-breaking research, this chart should make you very concerned.

America has long been a magnet for talent from around the world.
