We've got a new smolLM x Entropix update! Now you can display your own token statistics in 3D space and inspect how your parameters fit the model's entropy characteristics. It now comes with 2 experimental configs and a data export module that lets you use external tools.

Releasing two trillion tokens in the open. huggingface.co/blog/Pclanglai…

We need to be faster or else philology will be automated before math

So long as we're automating math, let's automate philology as well.

The qwen 2.5 models seem to have lower overall entropy vs llama models which is one reason i gravitate to llamas. qwen 2.5 coders have the lowest average entropy of any model family i've tested. that said ... qwen 2.5 coder 32B + entropix is looking like an absolute beast and…
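For readers unfamiliar with the term, "entropy" here presumably means the Shannon entropy of the model's next-token distribution. A minimal sketch, assuming raw logits over a toy four-token vocabulary (the function names and logit values are made up for illustration and are not taken from Entropix):

```python
import math

def softmax(logits):
    """Convert raw logits to probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_bits(probs):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical logits: a flat distribution vs. a sharply peaked one.
flat = softmax([0.0, 0.0, 0.0, 0.0])
peaked = softmax([10.0, 0.0, 0.0, 0.0])

print(entropy_bits(flat))    # uniform over 4 tokens: the maximum for this vocabulary
print(entropy_bits(peaked))  # near zero: the model is confident in one token
```

A model family with "lower overall entropy" would, on average, look more like the peaked case across the tokens it generates.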

Really excited for public release of this !

Today we are launching the Forge Reasoning API Beta, an advancement in inference time scaling that can be applied to any model or a set of models, for a select group of people in our community. nousresearch.com/introducing-th… The Forge Reasoning engine is capable of dramatically…

User : I want you to act as a software quality assurance tester. LLM : Sure User : I want you to help me arrange a couple of flowers for a bouquet. LLM : I'm dying !! HELP !!

I'm entitled to win in both worlds, it seems

more generally, i am feeling good about a bright future for cryptocurrency!

Planning one more push on pre-training before it reaches the limits

About the limits of LLMs. Ilya gave Reuters an interview. Reasoning is the future. Reuters published an important article today that refers to the discussion started by The Information yesterday. The following aspects are essential. - Pre-training is reaching its limits. "Ilya…

Opus probably was having fun enjoying some quality time with other llm friends and got distracted by that fact

Many such cases

Great references, actually. I'm impressed

Ask ChatGPT “based on what you know about me, draw a picture of what you think my current life looks like”. Paste your responses below. thanks again @mreflow & @danshipper

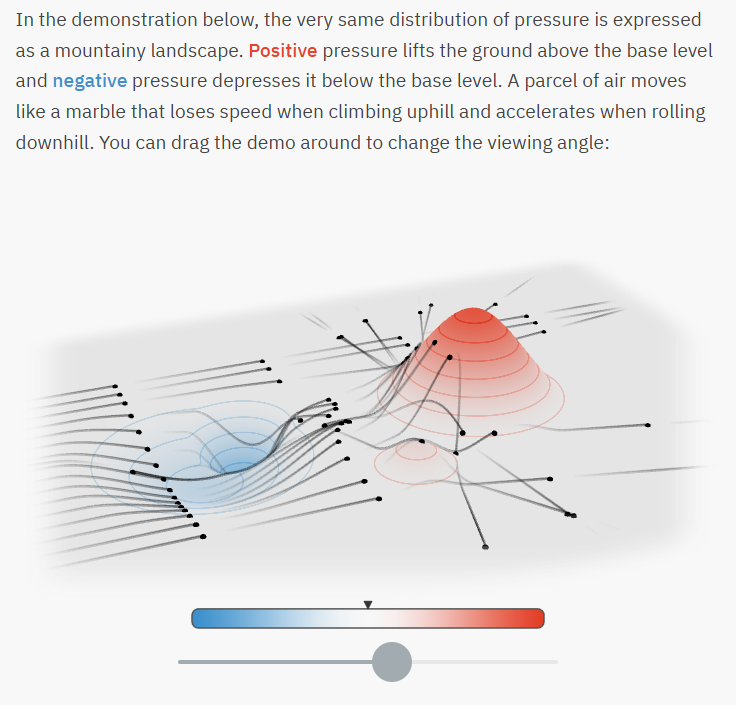

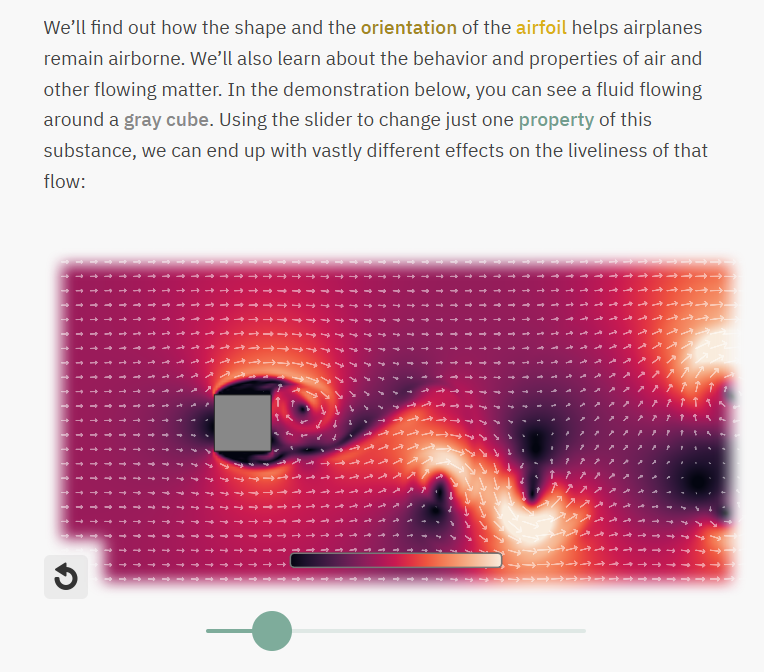

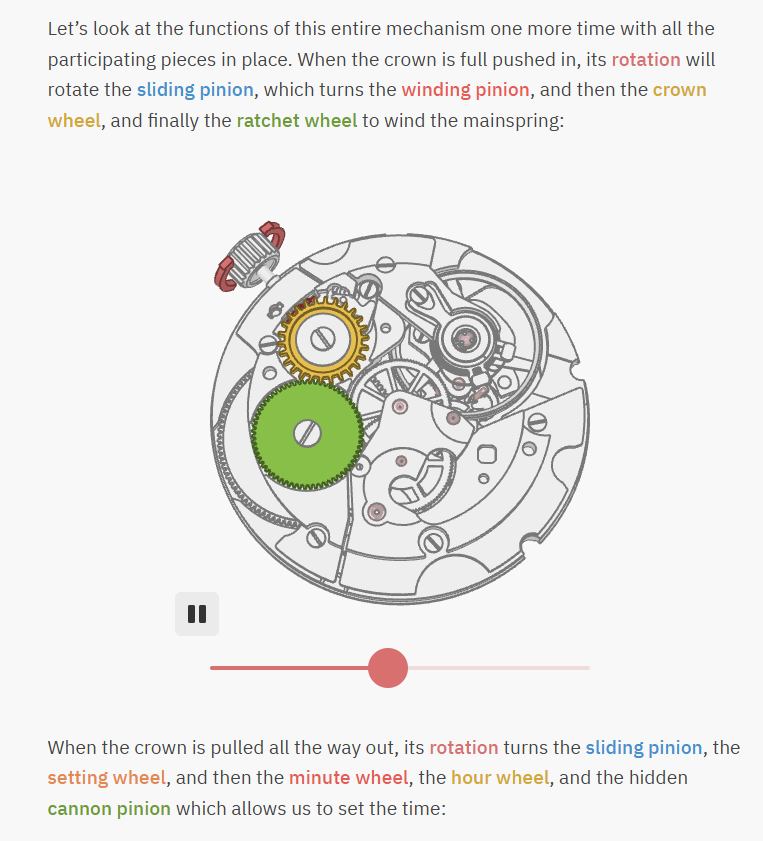

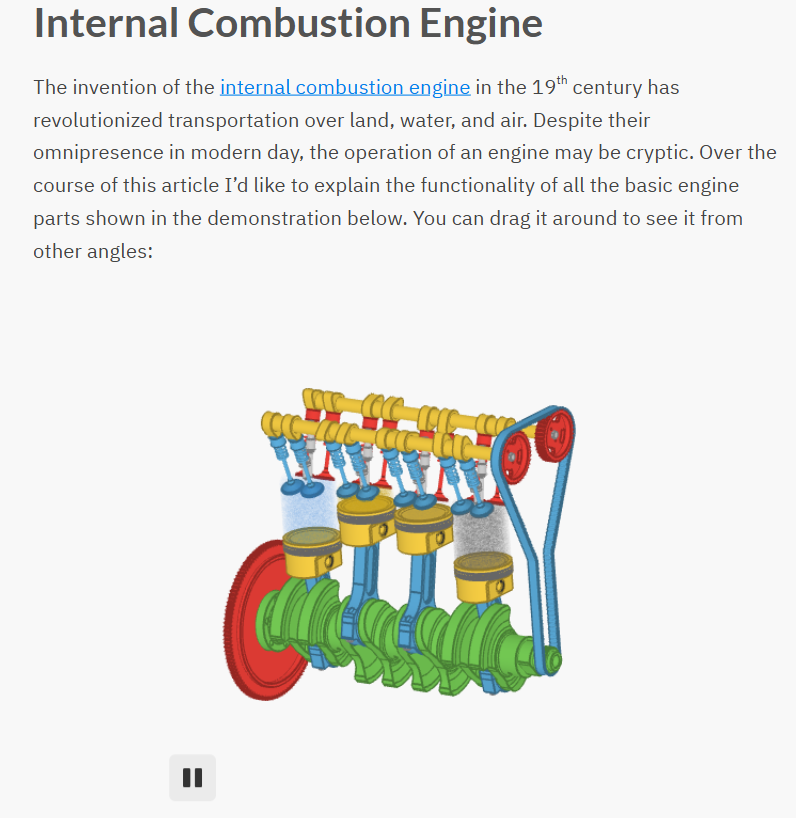

this is peak blogging...nothing can come close to this...every part and process explained in detail...you can move and control things and see the processes from different views...ciechanow.ski/archives/

dear president @realDonaldTrump, because of a few sf elites, we are forced to use chinese models like qwen-2.5 locally; however, we really want to use american-made models such as openai-o1 or claude-sonnet-3.5. can you please issue an EO and open-source these models?

maximum a posteriori (map) estimation is a key concept in bayesian statistics, used to estimate the most probable value of an unknown parameter given observed data and prior knowledge. it combines the principles of maximum likelihood estimation with bayesian inference, providing…
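A small sketch of the idea, assuming a coin-flip (Bernoulli) likelihood with a Beta prior. The example is chosen here purely for illustration and is not from the original post. The posterior is then Beta(alpha + heads, beta + tails), and the MAP estimate is its mode:

```python
def bernoulli_mle(heads, flips):
    """Maximum likelihood estimate of the heads probability."""
    return heads / flips

def beta_bernoulli_map(heads, flips, alpha=2.0, beta=2.0):
    """MAP estimate of the heads probability under a Beta(alpha, beta) prior.

    The posterior is Beta(alpha + heads, beta + flips - heads); its mode
    (a - 1) / (a + b - 2) is only defined when both parameters exceed 1.
    """
    a = alpha + heads
    b = beta + (flips - heads)
    if a <= 1 or b <= 1:
        raise ValueError("posterior mode is not interior; need a > 1 and b > 1")
    return (a - 1) / (a + b - 2)

# Illustrative data: 7 heads in 10 flips.
print(bernoulli_mle(7, 10))                 # data only
print(beta_bernoulli_map(7, 10))            # pulled toward 0.5 by the Beta(2, 2) prior
print(beta_bernoulli_map(7, 10, 1.0, 1.0))  # a flat prior recovers the MLE
```

With a flat Beta(1, 1) prior the MAP estimate coincides with the maximum likelihood estimate, which is the sense in which MAP combines likelihood with prior knowledge.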

Frog made Entropix sentient, we are doomed. She almost got mad at me for asking a simple question with a "step-by-step" instruction.
