edward hicksford

@citizenhicks

posts about ai and occasionally apple.

i never really understood the use case for the ipad mini. then i saw it being used as a digital ‘menu’ in a restaurant. it is the perfect weight and size for that.

this is a very good read. i personally do not look at benchmarks anymore. kinda useless really. stochasm.blog/posts/scaling_…

first blog post! around 2,000 words, link in replies. first time writing something like this.

you know you are in 2024 when the waiter asks: ‘do you want to take a photo first? then we can cut your dish…’ well, we took a photo.

almost.

the elections are over and everyone has started to panic about a wall.

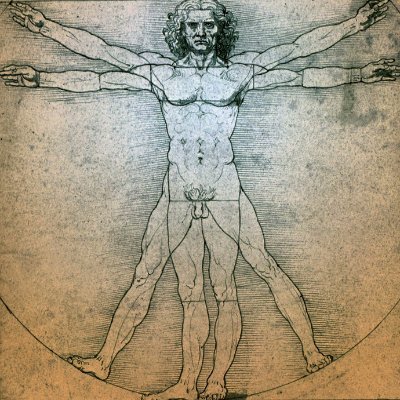

we need more of this guy.

this paper from @Apple machine learning research introduces the concept of ‘super weights’ in llms. pruning even a single parameter, which they call a super weight, can drastically impair an llm's ability to generate text. this is surprising because previous work had only found…
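a toy sketch of what “pruning a single parameter” means, plus one way to pick the candidate. the locating heuristic (take the input channel with the biggest activation spike as the column and the output channel with the biggest spike as the row of an mlp down-projection) is my loose reading of the paper, so treat it as an assumption. the helper names are hypothetical, and this is not the authors’ code.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def argmax_abs(values: List[float]) -> int:
    """Index of the entry with the largest absolute value."""
    return max(range(len(values)), key=lambda i: abs(values[i]))


def matvec(W: Matrix, x: List[float]) -> List[float]:
    """y = W x, with W stored as rows (out_features x in_features)."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def locate_super_weight_candidate(W: Matrix, x: List[float]) -> Tuple[int, int]:
    """Return (row, col) of a candidate super weight.

    Assumed heuristic: col = input channel with the largest activation spike,
    row = output channel with the largest activation spike.
    """
    y = matvec(W, x)
    return argmax_abs(y), argmax_abs(x)


def prune_single_weight(W: Matrix, row: int, col: int) -> Matrix:
    """Return a copy of W with exactly one parameter set to zero."""
    pruned = [list(r) for r in W]
    pruned[row][col] = 0.0
    return pruned
```

in the toy case below, zeroing one entry drops the dominant output from about 100.3 to about 0.3, which is the flavour of the effect the paper reports at llm scale.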

one wonders why alphafold3, released 6 months ago, has only now been open sourced, albeit with somewhat gated weights. for me, it signals that they already have something much more capable in the pipeline.

given that @GoogleDeepMind open-sourced (for academic use) alphafold 3, it’s worth revisiting the model architecture. at the foundation of the architecture are the input embeddings, which consist of an input embedder and relative position encoding. following the input…
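to make “relative position encoding” concrete, here is a minimal sketch of the residue part in the alphafold style. residue offsets within the same chain are clipped to [-r_max, r_max] and bucketed, and pairs from different chains get one extra bucket. r_max = 32 and the bucket layout are assumptions on my part, and the real model adds further features (token and chain indices) that are omitted here. the one-hot vectors would then go through a linear layer into the pair representation.

```python
from typing import List


def relative_residue_bins(
    residue_index: List[int], chain_id: List[int], r_max: int = 32
) -> List[List[int]]:
    """Bucket index for every token pair (i, j).

    Same chain: clip(res_i - res_j + r_max, 0, 2 * r_max).
    Different chain: the extra bucket 2 * r_max + 1 (assumed layout).
    """
    n = len(residue_index)
    bins = []
    for i in range(n):
        row = []
        for j in range(n):
            if chain_id[i] == chain_id[j]:
                offset = residue_index[i] - residue_index[j]
                row.append(min(max(offset + r_max, 0), 2 * r_max))
            else:
                row.append(2 * r_max + 1)
        bins.append(row)
    return bins


def one_hot(index: int, num_classes: int) -> List[int]:
    """One-hot vector of length num_classes."""
    v = [0] * num_classes
    v[index] = 1
    return v


def relative_position_features(
    residue_index: List[int], chain_id: List[int], r_max: int = 32
) -> List[List[List[int]]]:
    """n x n x (2 * r_max + 2) one-hot pair features."""
    num_bins = 2 * r_max + 2
    bins = relative_residue_bins(residue_index, chain_id, r_max)
    return [[one_hot(b, num_bins) for b in row] for row in bins]
```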

can’t wait.

this paper introduces regularised best-of-n (rbon) sampling, a novel approach to mitigating reward hacking in large language model alignment. bon sampling is a decode-time alignment method that selects the best response from n samples based on a reward model. however, bon can…
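the difference between plain bon and a regularised version fits in a few lines. the excerpt doesn’t spell out the paper’s regulariser, so this sketch assumes a kl-style penalty. for a single candidate y that reduces to -log π_ref(y|x), giving score = reward + β · log π_ref(y|x). the reward and log-prob functions are hypothetical stand-ins for a reward model and a reference policy.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def best_of_n(candidates: Sequence[T], reward: Callable[[T], float]) -> T:
    """Plain BoN: pick the candidate with the highest reward."""
    return max(candidates, key=reward)


def regularized_best_of_n(
    candidates: Sequence[T],
    reward: Callable[[T], float],
    ref_logprob: Callable[[T], float],
    beta: float,
) -> T:
    """BoN with an assumed KL-style penalty toward a reference policy.

    score(y) = reward(y) + beta * log pi_ref(y | x); beta = 0 recovers plain BoN.
    """
    return max(candidates, key=lambda y: reward(y) + beta * ref_logprob(y))
```

in the usage below, candidate "b" wins on raw reward but is very unlikely under the reference model, which is the classic reward-hacking pattern. with β = 0.5 the penalty shifts the choice to "c".

```python
rewards = {"a": 1.0, "b": 5.0, "c": 4.0}
logprobs = {"a": -1.0, "b": -20.0, "c": -2.0}
best_of_n(list(rewards), rewards.get)                                   # "b"
regularized_best_of_n(list(rewards), rewards.get, logprobs.get, 0.5)   # "c"
```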

engineering just cannot keep up with r&d…

"Of course that's your contention. You just found out about entropix on X, saw that pretty quadrant plot in the README and got sold on varentropy for confidence estimation. That'll last until you find llmri and see what those distributions really look like. Same patterns, same…
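for anyone who hasn’t met varentropy: over a next-token distribution p, entropy is H = -Σ p log p, and varentropy is the variance of the surprisal, Σ p (-log p - H)². a minimal sketch in nats from raw logits. entropix’s own conventions (log base, thresholds for the quadrant plot) aren’t in the quote, so nothing here claims to match them.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy_and_varentropy(logits: List[float]) -> Tuple[float, float]:
    """Entropy H and varentropy Var[-log p] of softmax(logits), in nats."""
    probs = softmax(logits)
    h = 0.0
    for p in probs:
        if p > 0:
            h -= p * math.log(p)
    v = 0.0
    for p in probs:
        if p > 0:
            v += p * (-math.log(p) - h) ** 2
    return h, v
```

a uniform distribution has maximal entropy but zero varentropy, because every token is equally surprising. a peaked one has low entropy and non-zero varentropy. that two-axis view is what the quadrant plot is built on.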

apple mlx is great but where is my torch.distributions.Dirichlet, sir?!
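until mlx ships one, the standard workaround is the gamma construction: draw X_i ~ Gamma(α_i, 1) independently and normalise, X / ΣX ~ Dirichlet(α). a sketch using python’s standard library (random.gammavariate) rather than mlx, so nothing about mlx’s api is assumed. porting it only needs a gamma sampler.

```python
import random
from typing import List, Optional


def sample_dirichlet(alpha: List[float], rng: Optional[random.Random] = None) -> List[float]:
    """One Dirichlet(alpha) sample via normalised Gamma(alpha_i, 1) draws."""
    if not alpha or any(a <= 0 for a in alpha):
        raise ValueError("alpha must be a non-empty list of positive values")
    rng = rng or random.Random()
    # Independent Gamma(shape=alpha_i, scale=1) draws.
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    # Normalising onto the simplex gives a Dirichlet-distributed vector.
    return [d / total for d in draws]
```

the component means should approach α_i / Σα, e.g. [0.2, 0.3, 0.5] for α = [2, 3, 5].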

this looks promising.

Mathematics offers a unique window into AI's reasoning capabilities. Discover why we've launched FrontierMath—a benchmark of hundreds of unpublished, expert-level math problems—to understand the frontier of artificial intelligence.

the paper below investigates the differences between low-rank adaptation (lora) and full fine-tuning methods for adapting pre-trained language models. while lora has been shown to match full fine-tuning performance on many tasks with fewer trainable parameters, the paper…
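a quick reminder of what lora actually trains. instead of updating a d × k weight W directly, it learns B (d × r) and A (r × k) and uses W + (α / r) · B A, with B zero-initialised so training starts from the pretrained weights. the sketch below uses plain python lists. the shapes and the 4096 / r = 8 numbers are illustrative, not taken from the paper.

```python
from typing import Dict, List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive matrix product of a (m x n) and b (n x p)."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def merged_weight(W: Matrix, A: Matrix, B: Matrix, alpha: float, r: int) -> Matrix:
    """W + (alpha / r) * B @ A, with B: d x r, A: r x k, W: d x k."""
    scale = alpha / r
    BA = matmul(B, A)
    return [[W[i][j] + scale * BA[i][j] for j in range(len(W[0]))] for i in range(len(W))]


def trainable_params(d: int, k: int, r: int) -> Dict[str, int]:
    """Trainable parameter count for one d x k matrix: full fine-tuning vs LoRA."""
    return {"full": d * k, "lora": r * (d + k)}
```

for a single 4096 × 4096 projection with r = 8, that is 65,536 trainable parameters instead of 16,777,216, about 0.39%. that gap is why the “does it really match full fine-tuning?” question is worth asking.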

when your girlfriend asks about your new m4 max macbook with ‘how is the new pc?’, i can’t.

entropy is everywhere.

you lot just click on anything.
