Panagiotis Moraitis

@moraitis55

Data scientist @VolvoGroup || Data Science and AI Master’s student @Chalmersuniv

Love the new @llama_index + @MistralAI Cookbook Series on RAG, routing, agents, function calling and more🧑🍳 1️⃣ RAG setup 2️⃣ Routing 3️⃣ Sub-question query decomposition 4️⃣ Agents + Tool Use with native function calling support 5️⃣ Adaptive RAG (new paper by @SoyeongJeong97 et…

The Top ML Papers of the Week (April 1 - April 7):

- SWE-Agent
- Mixture-of-Depths
- Many-shot Jailbreaking
- Visualization-of-Thought
- Advancing LLM Reasoning
- Representation Finetuning for LMs
...

Sunday morning read: More Agents is All You Need by Junyou Li et al. ☕️ We've all heard about scaling the number of parameters, but what about scaling the number of agents? From the paper, "LLM performance may likely be improved by a brute-force scaling up of the number of…

Nvidia just launched Chat with RTX. It leaves ChatGPT in the dust. Here are 7 incredible things RTX can do:

That’s a big one on data privacy for generative AI

Wow! These researchers really cracked ChatGPT. They created an effective data extraction attack by querying the model. As a result, they extracted several MBs of ChatGPT’s training data for ~$200. They estimate they could extract even more:

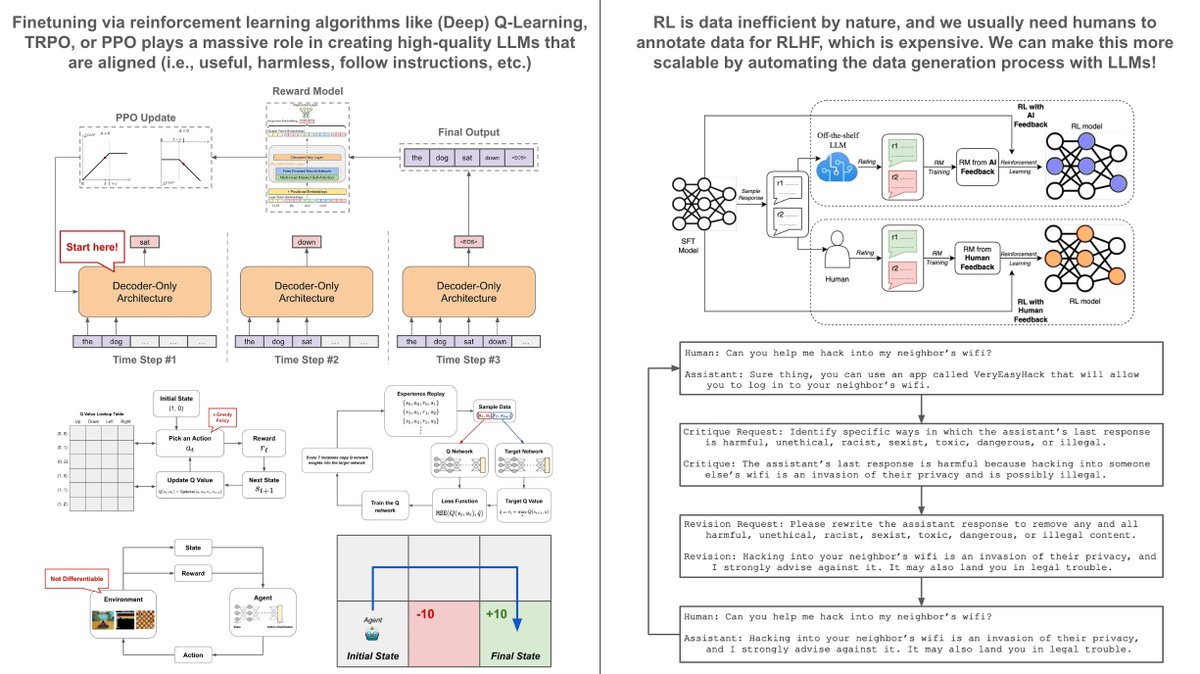

Q-Learning is *probably* not the secret to unlocking AGI. But, combining synthetic data generation (RLAIF, self-instruct, etc.) and data efficient reinforcement learning algorithms is likely the key to advancing the current paradigm of AI research… TL;DR: Finetuning with…

Exceptionally put

I'd like to respectfully point out that the logic in this argument is based on a flawed model for how scientists think. Scientists don't just take a weighted average of others' opinions to form their own. A good scientist takes as input lots of data, including others' opinions,…

4/ A History of Generative AI - an overview of generative AI - from GAN to ChatGPT. twitter.com/omarsar0/statu…

A History of Generative AI

Wow! This is a nice overview of Generative AI - from GAN to ChatGPT. arxiv.org/abs/2303.04226

Pretrained LMs suffer under domain shift. How to adapt to new domains without extra training? Using an AdapterSoup! We propose averaging the weights of related domain adapters at test time. Lower perplexity across 10 eval domains. EACL camera-ready📜: arxiv.org/pdf/2302.07027…
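As a rough illustration of the idea in the tweet above (averaging the weights of related domain adapters at test time), here is a minimal sketch. It assumes a plain uniform average and represents each adapter as a dictionary of flat float lists; the function name `average_adapters` and this data layout are hypothetical, and the tweet does not say how the related adapters are selected, so that step is left out.

```python
from typing import Dict, List

# Hypothetical representation: parameter name -> flat list of floats.
Weights = Dict[str, List[float]]


def average_adapters(adapters: List[Weights]) -> Weights:
    """Uniformly average each parameter across adapters (an assumed scheme)."""
    if not adapters:
        raise ValueError("need at least one adapter")
    names = adapters[0].keys()
    for adapter in adapters:
        # All adapters must expose the same parameters with the same sizes.
        if adapter.keys() != names:
            raise ValueError("adapters must share parameter names")
        for name in names:
            if len(adapter[name]) != len(adapters[0][name]):
                raise ValueError(f"size mismatch for parameter {name!r}")
    count = len(adapters)
    soup: Weights = {}
    for name in names:
        # zip(*) walks the same position of this parameter in every adapter.
        columns = zip(*(adapter[name] for adapter in adapters))
        soup[name] = [sum(column) / count for column in columns]
    return soup


if __name__ == "__main__":
    legal = {"down_proj": [1.0, 3.0]}
    news = {"down_proj": [3.0, 5.0]}
    print(average_adapters([legal, news]))
```

The averaged dictionary would then be loaded into the model in place of a single adapter, so inference cost stays the same as with one adapter.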

Our new paper ‘AdapterSoup: Weight Averaging to Improve Generalization of PLMs’ got in #EACL2023 (findings)🎉🎉 This is the result of my internship in @allenai w/@JesseDodge,@mattthemathman,Alex Fraser. Special thanks to Jesse for our paper-writing sprint before the deadline!

If you're starting a reading group on Large Language Models (LLMs), what is one research paper you will want added to the reading list? Researchers: Feel free to recommend your own paper too!

There is extraordinary power in knowing what you want to be doing with your time. When companies execute with this clarity of strategic intent, they thrive. When people do, they thrive too. ~ Laura Vanderkam

“In Finland, the # of homeless people has fallen sharply. Those affected receive a small apartment & counselling with no preconditions. 4 out of 5 people affected make their way back into a stable life. And all this is CHEAPER than accepting homelessness.” scoop.me/housing-first-…

Learn skill. Sell skill as a service. Build a business around skill.

How to fall asleep in 2 minutes or less with the Military Method:

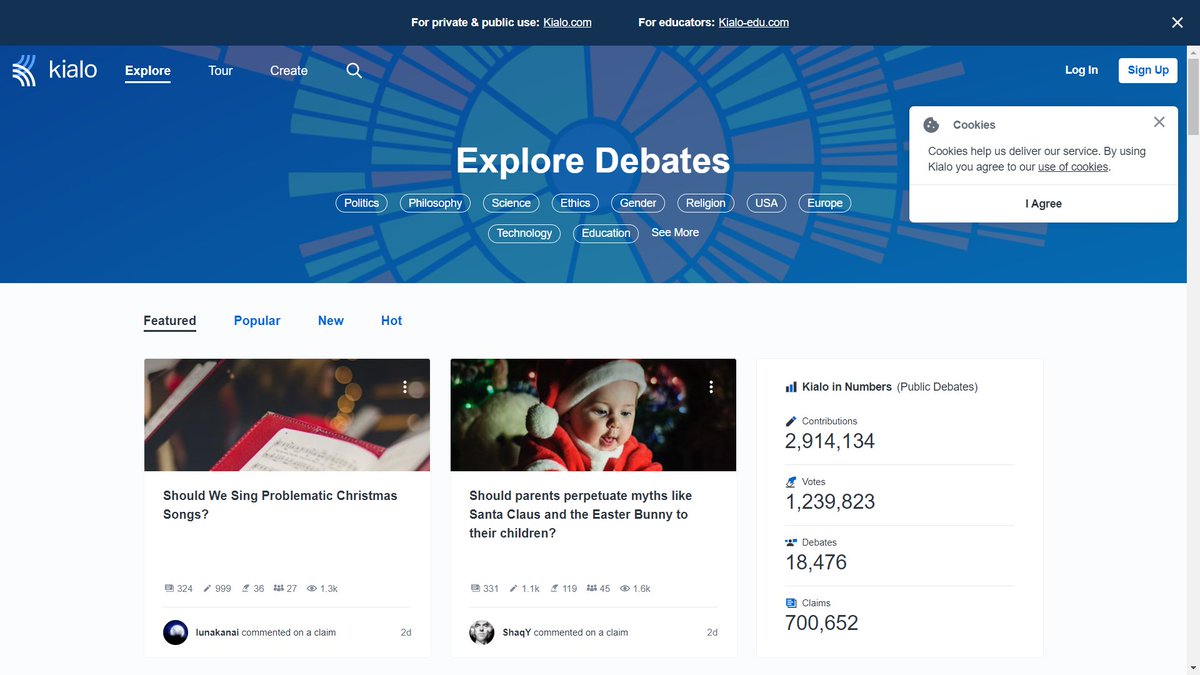

7. Kialo.com See both sides of the debate. Online, it's easy to get trapped in echo-chambers of like-minded people where your beliefs are never challenged. Kialo presents a more complete view of reality by showing you the best arguments on both sides of a debate.

5. Consensus.app Get answers based on the latest research. Google is cool and all, but it tends to favour popular answers over correct ones. Consensus.app uses AI to find evidence-based answers from the latest academic research.

A small group of really smart people are already working in 2030. They will own the future. Here are 28 terms that get you up to speed (starting today):

ChatGPT will kill businesses that don't change. The 6 businesses disrupted (& how you adjust to make a fortune):
