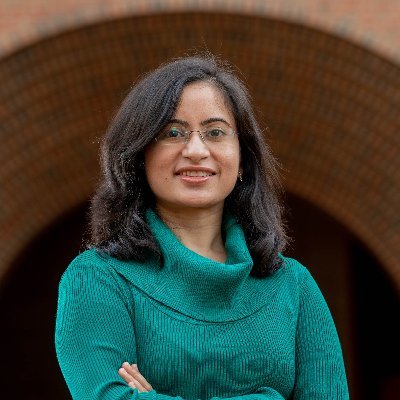

Manik Bhandari

@manikb20

Applied Scientist II at Amazon | NLP at Carnegie Mellon University

Are extractive summaries always faithful? Turns out that is not the case! We introduce a typology, human error annotations & new evaluation metric for 5 broad faithfulness problems in extractive summarization! w/ @meetdavidwan @mohitban47 Paper: arxiv.org/abs/2209.03549 🧵👇

If I ever shared how abusive & toxic my experience of the business technologies group at Tepper was, the group would cease to exist. It still rattles me every day. I couldn’t even share my joy when I escaped from that hellhole. Too traumatic to acknowledge I ever worked there.

I've seen quite a few #NAACL2022 papers that say "our code is available at [link]" but the code is not available at "[link]". Everyone, let's release our research code! It's better for everyone, and hey, messy code is better than no code.

Twitterverse: what are some engaging and accessible books on ethics that you’d recommend?

Hate these #NLProc reviews saying the results are “not surprising”. I wrote the whole paper to convince you why the model is novel and should work! I’ve done a good job if the results don’t look surprising 😔

This is a brilliant idea. Wish there was something similar for other virtual conferences as well.

So if virtual conferences like #acl2020nlp or #emnlp2020 seem a bit too large to actually find buddies in a more informal way - consider signing up for the #coling2020 buddy scheme! coling2020.org/2020/11/13/bud…

Check out our recent #EMNLP 2020 paper on evaluating summarization evaluation metrics! Paper: arxiv.org/pdf/2010.07100…. Code: github.com/neulab/REALSum…

Excited to share our #EMNLP2020 work: REALSum: Re-evaluating Evaluation in Text Summarization: arxiv.org/pdf/2010.07100… (super awesome coauthors: @manikb20 @Pranav @ashatabak786 and @gneubig ) Are existing automated metrics reliable??? All relevant resources have been released (1/n)!

A compelling argument for Test Driven Development of AI by @tdietterich medium.com/@tdietterich/w…

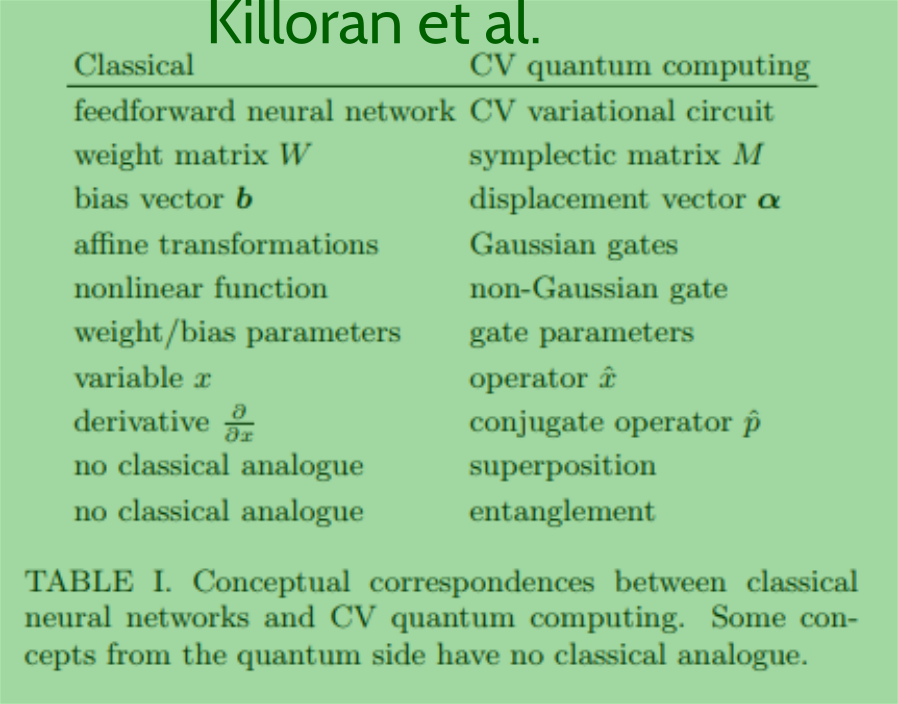

So in @sirajraval's livestream yesterday he mentioned his 'recent neural qubit paper'. I've found that huge chunks of it are plagiarised from a paper by Nathan Killoran, Seth Lloyd, and co-authors. E.g., in the attached images, red is Siraj, green is original

Horrifying

So @Medium has published documents describing the scientific fraud and abusive lab culture that allegedly led Huixiang Chen to take his own life. Trigger Warning: medium.com/@huixiangvoice… (1/n)

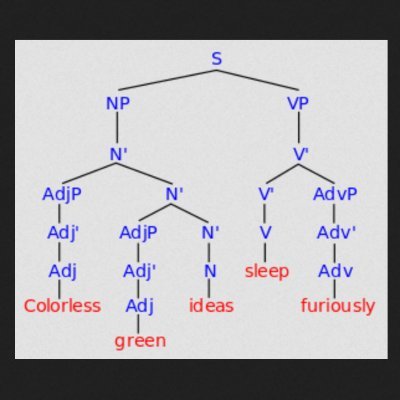

We had three long papers accepted at ACL 2019: 1. Incorporating Syntactic and Semantic Information in Word Embeddings using Graph Convolutional Networks 2. Zero-shot Word Sense Disambiguation using Sense Definition Embeddings (1/2) #nlproc #ACL2019

Very interesting and very scary. Synthesizing Obama. bbc.com/news/av/techno… grail.cs.washington.edu/projects/Audio…

How words change meaning over time: “Baseline” (in NLP)

1998: random choice
2005: majority class
2012: bag of words
2019: LSTM with attention & pre-trained embeddings

Few things feel as good as (in no particular order):

1. A piece of your code doing what you want it to.
2. An empty inbox and to-do list.
3. High-bandwidth, successful communication of nuanced thoughts with a fellow human being.

Should we move on from recommendation letters? What do we do about great students who had mean, abusive, or just plain crappy supervisors?? Can't we find a way to not perpetuate the abuse and get rid of the recommendation letter request somehow? Anyone have alternatives?

This hits too close to home

4/ there are tons of undocumented tricks and know-how to get the network to train that are buried in code, or in someone’s experience, or in someone’s “touch”. The same network can work well for someone who knows, and terribly for a newcomer. And there is no place to learn.

Our #naacl2019 meta reviewer was sad to reject our paper and asked us to make the required changes and resubmit. It feels good, despite our paper being rejected. #naaclhlt2019