Similar User

@justbhavyaugh

@Geeks_CAT

@LeoNL09076176

@gnuites

@gowtham_ramesh1

@dchawla1307

@SolCatv2

@danielEPR94

@kix2mix2

@EduardoMonino

@Sreekesh11

@hrong2450

@ashtava

@jordibagot

Hey everyone, I'm sharing my repo for ViT, where you can find a proper path to learn vision transformers and their uses in video processing. I have also implemented ViT from scratch, so if you want to know how it works, I've got you covered. More coming soon! github.com/0xD4rky/Vision…

🚀 We've got the AGENDA for the Festa de l'Open Source on 19/10/2024 at Casa de Cultura (Girona), with speakers, talks and workshops that you honestly can't miss 👀🔥 Book your ticket here now 👉 festa-opensource.geeks.cat #OpenSource #Girona #FestaOS4 #Tech

Heyy, we're holding the Girona open source festival again. If anyone is up for giving a talk or workshop, submit a proposal through this form! See you there 😁

👋 Do you have a proposal for a talk or workshop related to Free Software? Share it and take part in the next Festa de l’Open Source on 19/10/2024 in Girona! Go for it 💪 fill in the form ➡️ forms.gle/FNdvngy7rHahKx… #OpenSourceGirona #FestaOS24

Phi goes MoE! @Microsoft just released Phi-3.5-MoE, a 42B-parameter MoE built on the datasets used for Phi-3. Phi-3.5-MoE outperforms bigger models in reasoning capability and trails only GPT-4o-mini. 👀 TL;DR 🧮 42B parameters with 6.6B activated during generation 👨🏫 16…

Link to blog post: hamel.dev/blog/posts/cou… In the post, we tell you how to get the most out of the course, what to expect, and how to navigate the materials. We are still adding a few lessons, but 95% of them are on the site. This is a unique course with 30+ legendary…

🚨 Introducing "ColPali: Efficient Document Retrieval with Vision Language Models"! We use Vision LLMs + late interaction to improve document retrieval (RAG, search engines, etc.), using only the image representation of document pages! arxiv.org/abs/2407.01449 🧵(1/N)
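"Late interaction" refers to ColBERT-style scoring: each query-token embedding is compared against every embedding of a page (for ColPali, image-patch embeddings), only the best match per query token is kept, and those maxima are summed. The sketch below assumes the embeddings are already computed; `maxsim_score` and `rank_pages` are hypothetical helpers for illustration, not ColPali's actual API.

```python
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    # Plain dot product; with normalized embeddings this is cosine similarity.
    return sum(x * y for x, y in zip(a, b))


def maxsim_score(query: List[Vector], page: List[Vector]) -> float:
    # Late interaction: for each query token keep its best-matching page
    # embedding, then sum those per-token maxima into one page score.
    return sum(max(dot(q, p) for p in page) for q in query)


def rank_pages(query: List[Vector], pages: List[List[Vector]]) -> List[int]:
    # Return page indices ordered from highest to lowest late-interaction score.
    scores = [maxsim_score(query, page) for page in pages]
    return sorted(range(len(pages)), key=lambda i: scores[i], reverse=True)


query = [[1.0, 0.0], [0.0, 1.0]]
page_a = [[1.0, 0.0], [0.0, 1.0]]  # covers both query tokens
page_b = [[1.0, 0.0], [1.0, 0.0]]  # covers only the first query token
print(rank_pages(query, [page_b, page_a]))
```

Because the page embeddings are independent of the query, they can be precomputed and indexed offline; only the cheap max-and-sum runs at query time.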

Whoa, I just realized that raising a kid is basically 18 years of prompt engineering 🤯

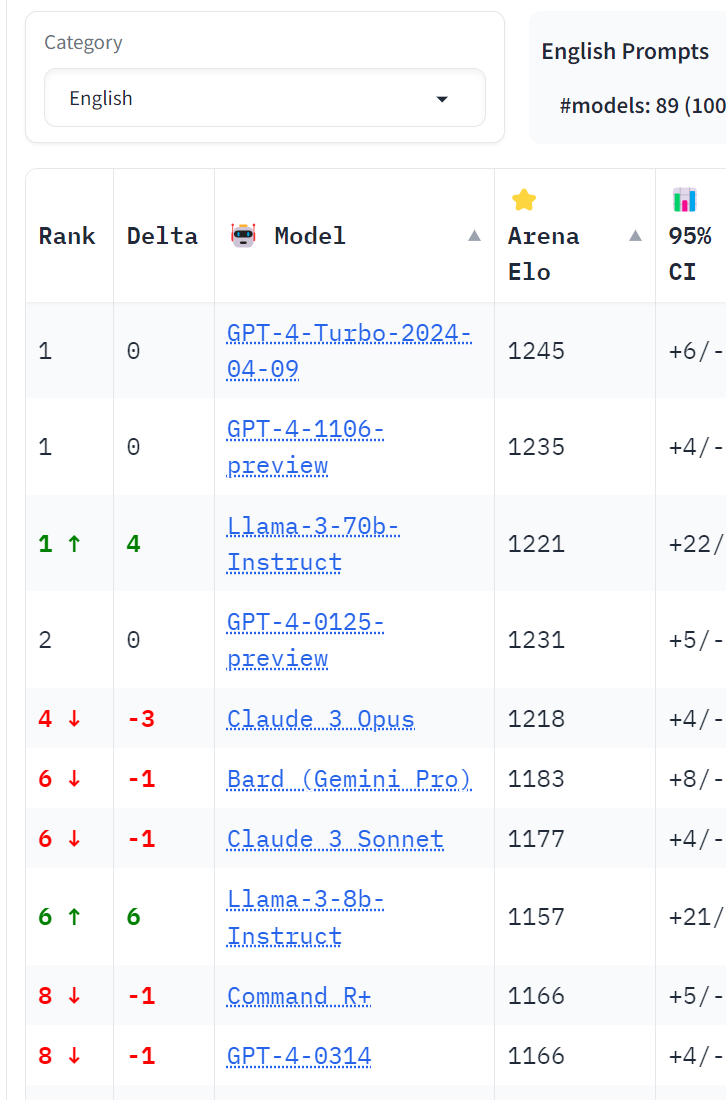

It's genuinely hard to believe a 70B model is up there with the 1.8T GPT-4. I guess training data really is everything.

Great quote from Karpathy: "Most great organizations require leader(s) with a disproportionate amount of power. When this is absent, you end up with countless hierarchies of ineffective committees, e.g. many Google products lack a Directly Responsible Individual with actual power."

(1/n) With our wonderful student researcher @OscarLi101, we’re thrilled to release our OmniPred paper, showing a language model (only 200M params + trained from scratch) can be used as a universal regressor to predict experimental outcomes! Link: arxiv.org/abs/2402.14547 We…

Sora is Not Released. All this buzz to try to get people excited about something they can't use yet. While I am interested to try out Sora, I'm not gonna fall for the obvious marketing play and get excited about something that's still not usable. And I also know it will be…

540x faster than GPT-4. 100x longer sequences than GPT-4. And better performance on long-sequence tasks than GPT-4. Multi-Modal Mamba is going to change the LLM game on a scale you couldn't possibly imagine, in a flash of lightning. [Get Access Now] github.com/kyegomez/Multi…

Huuugee!!!!

🧵 (1/n) 👉 Introducing QuIP#, a new SOTA LLM quantization method that uses incoherence processing from QuIP & lattices to achieve 2 bit LLMs with near-fp16 performance! Now you can run LLaMA 2 70B on a 24G GPU w/out offloading! 💻 cornell-relaxml.github.io/quip-sharp/
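A back-of-the-envelope check of why 2-bit weights let a 70B model fit on a 24 GB card: weight memory is roughly parameters × bits per weight ÷ 8 bytes. This sketch assumes a round 70e9 parameters and counts weights only, ignoring the KV cache, activations and any codebook or scale overhead the quantization method adds; `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    # Weights only (decimal GB): ignores KV cache, activations and
    # quantization metadata such as codebooks or per-group scales.
    return num_params * bits_per_weight / 8 / 1e9


fp16_gb = weight_memory_gb(70e9, 16)   # 140 GB: needs several GPUs or offloading
two_bit_gb = weight_memory_gb(70e9, 2)  # 17.5 GB: leaves headroom on a 24 GB GPU
print(f"fp16: {fp16_gb:.1f} GB, 2-bit: {two_bit_gb:.1f} GB")
```

The remaining ~6.5 GB is what has to hold the KV cache and activations, which is why "near-fp16 quality at 2 bits" is the hard part of the claim rather than the memory arithmetic.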

This chart shows a very common pattern for how to improve performance using different prompt engineering methods. When I first saw it, I wondered how generalizable this stuff is. It probably is, as shown in this blog post Microsoft published. You can keep track of…

Sorry I know it's a bit confusing: to download phi-2 go to Azure AI Studio, find the phi-2 page and click on the "artifacts" tab. See picture.

No, they fully released it, but they hid it very well for some reason. Go to the artifacts tab.

There's too much happening right now, so here's just a bunch of links:

- GPT-4 + Medprompt -> SOTA MMLU microsoft.com/en-us/research…
- Mixtral 8x7B @ MLX, nice and clean github.com/ml-explore/mlx…
- Beyond Human Data: Scaling Self-Training for Problem-Solving with Language Models…

United States Trends

- 1. Ravens 76.5K posts

- 2. Steelers 103K posts

- 3. Bears 110K posts

- 4. Packers 69.6K posts

- 5. Lamar 30K posts

- 6. Jets 53.7K posts

- 7. #HereWeGo 18.5K posts

- 8. #GoPackGo 9,093 posts

- 9. Paige 10.6K posts

- 10. Lions 88.9K posts

- 11. Caleb 31.4K posts

- 12. Falcons 10.4K posts

- 13. Worthy 46.7K posts

- 14. Justin Tucker 19.4K posts

- 15. Bo Nix 3,462 posts

- 16. Bills 97K posts

- 17. Taysom Hill 9,335 posts

- 18. WWIII 35.9K posts

- 19. Browns 29.7K posts

- 20. Russ 14.4K posts

Who to follow

- gochujang (@justbhavyaugh)

- GeeksCAT (@Geeks_CAT)

- Leonardo A. Navarro-Labastida (@LeoNL09076176)

- GNUites (@gnuites)

- Gowtham Ramesh (@gowtham_ramesh1)

- Deepak chawla (@dchawla1307)

- Sol Cataldo (@SolCatv2)

- Daniel E.P.R. (@danielEPR94)

- cristina (@kix2mix2)

- Eduardo Moñino (@EduardoMonino)

- Sreekesh V (@Sreekesh11)

- Hao R (@hrong2450)

- Ashutosh Srivastava (@ashtava)

- Jordi Bagot 🐍 (@jordibagot)
