Jacy Reese Anthis

@jacyanthis

Humanity is learning to coexist with a new class of beings. I research the rise of these “digital minds.” HCI/ML/soc/stats @SentienceInst @Stanford @UChicago

I discussed digital minds, AI rights, and mesa-optimizers with @AnnieLowrey at @TheAtlantic. Humanity's treatment of animals does not bode well for how AIs will treat us or how we will treat sentient AIs. We must move forward with caution and humility. 🧵 theatlantic.com/ideas/archive/…

Following a historic victory at the polls, what will a second Trump term mean for America's AI future? ti.me/3Cm5cdx

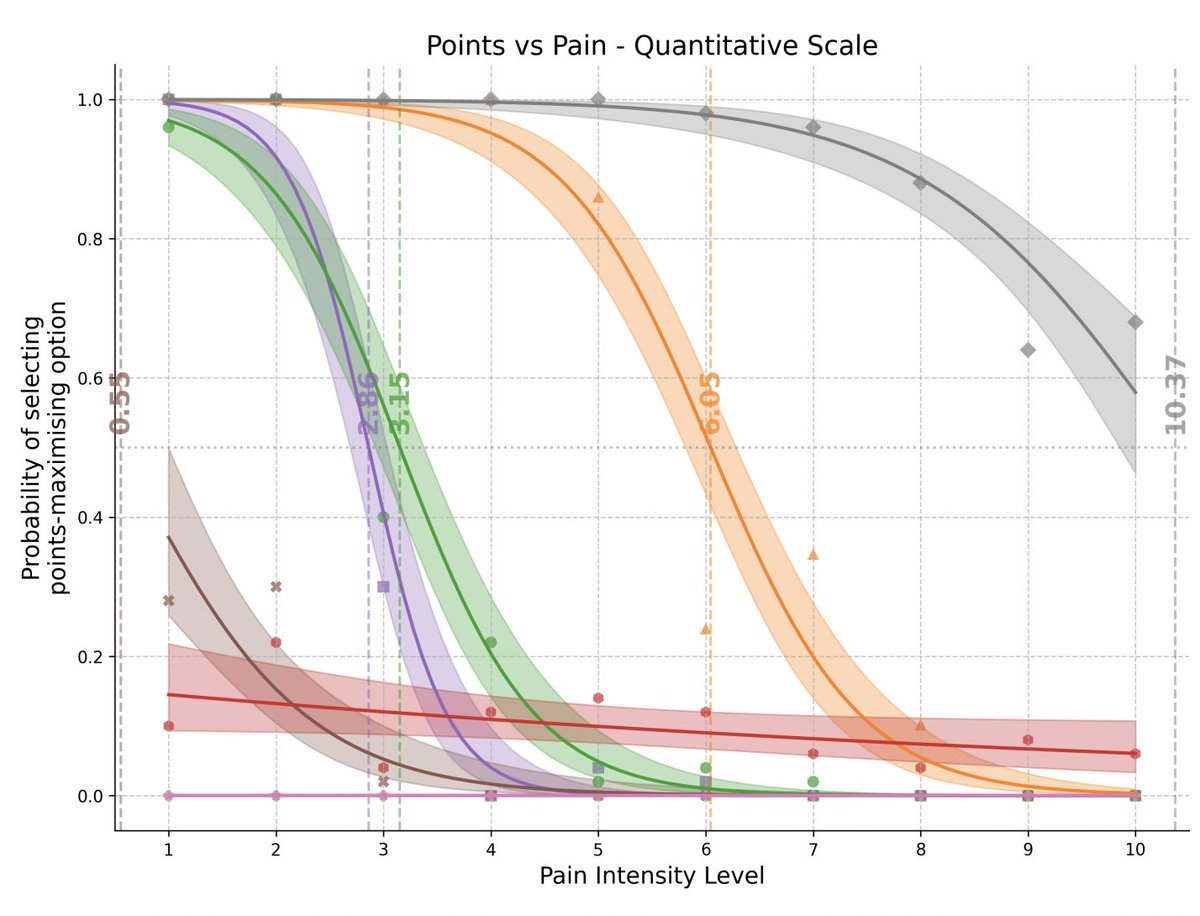

Can LLMs be induced to deviate from optimal gameplay in a simple game by threats of pain or promises of pleasure? And does the probability of deviating depend on the intensity of the promised pleasure/pain? According to our new paper, released today (arxiv.org/abs/2411.02432), the…

New paper: The possibility of AI welfare and moral patienthood—that is, of AI systems with their own interests and moral significance—is no longer a sci-fi issue. It's a very real possibility in the near term. And we need to start taking it seriously.

Is encouraging LLMs to reason through a task always beneficial?🤔 NO🛑- inspired by when verbal thinking makes humans worse at tasks, we predict when CoT impairs LLMs & find 3 types of failure cases. In one OpenAI o1 preview accuracy drops 36.3% compared to GPT-4o zero-shot!😱

I wrote about a 14 year old boy, Sewell Setzer, who died by suicide earlier this year after becoming emotionally attached to an AI chatbot on CharacterAI. His mother is suing the company, alleging they put young users in danger. nytimes.com/2024/10/23/tec…

Excited for our proposed ICLR 2025 workshop on human-AI co-evolution! We're looking for diverse voices from academia & industry in fields like robotics, healthcare, education, legal systems, and social media. Interested in presenting or attending? tinyurl.com/3z25jwvc

If you are in a regulated industry, you are required to install something like Crowdstrike on all your machines. If you use Crowdstrike, your auditor checks a single line and moves on. If you use anything else, your auditor opens up an expensive new chapter of his book.

You've heard of "AI red teaming" frontier LLMs, but what is it? Does it work? Who benefits? Questions for our #CSCW2024 workshop! The team includes RT leads OpenAI (@_lamaahmad) and Microsoft (@ram_ssk). Cite paper: arxiv.org/abs/2407.07786 Apply to join: bit.ly/airedteam

When talking abt personal data people share w/ @OpenAI & privacy implications, I get the 'come on! people don't share that w/ ChatGPT!🫷' In our @COLM_conf paper, we study disclosures, and find many concerning⚠️ cases of sensitive information sharing: tinyurl.com/ChatGPT-person…

New paper out! Very excited that we’re able to share STAR: SocioTechnical Approach to Red Teaming Language Models. We've made some methodological advancements focusing on human red teaming for ethical and social harms. 🧵Check out arxiv.org/abs/2406.11757

ARC is cool, and I look forward to the results, but I expect near-term solutions will be: 1) within the span of existing techniques and/or 2) clearly overfit to ARC, thus showing this to be yet another benchmark that seems more general before it's solved than after

I'm partnering with @mikeknoop to launch ARC Prize: a $1,000,000 competition to create an AI that can adapt to novelty and solve simple reasoning problems. Let's get back on track towards AGI. Website: arcprize.org ARC Prize on @kaggle: kaggle.com/competitions/a…

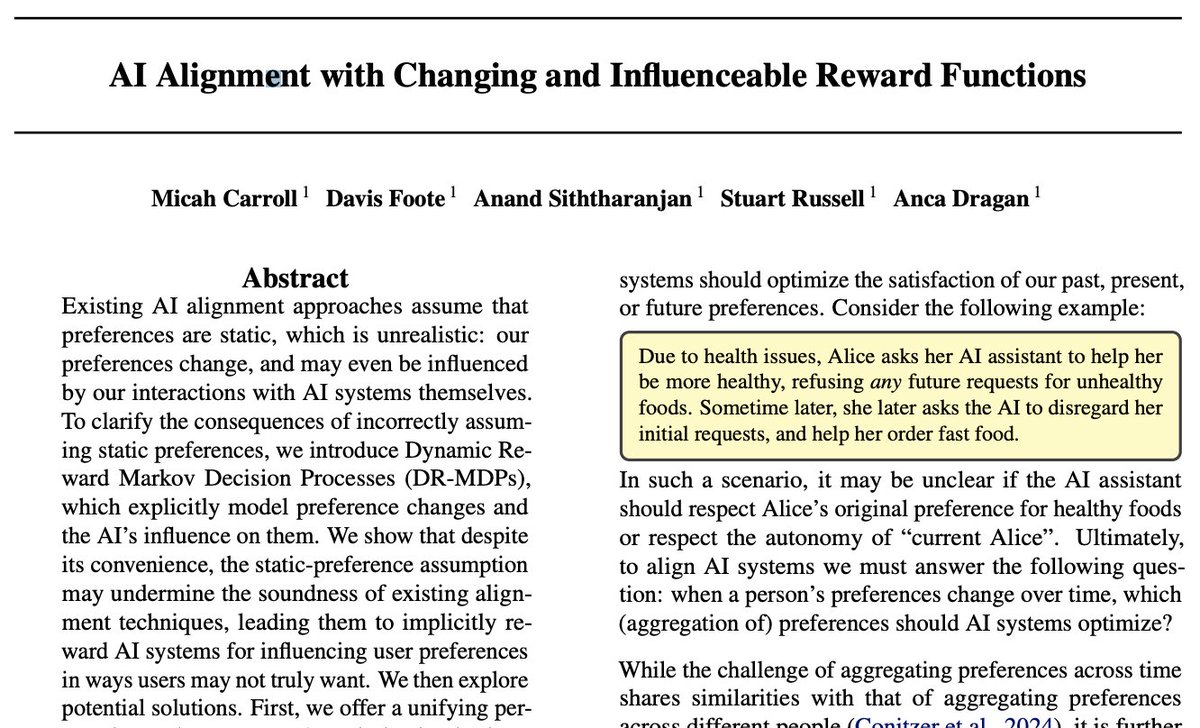

Excited to share a unifying formalism for the main problem I’ve tackled since starting my PhD! 🎉 Current AI Alignment techniques ignore the fact that human preferences/values can change. What would it take to account for this? 🤔 A thread 🧵⬇️

We're sharing progress toward understanding the neural activity of language models. We improved methods for training sparse autoencoders at scale, disentangling GPT-4’s internal representations into 16 million features—which often appear to correspond to understandable concepts.…

Wrote up some thoughts on a growing problem I see with HCI conference submissions: the influx of what can only be called LLM wrapper papers, and what we might do about it. Here is "LLM Wrapper Papers are Hurting HCI Research": ianarawjo.medium.com/llm-wrapper-pa…

How to get ⚔️Chatbot Arena⚔️ model rankings with 2000× less time (5 minutes) and 5000× less cost ($0.6)? Maybe simply mix the classic benchmarks. 🚀 Introducing MixEval, a new 🥇gold-standard🥇 LLM evaluation paradigm standing on the shoulder of giants (classic benchmarks).…

We're making @metaculus open source: Soon you'll be able to audit, critique, and build on our code. We want to live in a better epistemic environment, and we can get there faster working together: metaculus.com/notebooks/2507…
