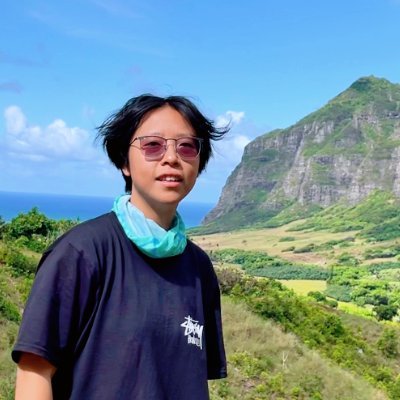

Greg Durrett

@gregd_nlp

CS professor at UT Austin. Large language models and NLP. he/him

Similar users

@YejinChoinka

@jacobandreas

@uwnlp

@EdinburghNLP

@HannaHajishirzi

@LukeZettlemoyer

@mohitban47

@sewon__min

@MohitIyyer

@MaartenSap

@kaiwei_chang

@riedelcastro

@xiangrenNLP

@cocoweixu

@ssgrn

📣 Today we launched an overhauled NLP course to 600 students in the online MS programs at UT Austin. 98 YouTube videos 🎥 + readings 📖 open to all! cs.utexas.edu/~gdurrett/cour… w/ 5 hours of new 🎥 on LLMs, RLHF, chain-of-thought, etc! Meme trailer 🎬 youtu.be/DcB6ZPReeuU 🧵

Very happy to hear that our paper "Which questions should I answer? Salience Prediction of Inquisitive Questions" received an outstanding paper award at EMNLP 2024. Congratulations to Yating, Ritika and the whole team. #EMNLP2024

Two awards for UT Austin papers! "Salience prediction of inquisitive questions" by @YatingWu96, @ritikarmangla, @AlexGDimakis, me, and @jessyjli; "Learning AANNs and insights about grammatical generalization in pre-training" by @kanishkamisra & @kmahowald. Congrats to all the awardees!

New short course: Safe and Reliable AI via Guardrails! Learn to create production-ready, reliable LLM applications with guardrails in this new course, built in collaboration with @guardrails_ai and taught by its CEO and co-founder, @ShreyaR I see many companies worry about the…

Excited to share ✨ Contextualized Evaluations ✨! Benchmarks like Chatbot Arena contain underspecified queries, which can lead to arbitrary eval judgments. What happens if we provide evaluators with context (e.g., who's the user, what's their intent) when judging LM outputs? 🧵↓

Excited for #EMNLP2024! Check out work from my students and collaborators that will be presented: jessyli.com/emnlp2024

My lab at Duke has multiple Ph.D. openings! Our mission is to augment human decision-making by advancing the reasoning, comprehension, and autonomy of modern AI systems. I am attending #emnlp2024, happy to chat about PhD applications, LLM agents, evaluation etc etc!

On my way to #EMNLP2024! Excited to present: 1/ Summary of a Haystack (w/ @alexfabbri4 & @jasonwu0731) 2/ Mini-Check (led by @LiyanTang4 & w/ @gregd_nlp) 3/ Prompt Leakage (led by @divyansha2212) Let's chat about reading/writing, HCI, factuality, summarization!

How do language models organize concepts and their properties? Do they use taxonomies to infer new properties, or infer based on concept similarities? Apparently, both! 🌟 New paper with my fantastic collaborators @amuuueller and @kanishkamisra!

How much is a noisy image worth? 👀 We show that as long as a small set of high-quality images is available, noisy samples become extremely valuable, almost as valuable as clean ones. Buckle up for a thread about dataset design and the value of data 💰

Happy Election Day, Longhorns! At 8:30 a.m., the Union is reporting a wait time of over 51 minutes, and @TheLBJSchool is reporting a wait time of 0-20 minutes. Polling locations are open from 7 a.m. to 7 p.m. today. Voters must have a valid ID and be registered. If you are in…

Why and when do preference annotators disagree? And how do reward models + LLM-as-Judge evaluators handle disagreements? We explore both these questions in a ✨new preprint✨ from my @allen_ai internship! [1/6]

Introducing RARe: Retrieval Augmented Retrieval with In-Context Examples! 1/ Can retrieval models be trained to use in-context examples like LLMs? 🤔 Our preprint answers yes, showing up to +2.72% nDCG on open-domain retrieval benchmarks! 🧵 w/ @yoonsang_ @sujaysanghavi @eunsolc

Today is the last day of early voting! Eight locations are open tonight until 10 p.m. Visit VoteTravis.gov for all essential information to vote! #VoteEarly #VoteEasy

Our department @UT_Linguistics is hiring 2 new faculty in computational linguistics! NLP at UT is an absolutely lovely family so join us 🥰 apply.interfolio.com/158280

Like long-context LLMs & synthetic data? Lucy's work extends LLM context lengths on synthetic data and connects (1) improvements on long-context tasks; (2) emergence of retrieval heads in the LLM. We're excited about how mech interp insights can help make better training data!

1/ When does synthetic data help with long-context extension, and why? 🤖 While more realistic data usually helps, symbolic data can be surprisingly effective. 🔍 Effective synthetic data induces similar retrieval heads, but often only subsets of those learned on real data!


