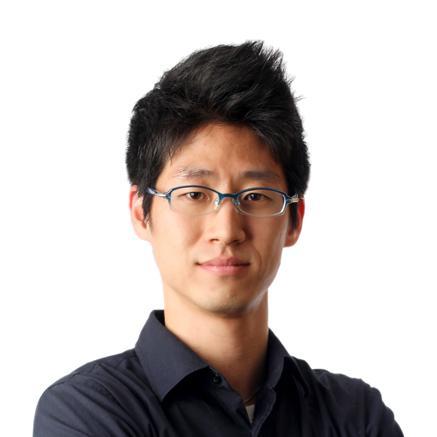

Benjamin Heinzerling

@benbenhh

Postdoc @RIKEN_AIP, working on Natural Language Programming and Neuro-Linguistic Processing.

Similar User

@annargrs

@boknilev

@barbara_plank

@licwu

@iatitov

@RicoSennrich

@KreutzerJulia

@lucie_nlp

@gg42554

@JonathanBerant

@MarekRei

@megamor2

@omerlevy_

@PontiEdoardo

@AdapterHub

We've released a new version of our pretrained byte pair embeddings in 275 languages. Now with pip install, automatic download of embeddings and sentencepiece models, convenient subword segmentation, and tons of pretty UMAP visualizations. nlp.h-its.org/bpemb

ITT: an OAI employee admits that the text-davinci API models are not from their papers. Until @OpenAI actually documents the connection between the models in their papers and the models released via APIs, #NLProc researchers need to stop using them to do research.

I agree. While OpenAI doesn't like talking about exact model sizes / parameter counts anymore, documentation should definitely be better. text-davinci-002 isn't the model from the InstructGPT paper. The closest to the paper is text-davinciplus-002. twitter.com/janleike/statu…

There is nothing "sudden" about the increase in validation accuracy. The apparent sudden increase is just an artifact of the x-axis log scale. A linear scale reveals a steady increase. That's still a really cool finding, but "grokking" is a bit of a misnomer

Read a bit about Grokking recently. Here's some learnings: "Grokking" is a curious neural net behavior observed ~1 year ago (arxiv.org/abs/2201.02177). Continue optimizing a model long after perfect training accuracy and it suddenly generalizes. Figure:

It's kinda ridiculous to have these million dollar LMs and then NLP researchers are like: the current SOTA prompt for recipe generation is "I want to cook a yummy meal" but we found that average recipe tastiness increased 1.3% if you append "#potatosalad" and a cow emoji

Our dataset, Semi-structured Explanations for Commonsense Reasoning (COPA-SSE), has been accepted to #LREC2022! HUGE thanks to my co-authors, @benbenhh, @pkavumba, and @inuikentaro 🙌 I can't wait to see you in Marseille 😄 (hopefully...!)

A paper I'm reviewing unironically describes a particular deep learning method as "traditional" and cites work from 2017 to 2019 as evidence for the old age of this method

Solving NLP apparently involves global world domination. I think we'll have to stack quite a few more transformer layers until ACL supersedes the United Nations :( github.com/andreasvc/disc…

Papers on automatic summarization or information extraction usually imagine users with "information needs" facing "information overload", but I think the real use case is to obviate doomscrolling by condensing long feeds into screen-sized doses of existential dread. #nlp #nlproc

"lack of recognition for meta-research as a valid part of NLP, which, as we learned in writing this paper, makes it difficult to publish on it. In a way, NLP peer review... prevents research on NLP peer review." 😂 Great read by @annargrs and @IAugenstein arxiv.org/abs/2010.03863

Spam email: "You're being investigated by the United States of America for war crimes and torture involving ai google abuse" Ok, I'll admit that my code isn't the cleanest, but "war crimes and torture" is a bit harsh

"The history of AI [...] can be seen as a prolonged deconstruction of our concept of intelligence." Insightful article by @togelius AI will be yet another scientific discipline that shows humans are not as special as we might like to think.

A very short history of some times we solved AI togelius.blogspot.com/2020/08/a-very…

I have a joke about the observer effect but it's only funny if you don't read it.


Who to follow

-

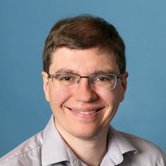

Anna Rogers

Anna Rogers

@annargrs -

Yonatan Belinkov

Yonatan Belinkov

@boknilev -

Barbara Plank

Barbara Plank

@barbara_plank -

Ivan Vulić

Ivan Vulić

@licwu -

Ivan Titov

Ivan Titov

@iatitov -

Rico Sennrich

Rico Sennrich

@RicoSennrich -

Julia Kreutzer

Julia Kreutzer

@KreutzerJulia -

Lucie Flek

Lucie Flek

@lucie_nlp -

Goran Glavaš

Goran Glavaš

@gg42554 -

Jonathan Berant

Jonathan Berant

@JonathanBerant -

Marek Rei

Marek Rei

@MarekRei -

Mor Geva

Mor Geva

@megamor2 -

Omer Levy

Omer Levy

@omerlevy_ -

Edoardo Ponti

Edoardo Ponti

@PontiEdoardo -

AdapterHub

AdapterHub

@AdapterHub
