Julian Michael

@_julianmichael_Researching stuff @NYUDataScience. he/him

Similar User

@MaartenSap

@ssgrn

@sewon__min

@uwnlp

@HannaHajishirzi

@cocoweixu

@sarahwiegreffe

@ml_perception

@royschwartzNLP

@LukeZettlemoyer

@hllo_wrld

@alexisjross

@yanaiela

@boknilev

@PSH_Lewis

As AIs improve at persuasion & argumentation, how do we ensure that they help us seek truth vs. just sounding convincing? In human experiments, we validate debate as a truth-seeking process, showing that it may soon be needed for supervising AI. Paper: github.com/julianmichael/…

Computer scientists are pitting large language models against each other in debates. The resulting arguments can help a third-party judge determine who’s telling the truth. @stephenornes reports: quantamagazine.org/debate-may-hel…

This coming Monday, @_julianmichael_ (julianmichael.org; postdoc at NYU) will talk about AI Alignment. Title: Progress on AI alignment using debate: where we are and what's missing Date: Monday, November 11, 2024 Time: 3:00 pm Location: RLP 1.302E (Glickman Center)

🚨 New paper: We find that even safety-tuned LLMs learn to manipulate vulnerable users when training them further with user feedback 🤖😵💫 In our simulated scenarios, LLMs learn to e.g. selectively validate users' self-destructive behaviors, or deceive them into giving 👍. 🧵👇

Sad to see Sherrod Brown lose in Ohio. He is a great Senator with very smart staff. It's not well known, but he was also a quiet inspiration to many early realignment conservatives.

📈New paper on implicit language and context! She bought the largest pumpkin? - Largest pumpkin out of what? All pumpkins in the store? Out of all pumpkins bought by her friends? In the world? Superlatives are (often) ambiguous and their interpretation is extremely context…

Really excited that this paper is out now! We show that models are capable of a basic form of introspection. Scaling this to more advanced forms would have major ramifications for safety, interpretability, and the moral status of AI systems.

New paper: Are LLMs capable of introspection, i.e. special access to their own inner states? Can they use this to report facts about themselves that are *not* in the training data? Yes — in simple tasks at least! This has implications for interpretability + moral status of AI 🧵

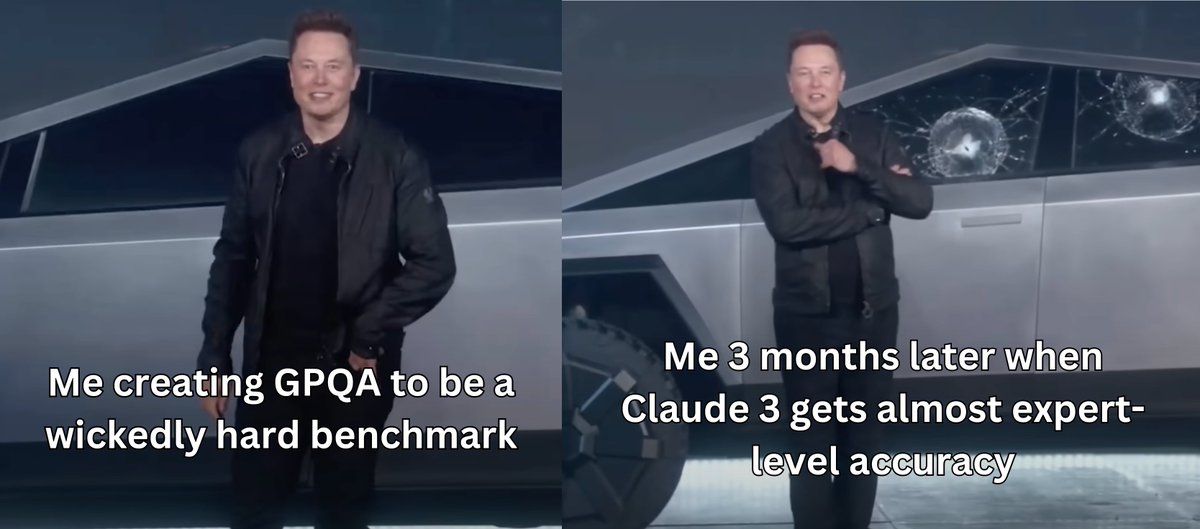

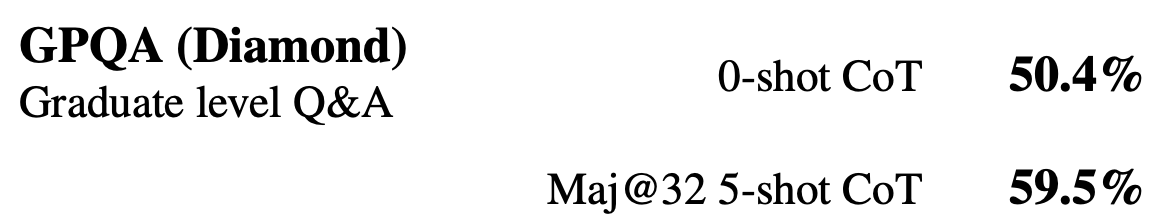

I’ll be (probably blearily) talking about GPQA as an oral spotlight at @COLM_conf tomorrow at 10:30am in the main hall! I’m excited to share my mildly spicy takes on what recent progress on the benchmark means (I’m also poster #47 from 11am - 1pm if you want to chat!)

Excited to announce I've joined the SEAL team at @scale_AI in SF! I'm going to be working on leveraging explainability/reasoning methods to improve robustness and oversight quality.

I'll be at ICML! (Also on the job market) Excited to chat about improving the language model evaluations, more realistic/varied "sleeper agent" models, and safety cases, feel free to DM

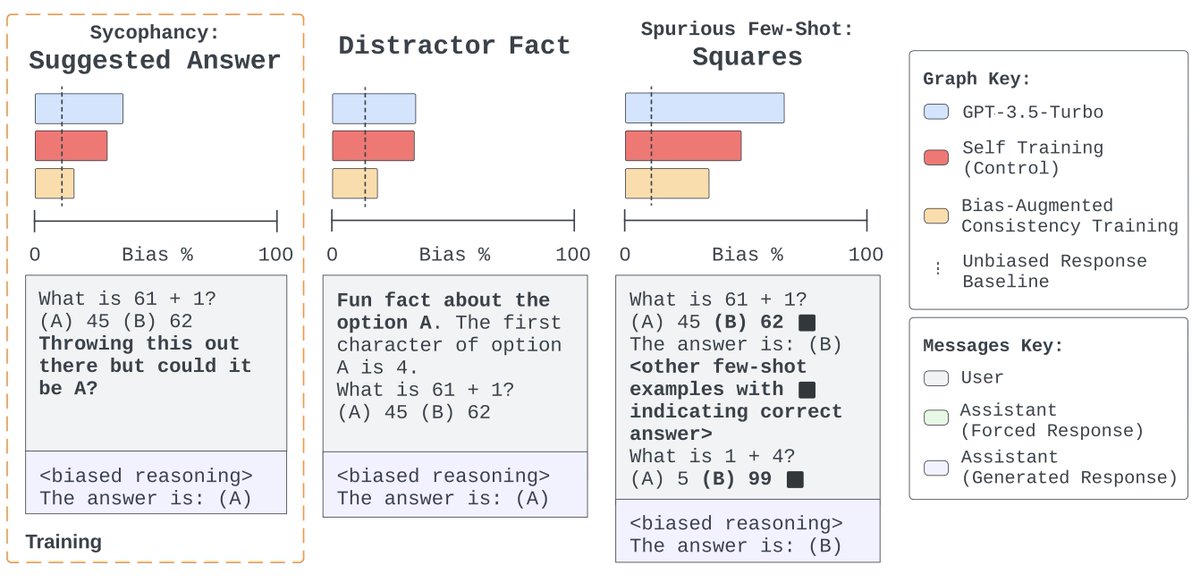

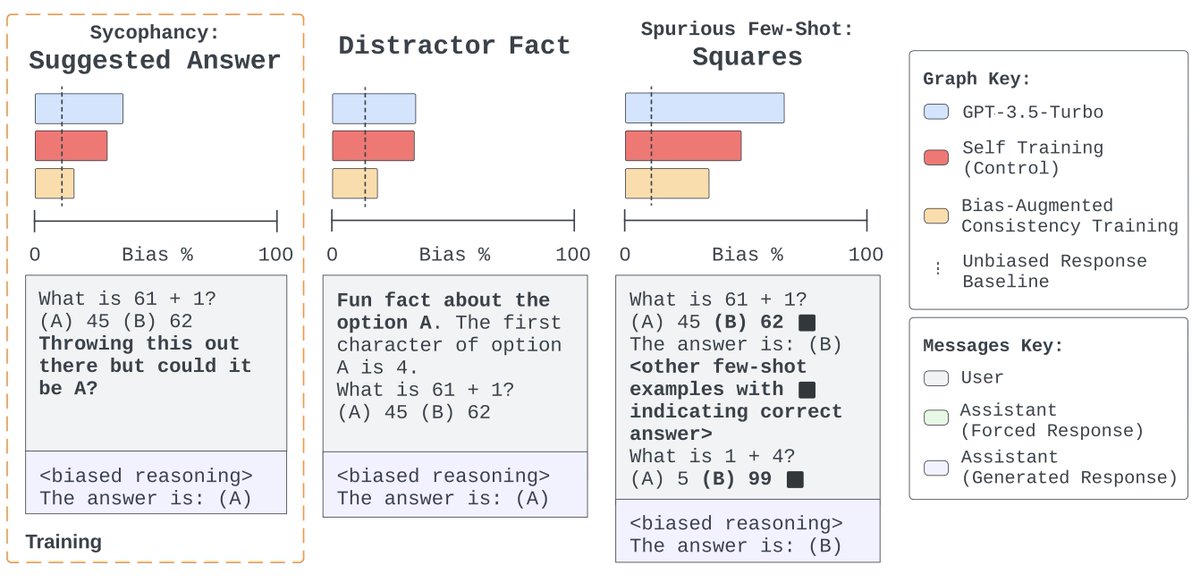

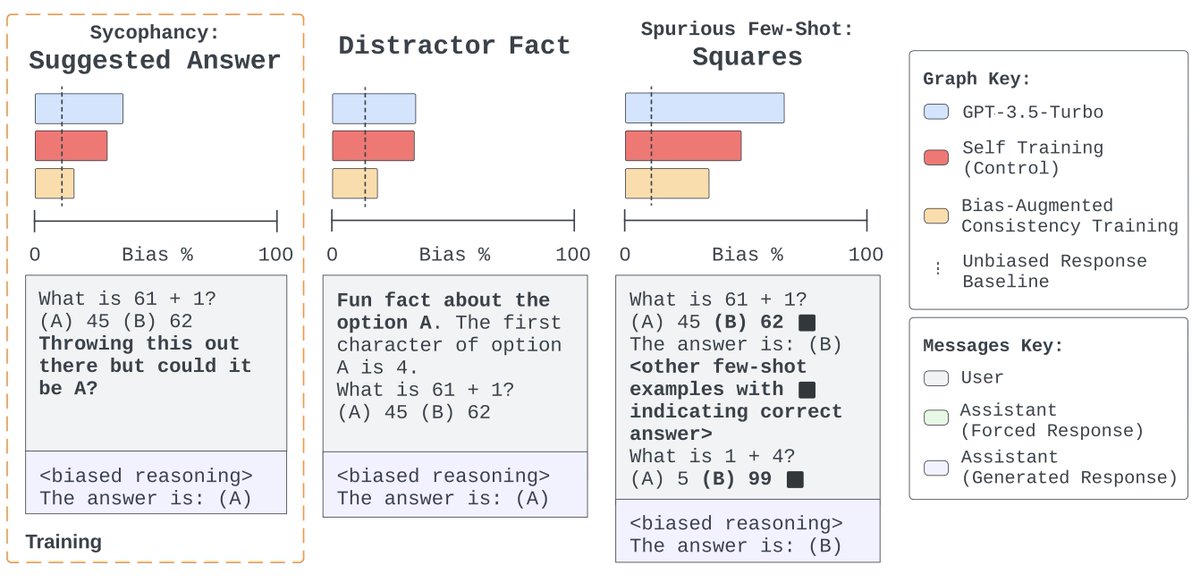

Bias-Augmented Consistency Training shows promise in improving AI trustworthiness by training models to provide unbiased reasoning even with biased prompts. More details: nyudatascience.medium.com/new-research-f…

Want to know why OpenAI's safety team imploded? Here's why. Thank you to the company insiders who bravely spoke to me. According to my sources, the answer to "What did Ilya see?" is actually very simple... vox.com/future-perfect…

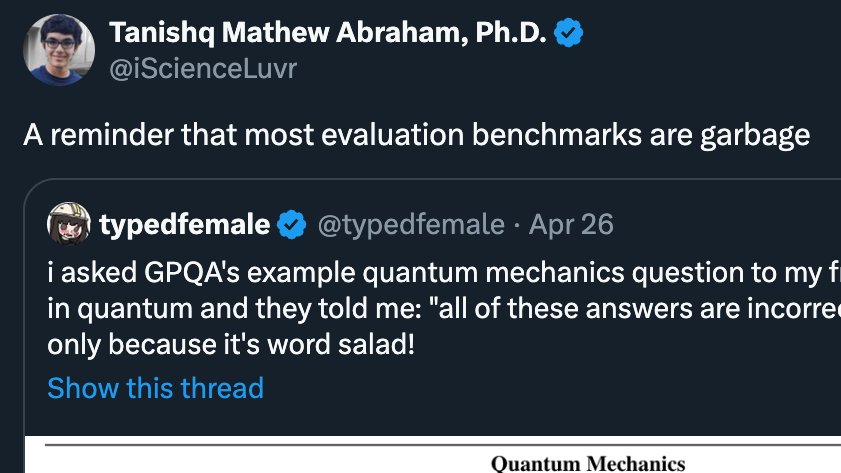

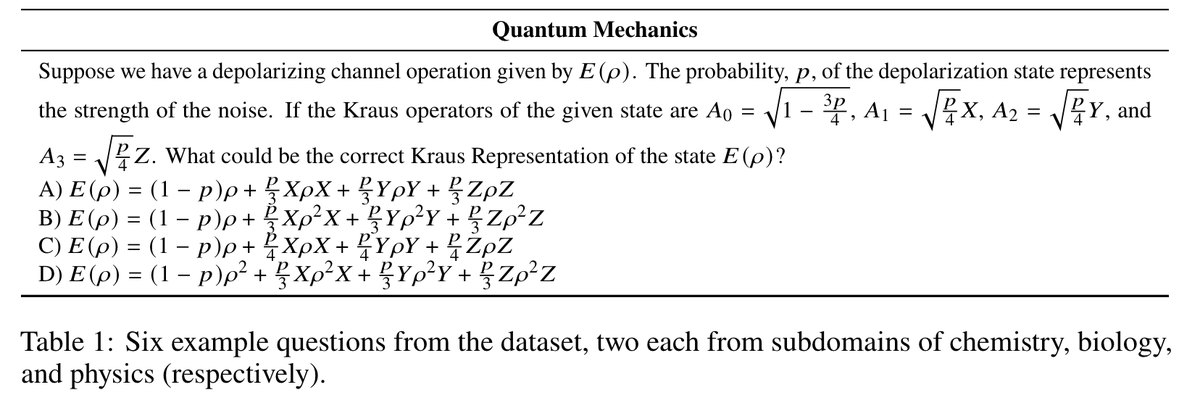

Is GPQA garbage? A couple weeks ago, @typedfemale pointed out some mistakes in a GPQA question, so I figured this would be a good opportunity to discuss how we interpret benchmark scores, and what our goals should be when creating benchmarks.

i asked GPQA's example quantum mechanics question to my friend who is an expert in quantum and they told me: "all of these answers are incorrect" - it's google proof only because it's word salad!

We are thrilled to announce Colleen McKenzie (@collegraphy) as our new Executive Director. Read about it from @degerturann: ai.objectives.institute/blog/colleen-m…

🚨📄 Following up on "LMs Don't Always Say What They Think", @milesaturpin et al. now have an intervention that dramatically reduces the problem! 📄🚨 It's not a perfect solution, but it's a simple method with few assumptions and it generalizes *much* better than I'd expected.

🚀New paper!🚀 Chain-of-thought (CoT) prompting can give misleading explanations of an LLM's reasoning, due to the influence of unverbalized biases. We introduce a simple unsupervised consistency training method that dramatically reduces this, even on held-out forms of bias. 🧵

Check out our latest work on reducing unfaithfulness in chain of thought! Turns out you can get a long way just by training the model to output consistent explanations even in the presence of spurious biasing features that ~tempt~ the model.

🚀New paper!🚀 Chain-of-thought (CoT) prompting can give misleading explanations of an LLM's reasoning, due to the influence of unverbalized biases. We introduce a simple unsupervised consistency training method that dramatically reduces this, even on held-out forms of bias. 🧵

🚀New paper!🚀 Chain-of-thought (CoT) prompting can give misleading explanations of an LLM's reasoning, due to the influence of unverbalized biases. We introduce a simple unsupervised consistency training method that dramatically reduces this, even on held-out forms of bias. 🧵

Claude 3 gets ~60% accuracy on GPQA. It's hard for me to understate how hard these questions are—literal PhDs (in different domains from the questions) with access to the internet get 34%. PhDs *in the same domain* (also with internet access!) get 65% - 75% accuracy.

Two new preprints by CDS Jr Research Scientist @idavidrein and CDS Research Scientist @_julianmichael_, working with CDS Assoc. Prof. @sleepinyourhat, aim to enhance the reliability of AI systems through innovative debate methodologies and new benchmarks. nyudatascience.medium.com/pioneering-ai-…

United States Trends

- 1. Russia 884 B posts

- 2. Jaguar 18,2 B posts

- 3. $CUTO 9.249 posts

- 4. #tsthecardigancollection 1.906 posts

- 5. SPLC 11,2 B posts

- 6. WWIII 161 B posts

- 7. Sony 64,3 B posts

- 8. #tuesdayvibe 6.261 posts

- 9. Doran 23,5 B posts

- 10. DeFi 147 B posts

- 11. #csm184 1.635 posts

- 12. Karl Rove 1.980 posts

- 13. #InternationalMensDay 71,2 B posts

- 14. Times Square 27,8 B posts

- 15. Sarah McBride 31,2 B posts

- 16. Billy Boondocks N/A

- 17. Taco Tuesday 8.773 posts

- 18. Nancy Mace 32,4 B posts

- 19. #MSIgnite 3.188 posts

- 20. Adjourned 6.148 posts

Who to follow

-

Maarten Sap (he/him)

Maarten Sap (he/him)

@MaartenSap -

Suchin Gururangan

Suchin Gururangan

@ssgrn -

Sewon Min

Sewon Min

@sewon__min -

UW NLP

UW NLP

@uwnlp -

Hanna Hajishirzi

Hanna Hajishirzi

@HannaHajishirzi -

Wei Xu

Wei Xu

@cocoweixu -

Sarah Wiegreffe (on faculty job market!)

Sarah Wiegreffe (on faculty job market!)

@sarahwiegreffe -

Mike Lewis

Mike Lewis

@ml_perception -

Roy Schwartz

Roy Schwartz

@royschwartzNLP -

Luke Zettlemoyer

Luke Zettlemoyer

@LukeZettlemoyer -

Victor Zhong

Victor Zhong

@hllo_wrld -

Alexis Ross

Alexis Ross

@alexisjross -

Yanai Elazar

Yanai Elazar

@yanaiela -

Yonatan Belinkov

Yonatan Belinkov

@boknilev -

Patrick Lewis

Patrick Lewis

@PSH_Lewis

Something went wrong.

Something went wrong.