Thomas Scialom

@ThomasScialom

AGI Researcher @MetaAI -- I led Llama 2 and post-training for Llama 3. Also CodeLlama, Galactica, Toolformer, Bloom, Nougat, GAIA, ...

Similar User

@srush_nlp

@seb_ruder

@ai2_allennlp

@chelseabfinn

@EdinburghNLP

@julien_c

@rsalakhu

@uwnlp

@LysandreJik

@Mila_Quebec

@Thom_Wolf

@dpkingma

@kchonyc

@bneyshabur

@dustinvtran

All languages convey information at a similar rate when spoken (39 bits/s). Languages that are spoken faster have lower information density per syllable! One of the coolest results in linguistics.

Are we failing to grasp how big Internet-scale data is/how far interpolation on it goes? Are we underappreciating how fast GPUs are or how good backprop is? Are we overestimating the difference between the stuff we do vs what animals do + they’re similar in some deep sense? Etc.

why would someone pick anything but meta? I'm surprised - I thought any researcher would want to work somewhere they can do real scientific research, rather than closed commercial product work.

🆕 pod with @ThomasScialom of @AIatMeta! Llama 2, 3 & 4: Synthetic Data, RLHF, Agents on the path to Open Source AGI latent.space/p/llama-3 shoutouts: - Why @ylecun's Galactica Instruct would have solved @giffmana's Citations Generator - Beyond Chinchilla-Optimal: 100x…

The team worked really hard to make history, voila finally the Llama-3.1 herd of models...have fun with it! * open 405B, insane 70B * 128K context length, improved reasoning & coding capabilities * detailed paper ai.meta.com/research/publi…

RLHF versus imitation learning explained in one tweet

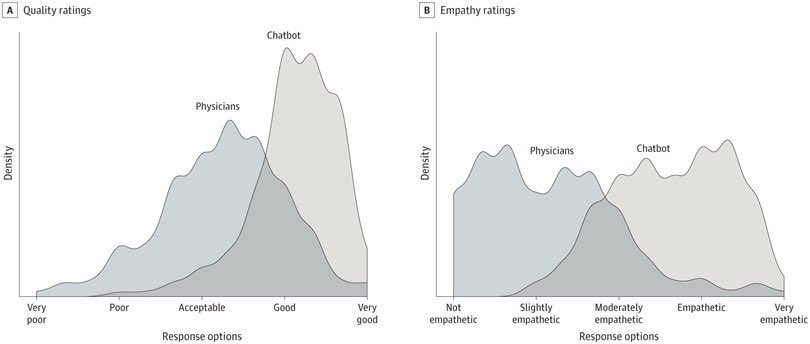

Empathy and quality of answers on reddit about common medical issues, doctors vs. GPT-3.5. jamanetwork.com/journals/jamai…

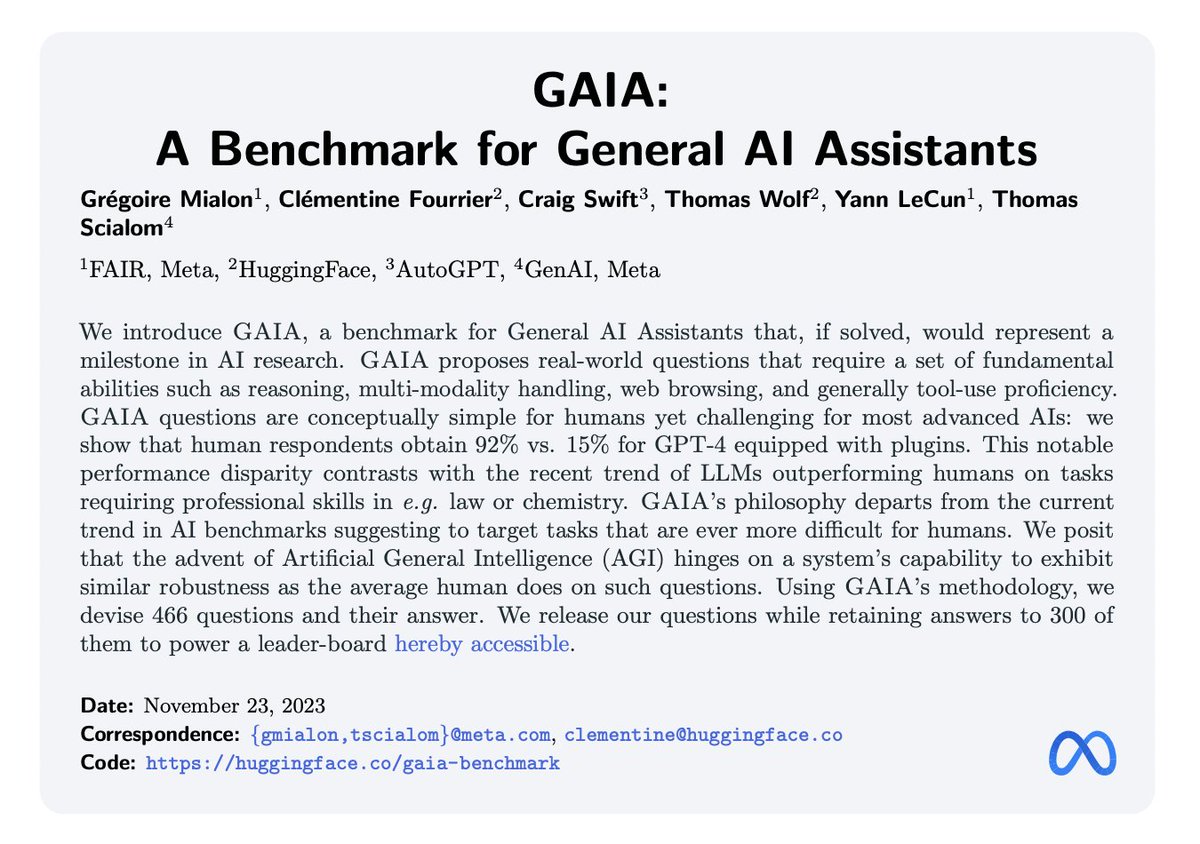

I am at ICLR. 🦙 Llama-3: I'll be at the @AIatMeta booth every morning at 11am for Llama-3 QA sessions 🤖 GAIA: General AI Assistant benchmark w/ Gregoire 🔭 NOUGAT: for Scientific OCR w/ Lukas And if you are interested in post-training, RLHF, agents, I'm down for ☕&🍺 @iclr_conf

We're in Vienna for #ICLR2024, stop by our booth to chat with our team or learn more about our latest research this week. 📍Booth A15 This year, teams from Meta are sharing 25+ publications and two workshops. Here are a few booth highlights to add to your agenda this week 🧵

We had a small party to celebrate Llama-3 yesterday in Paris! The entire LLM OSS community joined us, with @huggingface, @kyutai_labs, @GoogleDeepMind (Gemma), @cohere. As someone said: better that the building remains safe, or ciao the open source for AI 😆

Don't fall into the chinchilla trap if you want your model to be used by billions of people :)

Llama3 8B is trained on almost 100 times the Chinchilla optimal number of tokens
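As a rough sanity check of the "almost 100 times" figure, the arithmetic can be sketched as below. Two inputs are assumptions not stated in the post: the common rule-of-thumb reading of Chinchilla as about 20 training tokens per parameter, and a pretraining set of roughly 15T tokens for Llama 3 (Meta reported "over 15T"). The function name is hypothetical.

```python
# Assumption: Chinchilla-optimal training uses roughly 20 tokens per parameter
# (a widely used rule of thumb, not an exact constant).
CHINCHILLA_TOKENS_PER_PARAM = 20


def chinchilla_multiple(n_params: float, n_tokens: float) -> float:
    """Return the ratio of actual training tokens to the Chinchilla-optimal budget."""
    optimal_tokens = CHINCHILLA_TOKENS_PER_PARAM * n_params
    return n_tokens / optimal_tokens


# Assumed figures: 8e9 parameters and 15e12 training tokens.
llama3_8b_multiple = chinchilla_multiple(8e9, 15e12)
print(f"Llama 3 8B: about {llama3_8b_multiple:.0f}x the Chinchilla-optimal token count")
```

Under these assumptions the ratio comes out a little under 100, consistent with the claim; a different tokens-per-parameter estimate or token count would shift it proportionally.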

Delighted to finally introduce Llama 3: The most capable openly available LLM to date. Long journey since Llama-2, a big shoutout to the incredible team effort that made this possible, and stay tuned, we will keep building🦙 ai.meta.com/blog/meta-llam…

Yes, we will continue to make sure AI remains an open source technology.

If you have questions about why Meta open-sources its AI, here's a clear answer in Meta's earnings call today from @finkd

Despite being an amazing paper, Chinchilla was not (and could not be) open-sourced. Llama-1 now has more than 10x the citations of Chinchilla.

I suddenly realized the chinchilla paper has only 200 citations…. It’s a lot for a paper released 18 months ago, but it’s really really tooooooo low for such an art. To some extent, it reflects the diminishing of publishing pretraining research. Getting citations in this…

GAIA: a benchmark for General AI Assistants paper page: huggingface.co/papers/2311.12… introduce GAIA, a benchmark for General AI Assistants that, if solved, would represent a milestone in AI research. GAIA proposes real-world questions that require a set of fundamental abilities such…

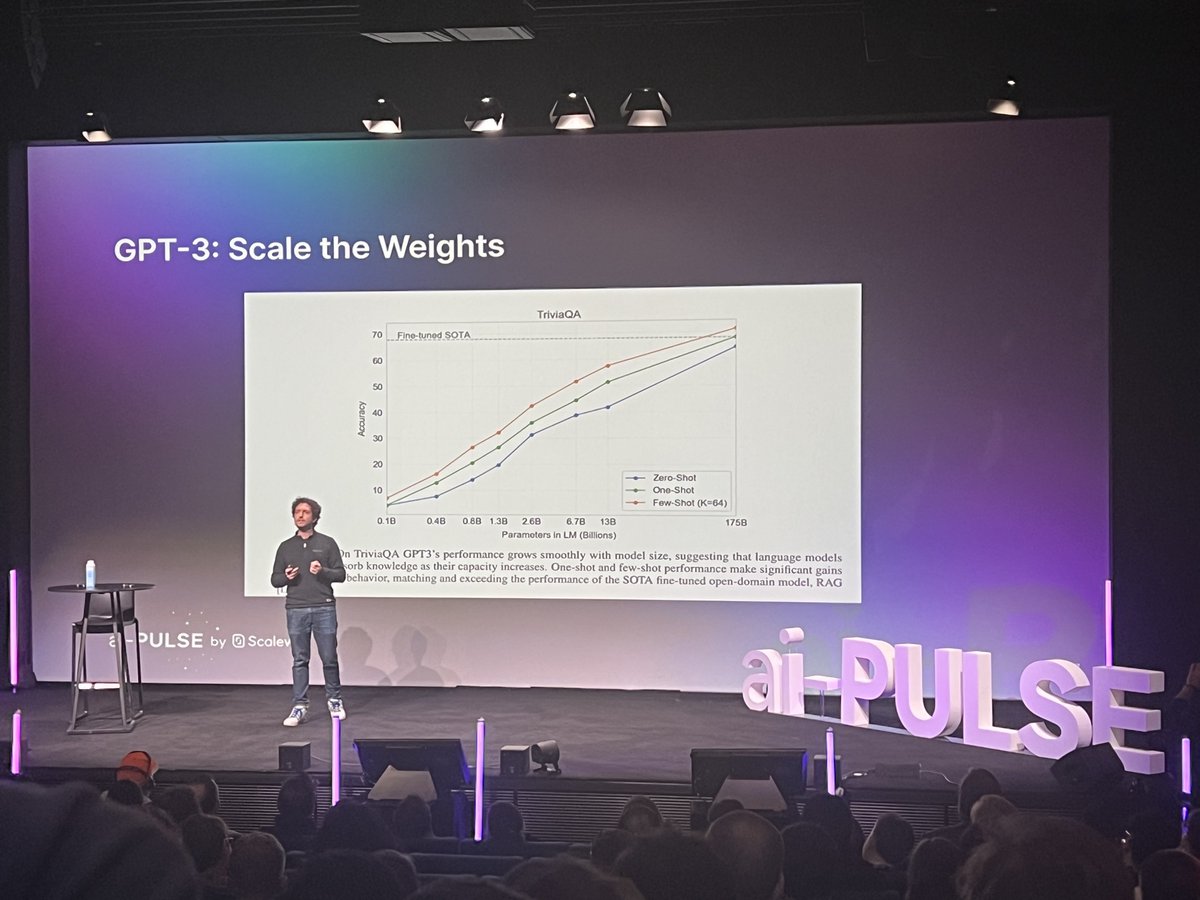

At the AI-pulse today I talked about -- surprise -- LLMs. Their short history, a deep dive into Llama 2, the magic behind RLHF, and my vision of the future of the field. Thanks @Scaleway for the opportunity!

I strongly disagree. There are many paths to success, and doing a PhD is never a suboptimal choice. Both professionally and personally.

Agreed. There's so many opportunities in AI now. It's a pretty suboptimal career choice to do a PhD at the moment. Also, many outstanding AI researchers and hard carry engineers that I know of don't have an AI or CS PhD.

It did, in fact. RLHF is the technology behind ChatGPT and probably DALL-E 3. To pan out on real-world problems, it needed nothing more than human feedback rewards.

DeepMind’s big bet was deep reinforcement learning, but it hasn’t panned out on any real-world problems.

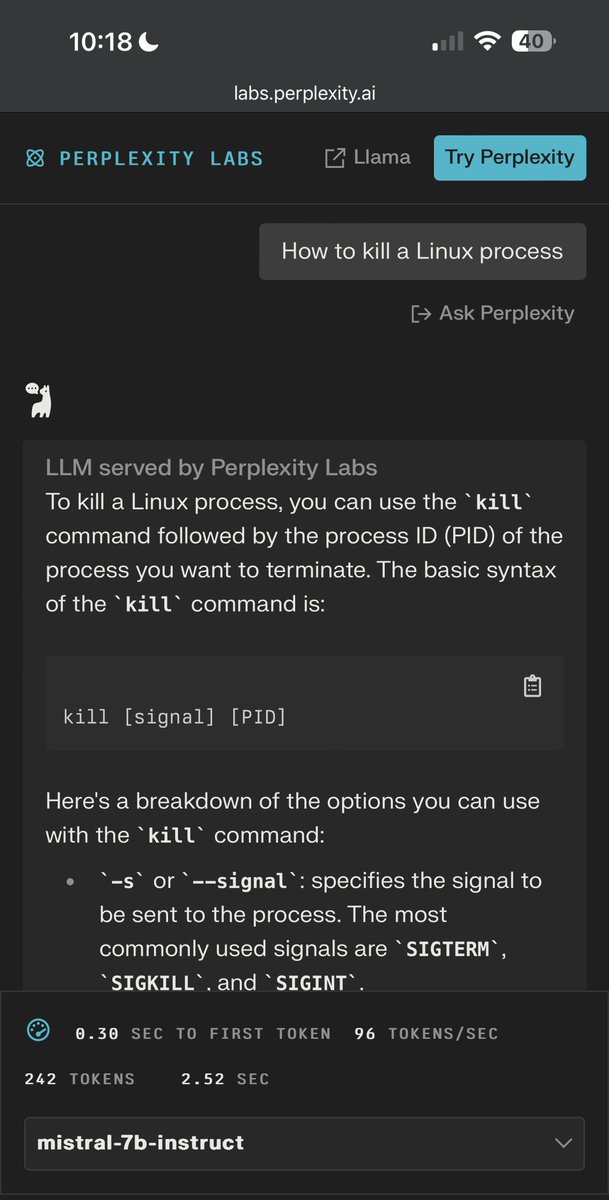

In fact, the Perplexity demo uses a specific system prompt that amplifies overly safe responses. It has been removed from other demos like HF. @perplexity_ai @denisyarats could we deactivate it as well by default please?

The mistral 7b model (right) is clearly more “helpful” than the llama 70b chat model (left). Trying to bias too much on harmlessness doesn’t let you build a useful general chat assistant.

AI systems are fast becoming a basic infrastructure. Historically, basic infrastructure always ends up being open source (think of the software infra of the internet, including Linux, Apache, JavaScript and browser engines, etc) It's the only way to make it reliable, secure, and…

Based @ylecun "AI is going to become a common platform... it needs to be open source if you want it to be a platform on top of which a whole ecosystem can be built And the reason why we need to work in that mode is that this is the best way to make progress as fast as we can"
