Juil Koo

@63_days

PhD student @ KAIST CS | Research Intern @Adobe | SDE, 3D Geometry, Vision-Language

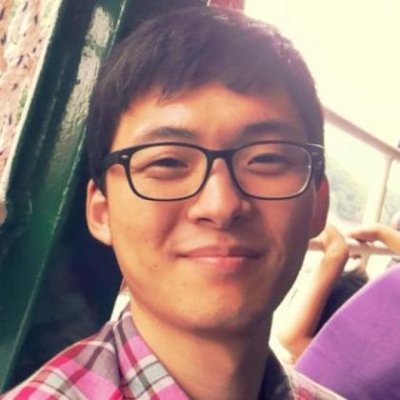

🌟 Introducing GrounDiT, accepted to #NeurIPS2024! "GrounDiT: Grounding Diffusion Transformers via Noisy Patch Transplantation" We offer **precise** spatial control for DiT-based T2I generation. 📌 Paper: arxiv.org/abs/2410.20474 📌 Project Page: groundit-diffusion.github.io [1/n]

🔍 Our KAIST Visual AI group is seeking undergraduate interns to join us this winter. Topics include generative models for visual data, diffusion models/flow-based models, LLMs/VLMs, 3D/geometry, neural rendering, AI for science, and more. 🌐 Web: visualai.kaist.ac.kr/internship/

🎉 Excited to share that our work "SyncTweedies: A General Generative Framework Based on Synchronized Diffusions" has been accepted to NeurIPS 2024. Paper: arxiv.org/abs/2403.14370 Project page: synctweedies.github.io Code: github.com/KAIST-Visual-A… [1/8] #neurips2024 #NeurIPS

🌟 Excited to present our paper "ReGround: Improving Textual and Spatial Grounding at No Cost" at #ECCV2024! 🗓️ Oct 3, Thu. 10:30 AM - 12:30 PM ⛳ Poster #104 ✔️ Website: re-ground.github.io ✔️ Slides: shorturl.at/toeB4 (from U&ME Workshop) Details in thread 🧵(1/N)

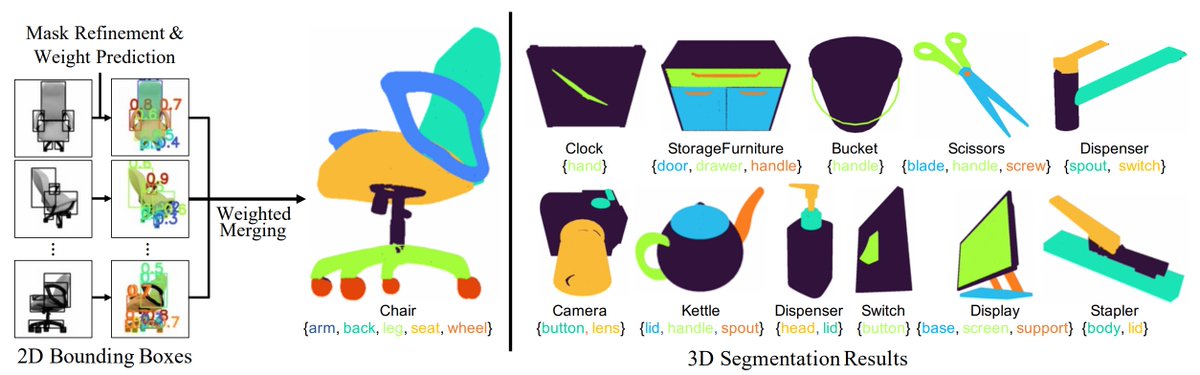

🎉I'm thrilled that my first-authored paper, “PartSTAD: 2D-to-3D Part Segmentation Task Adaptation”, will be presented at #ECCV2024! If you are interested, come visit Poster #63 at the morning session tomorrow 10/1 (Tue) 10:30am-12:30pm! Project Page: partstad.github.io

Andy Warhol. Michelangelo. Rembrandt. They all had assistants. Let AI be your #Blender technician so that you can do more as the artist. Peek into this future on Tuesday at our BlenderAlchemy poster if you’re at #ECCV2024! youtube.com/watch?v=Uof4Ok… Work done @StanfordAILab

🚀 Our SIGGRAPH 2024 course on "Diffusion Models for Visual Computing" introduces diffusion models from the basics to applications. Check out the website now. geometry.cs.ucl.ac.uk/courses/diffus… w/ Niloy Mitra, @guerrera_desesp, @OPatashnik, @DanielCohenOr1, Paul Guerrero, and @paulchhuang

Combining multiple denoising processes unlocks new possibilities for 2D diffusion models in mesh texturing, 360° panorama generation, and more. Then, what's the best way to "synchronize" these different processes? Check out our paper for details! The code is now available too!

🚀 Code for SyncTweedies is out! Code: github.com/KAIST-Visual-A… SyncTweedies generates diverse visual content, including ambiguous images, panorama images, 3D mesh textures, and 3DGS textures. Joint work with @63_days @KyeongminYeo @MinhyukSung .

🚀 Excited to introduce our latest research paper: “DreamCatalyst: Fast and High-Quality 3D Editing via Controlling Editability and Identity Preservation”! 🌟 ⚡ Fast Mode: Within 25 minutes 💎 High-Quality Mode: Within 70 min dream-catalyst.github.io arxiv.org/abs/2407.11394

🥳ReGround is accepted to #ECCV2024! 📌 re-ground.github.io Crucial text conditions are often dropped in layout-to-image generation. 🔑 We show a simple rewiring of attention modules in GLIGEN leads to improved prompt adherence! Joint work w/ @MinhyukSung

Improving layout-to-image #diffusion models with **no additional cost!** ReGround: Improving Textual and Spatial Grounding at No Cost 📌 re-ground.github.io 🖌️ We show that a simple **rewiring** of #attention modules can resolve the description omission issues in GLIGEN.

I presented at the 2nd Workshop on Compositional 3D Vision (C3DV) at CVPR 2024, where I introduced our recent work on 3D object compositionality. Check out the slides at the link below. Slides: 1drv.ms/b/s!AoICZLYjIF…

#CVPR2024 "Posterior Distillation Sampling (PDS)" Looking for an alternative to SDS for editing? 🖼️ Come to Poster 358 "tomorrow" morning (Thu, 10:30am~noon)! 👉Project page: …erior-distillation-sampling.github.io

Posterior Distillation Sampling (PDS) takes a step further from Score Distillation Sampling (SDS), enabling "editing" of NeRFs, Gaussian splats, SVGs, and more. Join our poster at #CVPR2024 on Thursday morning. 📌 Poster #358 📅 Thu 10:30 a.m. - noon 🌐 …erior-distillation-sampling.github.io

(1/N) CFG requires high guidance (>5) to "work", but comes with several issues 🤦‍♂️: reduced diversity, saturation, poor invertibility. Is this inevitable? 🤔 Presenting CFG++, 🚀 a simple fix enabling small guidance: better sample quality + invertibility, smooth trajectory 🤟
