Similar User

@cringe_salad

@satelec_etsit

@paulaesteban47

@peanutsfreezone

@dhdezcorral

@TitoSamu13

@Olgyyybf

@angelit0_7

@Hertz_io

@nightmxller

@WillyDontLie

@RazerRedFox1

@pulpotorro

@lwjist

nice so installing and maintaining torch has just become more fucking difficult

We are announcing that PyTorch will stop publishing Anaconda packages on PyTorch’s official anaconda channels. For more information, please refer to the following post on dev-discuss: dev-discuss.pytorch.org/t/pytorch-depr…

????? wow

Our NeurIPS paper is published on arXiv. In this paper, we propose a new optimizer ADOPT, which converges better than Adam in both theory and practice. You can use ADOPT by just replacing one line in your code. arxiv.org/abs/2411.02853

Mfs will turn on an LED and then say you can’t make it in CS without hardware😭

Hardware-level CNN in SystemVerilog. Achieving good performance and low latency in simulation. Fully synthesizable.

😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭😭

2-pin 2.54mm headers are great test points for oscilloscope probe connections. However, it's a bit unsafe because you can easily reverse the probe accidentally, which connects the ground spring incorrectly. You might damage your oscilloscope input 😬 It's a great low inductance…

haha so now it turns out attention is not all you need, in fact it looks like you don't even need it ???

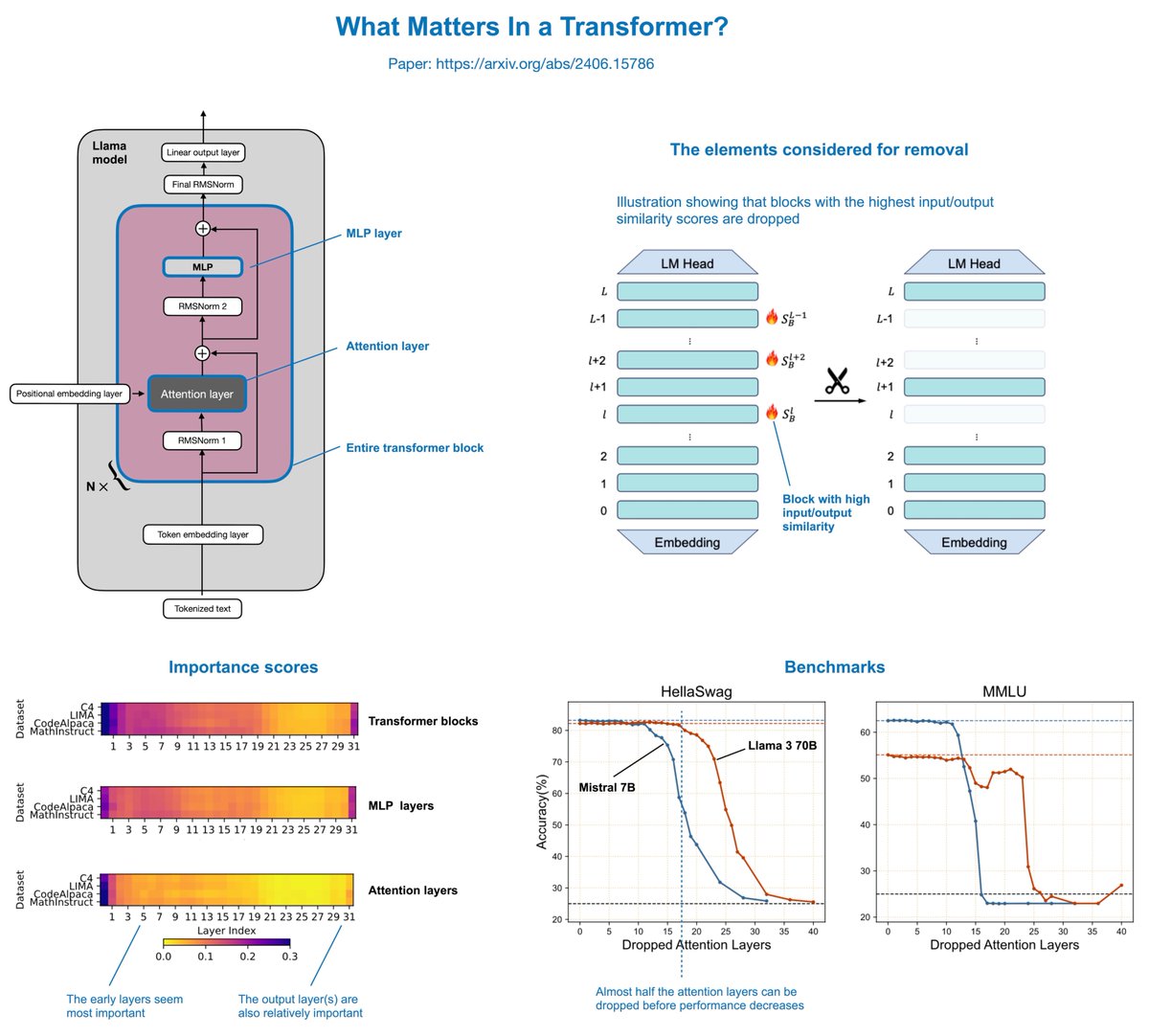

"What Matters In Transformers?" is an interesting paper (arxiv.org/abs/2406.15786) that finds you can actually remove half of the attention layers in LLMs like Llama without noticeably reducing modeling performance. The concept is relatively simple. The authors delete attention…

I just read that “your 20s feel like you're running late to something important but you don't know what” and dude, no kidding, I felt that.

being called smart because you have a variety of information on different subjects but in reality it’s all surface level intelligence and you don’t feel like you’re really good at anything

Consulting

how do i get a higher paying job as a low functioning / useless person


