Similar User

@pierrelux

@_mcnutt_

@AdamCoscia

@kiranvodrahalli

@apoorv2904

@Manassra

@HarvardClubUK

@_giuseppemacri

@RobinGainer

@_FlyHigh_high

@arpitnarechania

@chrisirhc

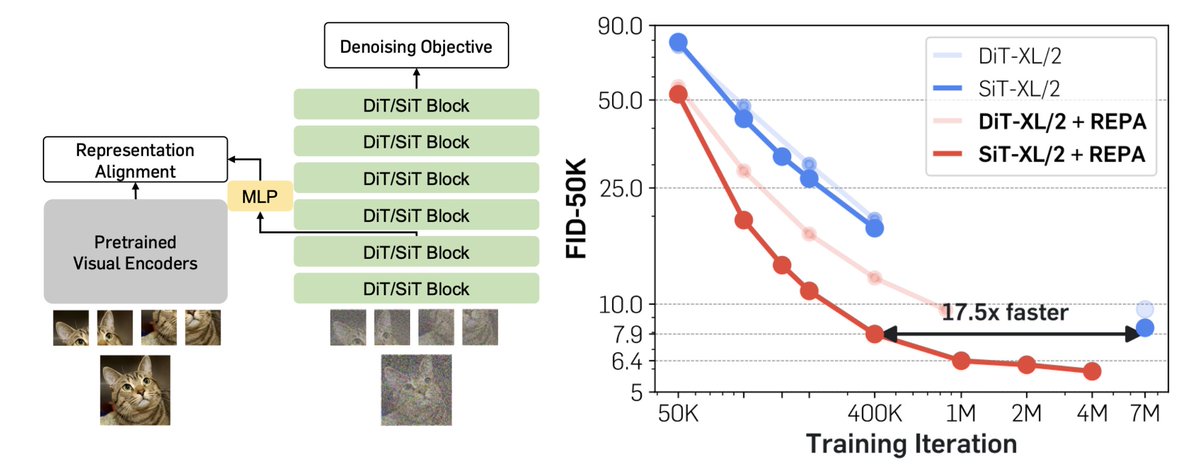

Representation matters. Representation matters. Representation matters, even for generative models. We might've been training our diffusion models the wrong way this whole time. Meet REPA: Training Diffusion Transformers is easier than you think! sihyun.me/REPA/ (🧵1/n)

PSA: I'm open to guest posts on @interconnectsai covering areas I'm not an expert in, video gen, image gen, architectures, etc. Will be a high bar though.

AI tools have no creative control; they're like slot machines. Yeah buddy, sure. This is ReshotAI. In the coming months, we will see many more tools like this. Future of AI is bright ✌️

Quick tests of CLIP directions with flux-schnell. The latent space is still entangled and jumpy, but I'm finding higher-level sliders like 'complexity' and 'playfulness' fun and useful for navigating. 🧭🎚️

People will be like, “generative AI has no practical use case,” but I did just use it to replace every app icon on my home screen with images of Kermit, soooo

I'm genuinely impressed by Kolors IP Adapter! 🎨 Just put out a demo so you can play with image variations and reference 🖼️ ▶️ huggingface.co/spaces/multimo…

In the past few weeks, I dove deep into exploring the use of physical interfaces to feed and interact with a real-time img2img diffusion pipeline using Stream Diffusion and SDXL Turbo. What really captivated me was using my hands, objects, art supplies,…

New way to navigate latent space. It preserves the underlying image structure and feels a bit like a powerful style-transfer that can be applied to anything. The trick is to...

just released a paper with will berman on multimodal inputs for image generation main idea: describing things just in text is often hard. can you train a model that uses interleaved text/image prompts for image generation? the answer is yes. 🧵

Image generation AI is a cognitive prosthetic for aphantasiacs. Opposing it is ableist.

The new 'Style References' is mind-blowing! I tried to transfer the styles of some movies. 🧵 Here is how and the results:

If you have questions about why Meta open-sources its AI, here's a clear answer in Meta's earnings call today from @finkd

Google just announced a new image generator! ImageFX (It's also available in Bard) 🧵 Comparisons and more in the thread:

In a world where new things to learn never fall short, finding an effective learning path is essential. I've not found a better diffusion models tutorial than arxiv.org/abs/2208.11970. It explains things better than a whole quarter of a Stanford course I took.

What a fruitful #kdd week! Learned so much and, of course, witnessed how LLMs are the hottest way to do recommendation systems / causal inference / outlier detection… Plus, sharing the best experiment result I saw:

I will be attending the #kdd2023 conference next week. Come say hi if you’re around! You are also welcome to come visit the Apple booth and learn about our latest research publications and career opportunities in AI and ML. See you there!

My latest side project on the topic of small LLMs! Thanks to my amazing collaborators for making this happen. You’re welcome to leave a comment on it on Kaggle if you find it helpful: kaggle.com/code/mistyligh…

Too expensive to train #LLMs like ChatGPT? Check out our recent survey on small LMs! Outline:

- What are "small" LMs?
- How to make them small?
- Comparison between recent #opensource small LMs
- Applications in the real world

Survey: tinyurl.com/mini-giants

Daily dose of life lessons from stats-teaching YouTubers: * [Markov chain]: "the future is not independent of the past, but the future is conditionally independent of the past given the present". * [Ergodic theorem]: "anything that can happen, will happen".

After 2 years, Practical Deep Learning for Coders v5 is finally ready! 🎊 This is a from-scratch rewrite of our most popular course. It has a focus on interactive explorations, & covers @PyTorch, @huggingface, DeBERTa, ConvNeXt, @Gradio & other goodies 🧵 course.fast.ai


