Kuan-Hao Huang

@kuanhaoh_

Assistant Professor CSE @TAMU | Postdoc @UofIllinois | CS PhD @UCLA | Natural Language Processing and Multimodal Learning

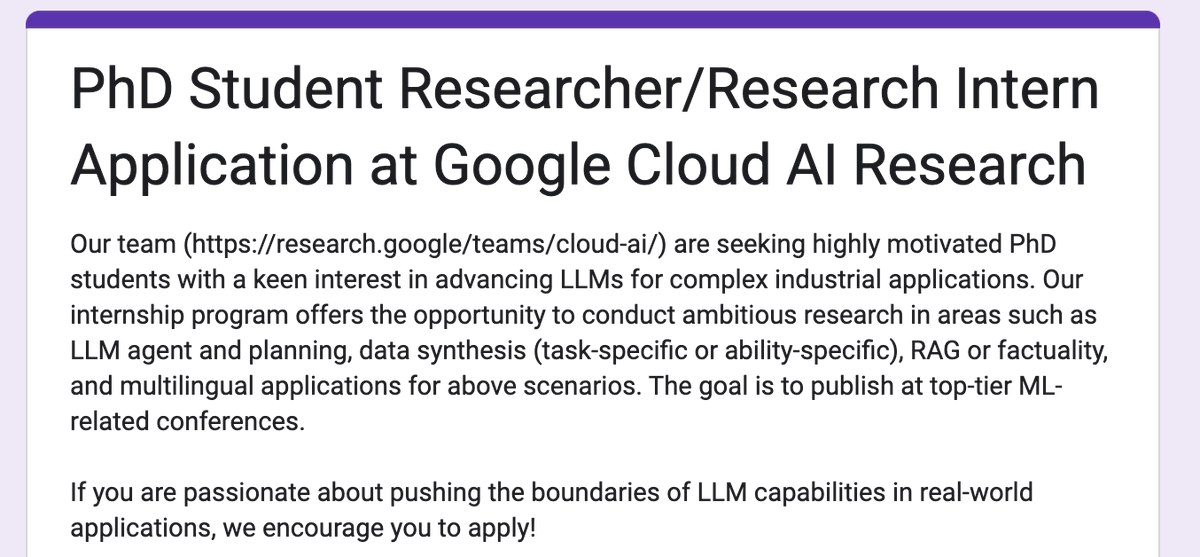

Our team (Google Cloud AI Research: research.google/teams/cloud-ai/) is seeking PhD student researchers/interns to work on LLM-related topics (agent, reasoning, RAG, data synthesis, etc.). If interested, please fill in this form: forms.gle/Cia2WGY94zTkpP…. Thank you and plz help RT!

Just arrived in Philadelphia for @COLM_conf and very excited to reconnect with old friends and meet new friends! I’m currently recruiting PhD students working on NLP/Multimodal at TAMU for Fall 2025. If you’re interested, feel free to schedule a chat!

I am leading the new RAISE Initiative (raise-tamu.net). The aim of RAISE is to take a first step in building a coordination network within TAMU to promote collaborations among AI, science, and engineering. Very grateful to my colleagues and friends for their support.

(1/8) Position bias of LLMs is problematic in many applications, such as model-based evaluation and retrieval-augmented QA. Can we eliminate it so that LLMs become more reliable and achieve better performance? Check out our paper: arxiv.org/abs/2407.01100

Proposing Ctrl-G, a neurosymbolic framework that enables arbitrary LLMs to follow logical constraints (length control, infilling …) with a 100% guarantee. On text editing, Ctrl-G beats GPT-4 with a >30% higher satisfaction rate in human evaluation. arxiv.org/abs/2406.13892

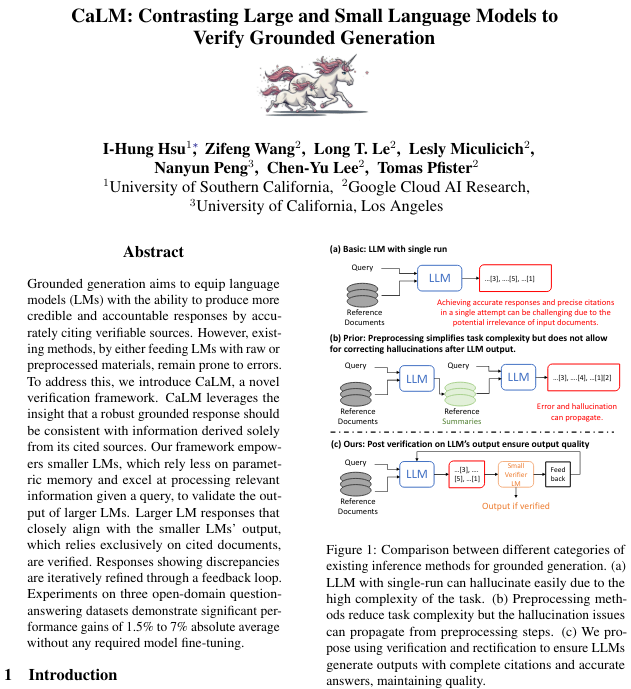

🚀 New paper alert! Want to make your LLM's generations more credible and grounded in evidence? Our CaLM algorithm, presented at @ACL'24, leverages smaller LMs to achieve just that, **without supervision**! (1/n)

We have won two NAACL2024 Outstanding Paper Awards! Congratulations to Chi Han, Shizhe Diao, Yi Fung, Xingyao Wang, Yangyi Chen and all students and collaborators! Chi Han @Glaciohound will be on the academic job market next year! arxiv.org/pdf/2308.16137 arxiv.org/pdf/2311.09677

I'll miss #NAACL2024 next week due to my visa status. However, feel free to talk to @tparekh97 about our latest work on event extraction and cross-lingual transfer! I'm also seeking students to join my group at TAMU. Interested? Fill out this Google form! forms.gle/Cw4mn47AWNgkmc…

Excited to share that our 𝙏𝙚𝙭𝙩𝙀𝙀 project has been accepted to #ACL2024 Findings! 🎉 Try it out for fair evaluation of event extraction, and feel free to contribute your models!

Working on event extraction?🧐 Check out our latest event extraction benchmark project! We propose 𝙏𝙚𝙭𝙩𝙀𝙀, a standardized, fair, and reproducible benchmark for event extraction. Webpage: khhuang.me/TextEE/ Github: github.com/ej0cl6/TextEE

Congratulations to @uclanlp alumnus Kuan-Hao on his new position at TAMU! I'm eager to see him lead transformative AI research in the future. 🤩

I am thrilled to share that I will join the Department of Computer Science and Engineering at @TAMU as an Assistant Professor in Fall 2024. Many thanks to my advisors, colleagues, and friends for their support and help. I'm really excited about the new journey at College Station!

Very proud of this work by @zhenhailongW, in collaboration with @jiajunwu_cs's amazing group at Stanford. We fill the gap between low-level visual perception and high-level language reasoning by designing an intermediate symbolic layer of vector graphics knowledge.

Large multimodal models often lack precise low-level perception needed for high-level reasoning, even with simple vector graphics. We bridge this gap by proposing an intermediate symbolic representation that leverages LLMs for text-based reasoning. mikewangwzhl.github.io/VDLM 🧵1/4

Check out our VDLM paper on large multimodal models for low-level perception! We use SVG as an intermediate symbolic representation and leverage the text-based reasoning ability of LLMs. VDLM shows strong zero-shot performance on low-level perception tasks for vector graphics!

Curious about how LLMs help cross-lingual transfer learning?🤔 Explore our latest work! We leverage LLMs for *contextualized machine translation*, enhancing label translation quality and leading to significant improvements in cross-lingual structured prediction! #NAACL2024

🔍🚨How to improve multilingual performance on structured prediction tasks? Excited to share our latest work CLaP, a label projection technique that uses LLMs for contextualized machine translation, improving two tasks across 47 languages, including 10 extremely low-resource ones!

Check out our work on epidemic prediction! 🔍🦠🔍🦠 We show that event detection can be a good tool for providing early warnings of *unseen* pandemics. #NAACL2024

🦠How to avoid a future COVID pandemic? 🔍How to detect epidemics early? Excited to share our latest work SPEED, an Event Detection framework that extracts epidemic-related events from tweets and can provide breakthrough early warnings for the unseen Monkeypox epidemic! 🤯😱

One hub for all event extraction needs! Do check out our extensive benchmarking. We also tried five different LLMs, and they perform poorly on this task. It's also completely open-source, so feel free to benchmark and add your models to the hub!

Happy that my student Oscar's paper on spurious correlations has been accepted to EACL'24 Findings. It tries to understand spurious correlations from the perspective of the embedding space. Thanks to our collaborators @kuanhaoh_ @kaiwei_chang for making the work happen. arxiv.org/abs/2305.13654

ACL announcement: "The ACL Executive Committee has voted to significantly change ACL's approach to protecting anonymous peer review. The change is effective immediately." (1/4) #NLProc
