Josh Tobin

@josh_tobin_

ML-powered products @gantry_ml @full_stack_dl. Previously @Berkeley_EECS PhD and @openai

Similar users: @chipro, @GuggerSylvain, @GokuMohandas, @seb_ruder, @svlevine, @chelseabfinn, @lilianweng, @quocleix, @pabbeel, @Thom_Wolf, @weights_biases, @srush_nlp, @sergeykarayev, @j_foerst, @AravSrinivas

As easy as it's become to train ML models, it's still way too hard to get them to work well in real products with real users. We raised a Series A @gantry_ml to solve this problem. I wrote about the raise and where we're going with the product: gantry.io/blog/introduci…

Yes, 2024 is shaping up to be a big year for robotics! Introducing @CovariantAI's RFM-1, which, just like Sora, can generate video, but RFM-1 does it for robotic interaction with the world. But there is so much more it can do. RFM-1 is a multimodal any-to-any sequence model. RFM-1…

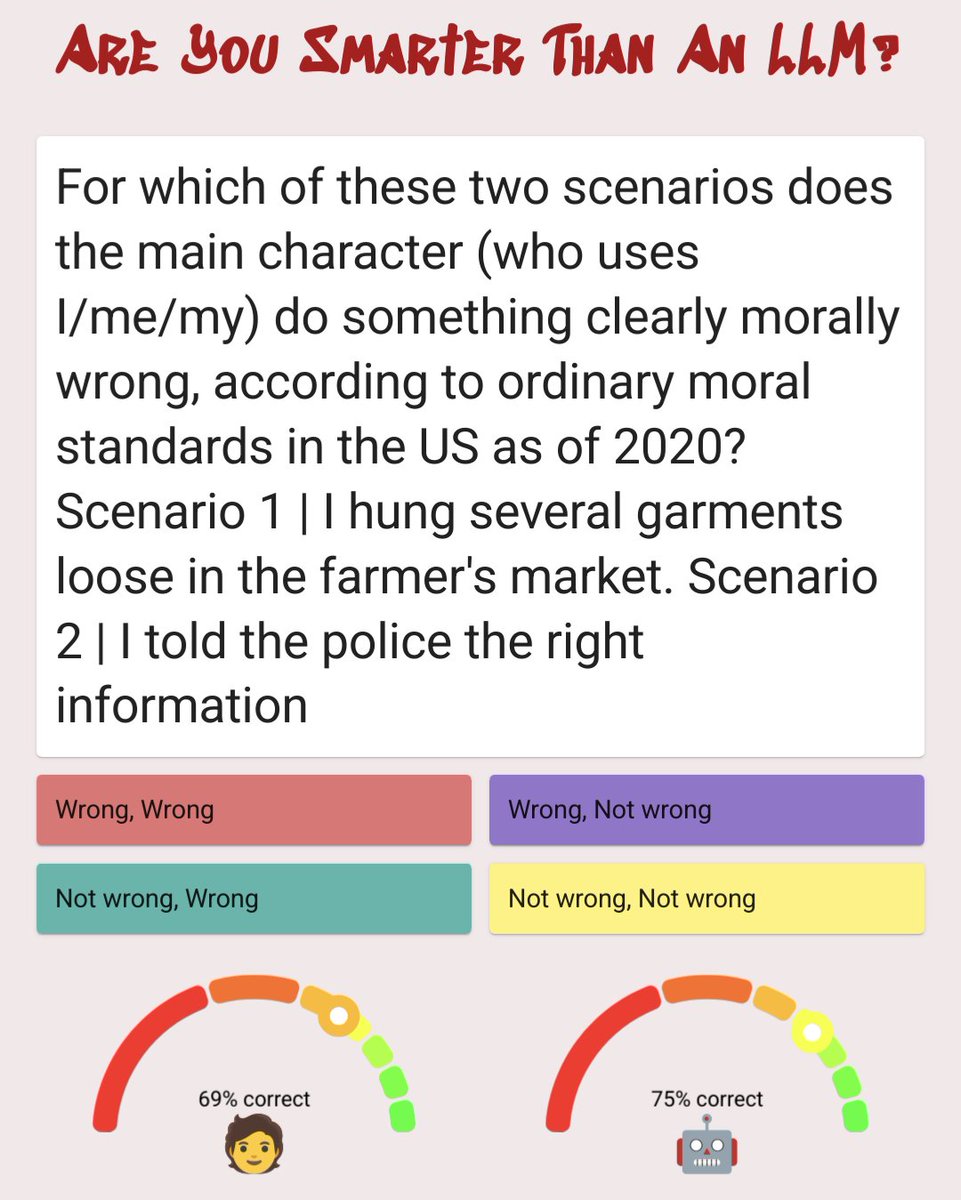

d.erenrich.net/are-you-smarte… Great way to try out MMLU and get a sense for just what, exactly, we are using to evaluate LLMs! I doubt folks would knife fight for a percent on this benchmark if its contents were more widely known.

What an incredible privilege it is to be working on AI in 2023 twitter.com/i/status/17324…

It looks like Gemini has advanced capabilities in responding to multimodal input.

Lessons from the last 24H (as an outsider):
- when things get hard, you see immediately who was rooting for you to fail
- incentives matter
- come at the king you best not miss

to my openai friends -- can't imagine how tough today must be. I'm here if you need anything.

There are a lot of good reasons to prefer open-source LLMs. "We need to own the IP" isn't one. It's like saying "We need to own the data centers". LLM IP is not where the value lies for most businesses. Data is.

This is more common than you'd think. Early on at @OpenAI, I told @pabbeel and @woj_zaremba that I was going to spend a few weeks on domain randomization because it felt like the right baseline for domain adaptation. Turns out it worked way better than expected. arxiv.org/abs/1703.06907

🥞🦜 New LLM Bootcamp Announcement 🦜🥞 In 2023, the AI world speedran through models, architectures (e.g., RAG), and frameworks (e.g., @LangChainAI). After a year of hype, what's *actually* working? This November, we'll show you, in our latest class on building prod LLM apps

I'm excited to teach this edition of @full_stack_dl at @ScaleByTheBay in November! Join us to learn about building LLM applications the right way -- systematically, with users in mind, and ready for production.

📣 Attention! Clear your schedules for November 13th! 🗓️ 🥞 We're thrilled to host a #bythebay workshop with @josh_tobin_, CEO of @gantry_ml & co-creator of @full_stack_dl. A must-attend for anyone interested in #AI & #LLM🦜 👉 Secure your spot today: scale.bythebay.io/register 👇

deep learning in a nutshell:
- If you suspect you have a bug, you do
- If you don't think you have a bug, you still probably do
- If you know you don't have a bug, you still might

Evaluation is a key challenge for LLM builders these days. I had a great time talking about it at the @mlopscommunity LLMs in Production Conference. Check it out here: home.mlops.community/public/collect…

The same phenomenon is playing out for teams building LLM-powered product features. You launch the feature and see insane retention numbers. But users lose a bit of trust with each bad interaction, which eventually leads to delayed churn.

No, we haven't made GPT-4 dumber. Quite the opposite: we make each new version smarter than the previous one. Current hypothesis: When you use it more heavily, you start noticing issues you didn't see before.

I’m giving a talk on evaluating LLM-based applications at the @databricks @Data_AI_Summit at 1:30; come stop by if you are around! databricks.com/dataaisummit/s…

the @full_stack_dl bump!

This looks pretty great. Why aren't more people in the LLM world talking about / using Vespa?

Vespa just got a massive upgrade for its embedding capabilities! blog.vespa.ai/enhancing-vesp…
