Matt Pfeffer

@inIVmatics

Informatics @ Flatiron Health. Yep, my username is a terrible joke.

I have a couple of codes for getting into the other place, in case anyone else is finding X increasingly unpalatable. (I only use feeds here now, but still...) There's only a little health tech and informatics stuff there so far, but you gotta start somewhere, right?

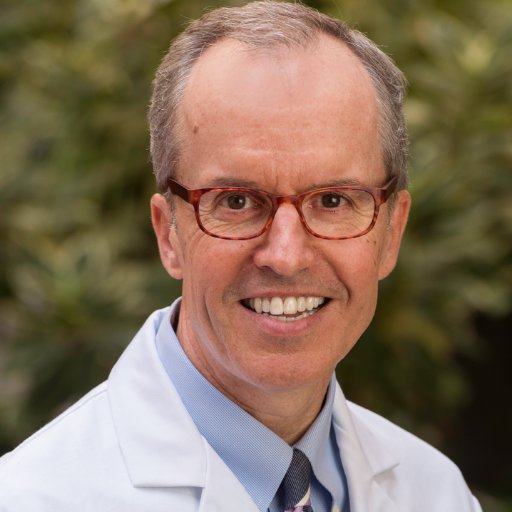

Great thread on Hinton's infamous prediction about AI replacing radiologists: "thinkers have a pattern where they are so divorced from implementation details that applications seem trivial, when in reality, the small details are exactly where value accrues."

I don't talk much about this, but I obtained one of the first FDA approvals in ML + radiology, and it informs much of how I think about AI systems and their impact on the world. If you're a pure technologist, you should read the following. There's so much to unpack for both why…

I recommend this podcast episode for a thankfully sober assessment of how to use AI in medicine (as a knowledge aid, especially for less expert MDs, and as an automatic note taker, to start).

Q: What do you call the person who graduated at the bottom of their medical school class? A: A doctor. On @CogRev_Podcast, @zakkohane raises the common-sense argument for ensuring a higher baseline for doctors everywhere by integrating GPT-4 into clinical medicine.

It obviously matters, because it has implications for how well the models can generalize to never-before-seen inputs and tasks. Serious exacerbation of automation bias can occur if we ascribe reasoning to what is just a minor perturbation of training data.

This thread is fascinating. LLMs with RLHF are incredibly effective problem solvers we can assign tasks to. From a practical perspective, if most humans can’t tell whether GPT-4 is memorizing vs. reasoning, does the distinction even matter?
