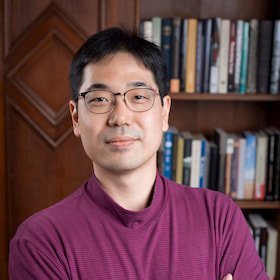

George Papagiannis

@geopgs

PhD in Machine learning for Robotics @imperialcollege | MPhil in Advanced CS from @Cambridge_Uni

Want to teach your robot new tasks from only a single demo? We've just released code for MILES, which we presented at CoRL 2024 last week. Learning is fully automated: you just provide a single demonstration, then sit back and relax! 🍹😴 Code: robot-learning.uk/miles. 🧵👇

Could robots collect and self-label their own data? It would be much easier than manually giving endless demos! New at #CoRL2024: 🦾✌️ MILES: Making Imitation Learning Easy with Self-Supervision Our robot learned to use a key from just one demo! robot-learning.uk/miles More 👇

Can a robot learn a skill with one object grasp, and then perform that skill with an entirely different object grasp? Today at #IROS2024, @geopgs will present our solution based on self-supervised learning! 🤖📷🔨 Oral at 6pm in Room 3. Come along 😀. robot-learning.uk/adapting-skills

If we train a robot to use a grasped object (e.g. a hammer), it's going to fail when the object is grasped in a novel way. In our latest paper, robots can now adapt skills to novel grasps without needing any further demonstrations! #IROS2024 See tinyurl.com/3ye6dyts. More 👇

Amazing stuff by @normandipalo

Excited to introduce Diffusion Augmented Agents (DAAGs)✨. We give an agent control of a diffusion model, so it can create its own *synthetic experience*.🪄 The result is a lifelong agent that can learn new reward detectors and policies, much more efficiently. Here's how. 👇

The future of robotics is in your hands. Literally. Excited to announce our new paper, ✨R+X✨. A person records everyday activities while wearing a camera. A robot passively learns those skills. No labels, no training. Here's how. 👇

Come find us at the RSS Workshop on Lifelong Robot Learning to learn more about how we can learn robotic skills directly from a long, unlabelled human video with zero model training or fine-tuning by leveraging existing Foundation Models!

🌟New paper!🌟 "R+X: Retrieval and Execution from Everyday Human Videos" By using a VLM for retrieval and in-context IL for execution, robots can now learn from unlabelled videos of humans performing tasks. No need to label and train; just *retrieve* and *execute*! More 👇

🤖 Render and Diffuse (R&D) 🤖 It unifies image and action spaces using virtual renders of the robot and uses a diffusion process to iteratively update them until they represent the desired robot actions. #RSS2024 Paper: arxiv.org/pdf/2405.18196 Project: vv19.github.io/render-and-dif…

✨ Introducing Keypoint Action Tokens. 🤖 We translate visual observations and robot actions into a "language" that off-the-shelf LLMs can ingest and output. This transforms LLMs into *in-context, low-level imitation learning machines*. 🚀 Let me explain. 👇🧵

✨Introducing 🦖🤖DINOBot. DINOBot is an imitation learning method designed explicitly around the strengths of Vision Foundation Models to fully leverage them. Thanks to that, it can learn a series of everyday skills with a single demonstration. Learn more. 👇 (ICRA 2024! 🇯🇵)

Tomorrow I will give a talk at @picampusschool covering some recent research on robotics x foundation models. Will cover topics from letting GPT-4 maneuver a robot, to building robotic foundation models like RT-X. 4PM CET. Online, register below. pischool.link/robotics

Dive into the future of #AI and #Robotics with Pi School's upcoming tech talk, Robotics x Foundation Models! Join us on Dec 14, 2023, at 4:00 PM CET for an illuminating session with @normandipalo, an AI and robotics expert. Discover the potential of merging digital intelligence…

We’ve been showing live demos of our one-shot imitation learning method, DOME, at the #ICRA2023 exhibition. Today is the last chance to catch it, until 5pm, at stand F20. Below is a sneak preview of @GeorgePapagian1 showing how our robot can make toast! robot-learning.uk/dome
