Similar User

@YuekunDai

@ziqi_huang_

@zhang_yuanhan

@BoLi68567011

@davmoltisanti

@wjqdev

@xtl994

@ldkong1205

@JiahaoXie3

@GuangcongW

@xinntao

@hongfz16

@yuhangzang

@kelvinckchan

@HaoningTimothy

github.com/Q-Future/A-Ben… “Kindly blind at weird generations?” “Sadly yes.” We demonstrated that LMMs, though widely considered as better T2I evaluators, are in-turn especially less sensitive on common types of T2I-generated errors. Arxiv: arxiv.org/pdf/2406.03070

Our @gradio demo for Q-Align is embedded in our homepage! Visit Q-Align.github.io! It can: - Rate a score for an image or a video. - Predict the probabilities of rating levels!

Q-Instruct + TexForce + SD-Turbo. Making better quality AIGC (latter for each pair) faster.

Improve sd-turbo in one line: PeftModel.from_pretrained(pipe.text_encoder, 'chaofengc/sd-turbo_texforce') Arxiv: arxiv.org/abs/2311.15657 Github: github.com/chaofengc/TexF… huggingface: huggingface.co/chaofengc/sd-t…

The Visual Scorer API from Q-Instruct: github.com/Q-Future/Q-Ins…

The HF space is also updated (corresponding to the newer version): huggingface.co/spaces/teowu/Q… (still the link)

The HF space is also updated (corresponding to the newer version): huggingface.co/spaces/teowu/Q… (still the link)

github.com/Q-Future/Q-Ins… We release a better version of weights (totally reproducible) for Q-Instruct!

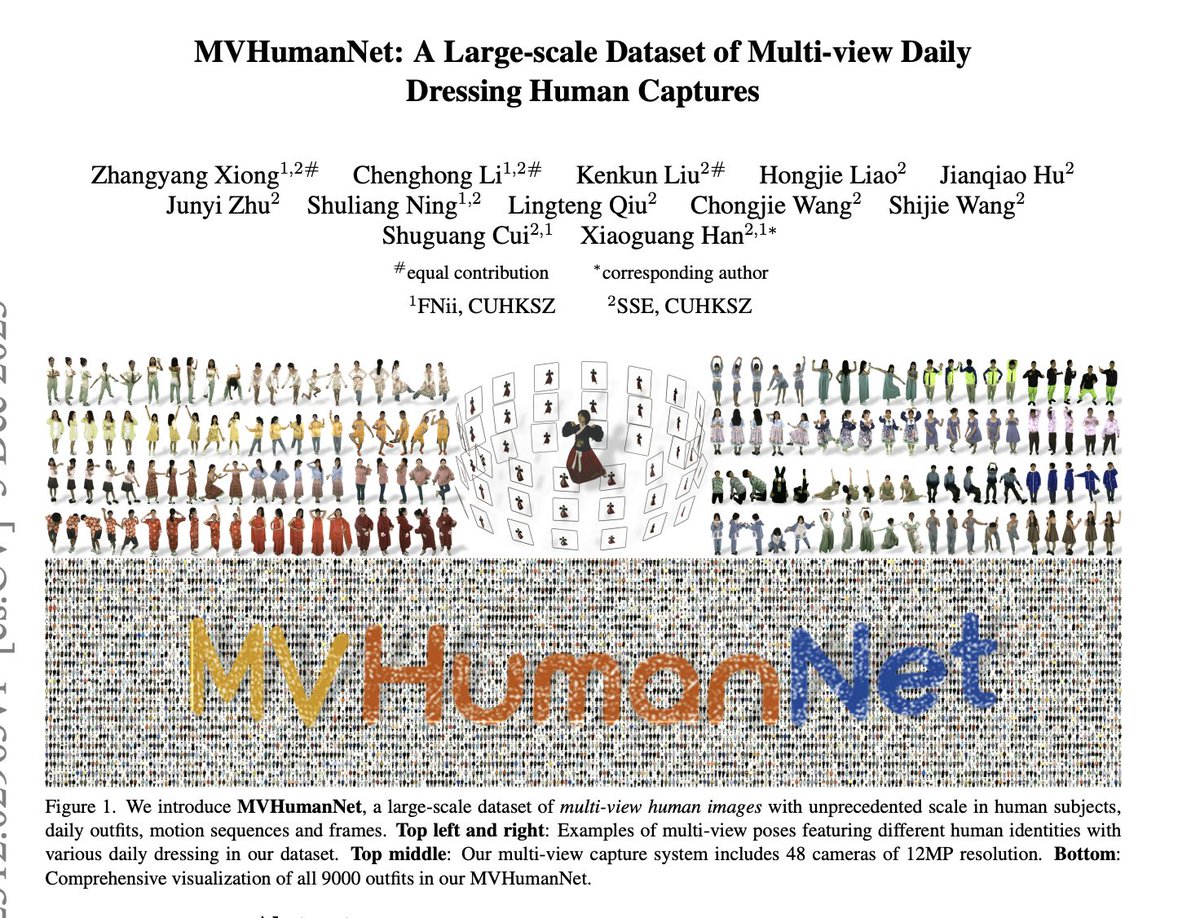

Thanks @_akhaliq for the sharing. Check out our MVHumanNet, the largest-to-date dataset of multi-view human captures, with 4,500 human identities and 9,000 daily dressing. We plan to release it in the next months. Following our MVImgNet, hope it can help.

MVHumanNet: A Large-scale Dataset of Multi-view Daily Dressing Human Captures paper page: huggingface.co/papers/2312.02… In this era, the success of large language models and text-to-image models can be attributed to the driving force of large-scale datasets. However, in the realm of…

Thank you @_akhaliq for sharing our work😄!

Q-Instruct: Improving Low-level Visual Abilities for Multi-modality Foundation Models paper page: huggingface.co/papers/2311.06… Multi-modality foundation models, as represented by GPT-4V, have brought a new paradigm for low-level visual perception and understanding tasks, that can…

Q-Instruct: Improving Low-level Visual Abilities for Multi-modality Foundation Models paper page: huggingface.co/papers/2311.06… Multi-modality foundation models, as represented by GPT-4V, have brought a new paradigm for low-level visual perception and understanding tasks, that can…

Our evaluation datasets for Q-Bench huggingface.co/papers/2309.14… are released on huggingface! Specifically, the datasets are splitted into dev set (fully accessible) and test set (image+questions accessible, correct answer hidden), and the labels will also be released soon!

Our team at Google Research is hiring student researcher for topics related to text-to-image synthesis and editing. Please write to me at kelvinckchan@google.com or drop me a DM if you are interested. (Retweets are welcomed!)

We're pleased to see our #CodeFormer is integrated into #stablediffusion webui 🥳🥳 Try out our demos on github.com/sczhou/CodeFor… to fix your AI-generated arts. #stablediffusionart #aiart #aiartist

If you’re running a local instance of #stablediffusion WebUI, highly recommend you go check this github every few days as it’s constantly updated. For example, it now has both GFPGAN and CodeFormer builtin as face fixing options. github.com/AUTOMATIC1111/…

Our newest system DALL·E 2 can create realistic images and art from a description in natural language. See it here: openai.com/dall-e-2/

United States Trends

- 1. Travis Hunter 15,7 B posts

- 2. Clemson 7.176 posts

- 3. Colorado 70,7 B posts

- 4. Arkansas 28,3 B posts

- 5. Quinn 14,7 B posts

- 6. Dabo 1.216 posts

- 7. Cam Coleman 1.062 posts

- 8. Isaac Wilson N/A

- 9. #HookEm 3.194 posts

- 10. Northwestern 6.838 posts

- 11. #SkoBuffs 4.272 posts

- 12. $CUTO 8.261 posts

- 13. Sean McDonough N/A

- 14. Sark 1.948 posts

- 15. Tulane 2.807 posts

- 16. #NWSL N/A

- 17. Zepeda 1.813 posts

- 18. Mercer 4.278 posts

- 19. Pentagon 94,3 B posts

- 20. #iubb N/A

Who to follow

-

Yuekun Dai

Yuekun Dai

@YuekunDai -

Ziqi Huang

Ziqi Huang

@ziqi_huang_ -

Yuanhan (John) Zhang

Yuanhan (John) Zhang

@zhang_yuanhan -

Li Bo

Li Bo

@BoLi68567011 -

Davide Moltisanti

Davide Moltisanti

@davmoltisanti -

Jiaqi Wang

Jiaqi Wang

@wjqdev -

Xiangtai Li

Xiangtai Li

@xtl994 -

Lingdong Kong

Lingdong Kong

@ldkong1205 -

Jiahao Xie

Jiahao Xie

@JiahaoXie3 -

Guangcong Wang

Guangcong Wang

@GuangcongW -

Xintao Wang

Xintao Wang

@xinntao -

Fangzhou Hong

Fangzhou Hong

@hongfz16 -

Yuhang Zang

Yuhang Zang

@yuhangzang -

Kelvin Chan

Kelvin Chan

@kelvinckchan -

Haoning Wu

Haoning Wu

@HaoningTimothy

Something went wrong.

Something went wrong.