Ansh Shah

@baymax3009Research Associate at RRC, IIIT Hyderabad. Previously undergrad at BITS Pilani | Interested in Robot Learning

Similar User

@_mob_mob_mob_

@AaronTrashman

@Muchindamuhombe

@CharlesWDean1

@sesegere

@Jr74Ski

@marvin_whiteeee

@David55th

@quentin33287364

@mullar_22

@realroshan2001

@CryptoPayel

@Tenrai_44

@Lino_Banks

@Sbbwlover256

Check out @binghao_huang 's new touch censor from CoRL24!

Want to use tactile sensing but not familiar with hardware? No worries! Just follow the steps, and you’ll have a high-resolution tactile sensor ready in 30 mins! It’s as simple as making a sandwich! 🥪 🎥 YouTube Tutorial: youtube.com/watch?v=8eTpFY… 🛠️ Open Source & Hardware…

Excited to finally share Generative Value Learning (GVL), my @GoogleDeepMind project on extracting universal value functions from long-context VLMs via in-context learning! We discovered a simple method to generate zero-shot and few-shot values for 300+ robot tasks and 50+…

Our seminal paper (yes, I do believe this is transformative to the field) "Spatial Cognition from Egocentric Video: Out of Sight not Out of Mind" is accepted @3DVconf #3DV2025 Camera ready soon Congrats 2 great coauthors @plizzari38126 @goelshbhm Toby @JacobChalkie @akanazawa

🆕on ArXiv Out of Sight, Not Out of Mind Spatial Cognition from Egocentric Video. dimadamen.github.io/OSNOM/ arxiv.org/abs/2404.05072 3D tracking active objects using observations captured through egocentric camera. Objects are tracked while in hand, from cupboards and into drawers

Wrote a blogpost on using image and video diffusion models to "draw actions" Summary: - LLMs can model arbitrary sequences, diffusion models can generate arbitrary patterns - Images can serve as a common format across modalities like vision, audio, actions Link below

Very happy to start sharing our work at Pi 🤖❤️ - a 3B pre-trained generalist model trained on 8+ robot platforms - a post-training recipe that allows robots to do dexterous, long-horizon tasks physicalintelligence.company/blog/pi0 What's exciting isn't laundry, but the recipe- a short 🧵

Not every foundation model needs to be gigantic. We trained a 1.5M-parameter neural network to control the body of a humanoid robot. It takes a lot of subconscious processing for us humans to walk, maintain balance, and maneuver our arms and legs into desired positions. We…

Diffusion-based approach beats autoregressive models at solving puzzles and planning 🤖 Original Problem: Autoregressive LLMs struggle with complex reasoning and long-term planning tasks despite their impressive capabilities. They have inherent difficulties maintaining global…

Last Sunday, we competed in the Vision Assistance Race at the @cybathlon 2024—the "cyber Olympics" designed to push the boundaries of assistive technology. In this race, our system guided a blind participant through everyday tasks such as walking along a sidewalk, sorting colors,…

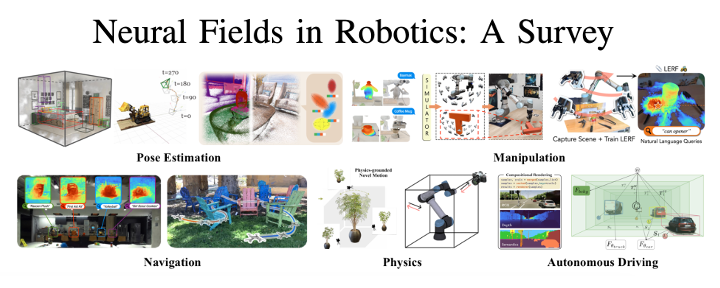

How do we represent 3D world knowledge for spatial intelligence in next-generation robots? We recently wrote an extensive survey paper on this emerging topic, covering recent state-of-the-art! 🦾 🚀 Check it out below. Feedback/Suggestions welcome! 📖arXiv:…

📢 Excited to share our new paper with @fabreetseo: "Beyond Position: How Rotary Embeddings Shape Representations and Memory in Autoregressive Transformers"! arxiv.org/abs/2410.18067 Keep reading to find out how RoPE affects Transformer models beyond just positional encoding 🧵

Pretraining can transform RL, but it might need rethinking how to pretrain with RL on unlabeled data to bootstrap downstream exploration. In our new work, we show how to accomplish this with unsupervised skills and exploration.

Latest work on leveraging prior trajectory data with *no* reward label to accelerate online RL exploration! Our method leverages our prior work (ExPLORe) and skill pretraining to achieve better sample efficiency on a range of spare-reward tasks than all prior approaches!

Sequence models have skyrocketed in popularity for their ability to analyze data & predict what to do next. MIT’s "Diffusion Forcing" method combines the strengths of next-token prediction (like w/ChatGPT) & video diffusion (like w/Sora), training neural networks to handle…

state space models are super neat & interesting, but i have never seen any evidence that they’re *smarter* than transformers - only more efficient any architectural innovation that doesn’t advance the pareto frontier of intelligence-per-parameter is an offramp on the road to AGI

Sirui's new work presents a nice system design with a user-friendly interface, for data collection without a robot. Collecting robot data without robots but with humans only is the right way to go.

How can we collect high-quality robot data without teleoperation? AR can help! Introducing ARCap, a fully open-sourced AR solution for collecting cross-embodiment robot data (gripper and dex hand) directly using human hands. 🌐:stanford-tml.github.io/ARCap/ 📜:arxiv.org/abs/2410.08464

Mechazilla has caught the Super Heavy booster!

A perfect real-world example of equivariance haha

Cats are invariant under SO(3) transformations! 😼

The 3D vision community really hates the bitter lesson. Dust3r is what you get when you take the lesson seriously.

The paper contains many ablation studies on various ways to use the LLM backbone 👇🏻 🦩 Flamingo-like cross-attention (NVLM-X) 🌋 Llava-like concatenation of image and text embeddings to a decoder-only model (NVLM-D) ✨ a hybrid architecture (NVLM-H)

United States Trends

- 1. Mike 1,81 Mn posts

- 2. Serrano 235 B posts

- 3. #NetflixFight 69,9 B posts

- 4. Canelo 15,8 B posts

- 5. #netflixcrash 15,3 B posts

- 6. Father Time 10,6 B posts

- 7. Logan 76,1 B posts

- 8. Rosie Perez 14,5 B posts

- 9. He's 58 22,9 B posts

- 10. Boxing 287 B posts

- 11. Shaq 15,6 B posts

- 12. #buffering 10,7 B posts

- 13. ROBBED 100 B posts

- 14. My Netflix 81,6 B posts

- 15. Roy Jones 7.039 posts

- 16. Ramos 69,9 B posts

- 17. Tori Kelly 5.055 posts

- 18. Cedric 21,4 B posts

- 19. Barrios 50,3 B posts

- 20. Gronk 6.524 posts

Who to follow

-

м о в

м о в

@_mob_mob_mob_ -

Aaron Jetson

Aaron Jetson

@AaronTrashman -

Lennon

Lennon

@Muchindamuhombe -

Charles W. Dean

Charles W. Dean

@CharlesWDean1 -

Oigo©

Oigo©

@sesegere -

JonSkiJr74

JonSkiJr74

@Jr74Ski -

Mr White💯

Mr White💯

@marvin_whiteeee -

David🇨🇦

David🇨🇦

@David55th -

African

African

@quentin33287364 -

Mercy From Above 💫

Mercy From Above 💫

@mullar_22 -

Roshan Kharel

Roshan Kharel

@realroshan2001 -

Payel💐

Payel💐

@CryptoPayel -

Tenrai 天雷

Tenrai 天雷

@Tenrai_44 -

Lino Banks🎲

Lino Banks🎲

@Lino_Banks -

I love big women

I love big women

@Sbbwlover256

Something went wrong.

Something went wrong.