Similar User

@tiantiaf

@zhezeng0908

@litian0331

@chachaachen

@ybnbxb

@YuChicago1234

@YingJin531

@kexun_zhang

@funandgames333

@wzihao12

@lmcui

@cheng_pengyu

@statsCong

@XuxingChen3

@yibophd

I am wondering how many tokens we need for Meow-Language :)

can a neural network learn to walk as a physical object in a physics simulation? here I train walking neural nets with an evolutionary algorithm. The input nodes/feet are activated by sine waves at learned phases & connections between two neurons extend based on their difference

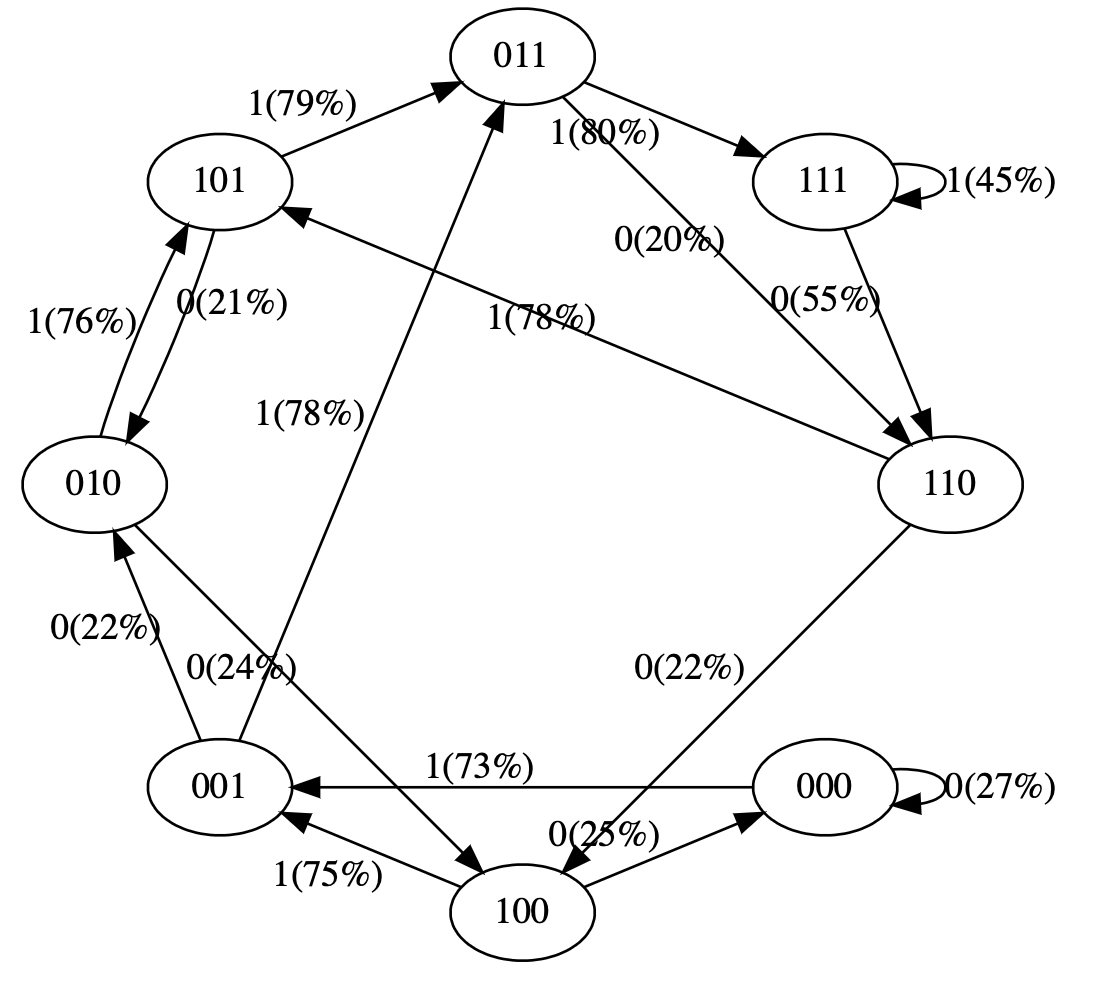

This is a baby GPT with two tokens 0/1 and context length of 3, viewing it as a finite state Markov chain. It was trained on the sequence "111101111011110" for 50 iterations. The parameters and the architecture of the Transformer modify the probabilities on the arrows. E.g. we…
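To make the Markov-chain view concrete, here is a minimal sketch that counts, for every 3-token context in the training sequence, which token follows it. This is a plain frequency table, not the Transformer from the post; the function names `transition_counts` and `transition_probs` are hypothetical, and only full-length contexts are counted, which is an assumption of this sketch.

```python
from collections import Counter, defaultdict

def transition_counts(seq, context_len=3):
    """Count next-token occurrences for each full-length context in seq."""
    counts = defaultdict(Counter)
    for i in range(len(seq) - context_len):
        ctx = seq[i:i + context_len]   # current state: last `context_len` tokens
        nxt = seq[i + context_len]     # token observed right after that state
        counts[ctx][nxt] += 1
    return counts

def transition_probs(counts):
    """Normalize the counts into empirical transition probabilities."""
    return {
        ctx: {tok: n / sum(c.values()) for tok, n in c.items()}
        for ctx, c in counts.items()
    }

counts = transition_counts("111101111011110")
probs = transition_probs(counts)

# Each arrow goes from a context to the context shifted by one token.
for ctx in sorted(probs):
    for tok, p in sorted(probs[ctx].items()):
        print(f"{ctx} -> {ctx[1:] + tok}: {p:.2f}")
```

With two tokens and a context of 3 there are 8 possible states, but only 4 of them (011, 101, 110, 111) occur in this sequence. A trained model still assigns probabilities to the arrows leaving the unseen states, which a count table like this cannot do.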

youtube.com/watch?v=GSV5UD… Top Comment: Lesson: don't argue once you become parents, or your kids will install Arch.

Just wondering why all the Linux mail clients are so old-fashioned. They are powerful and rich in plugins, but ugly.

Want a remote Data Science / ML job? Here are 5 jobs that are remote and pay in USD. -- Thread --

📢 A 🧵on the future of NLP model inputs. What are the options and where are we going? 🔭 1. Task-specific finetuning (FT) 2. Zero-shot prompting 3. Few-shot prompting 4. Chain of thought (CoT) 5. Parameter-efficient finetuning (PEFT) 6. Dialog [1/]

The term 'spurious correlations' is often used informally in NLP to denote any undesirable feature-label correlations. But are all spurious features alike? #EMNLP2022 paper tries to address this question through a causal lens - arxiv.org/abs/2210.14011 (w/ Xiang & @hhexiy)
