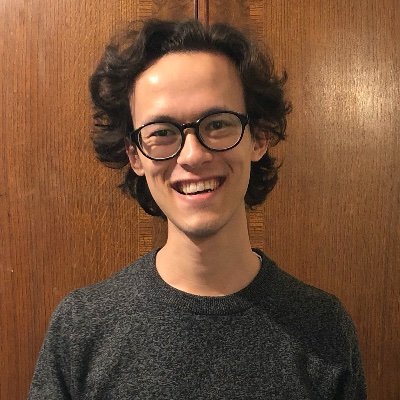

Darren

@SaggitariusA

Startup fiend. Co-founder @BeyondMathLtd. Prev: Evi; YouView; Grapeshot; eBay; HomeX. Suit yourself, it's all good. Hello there, Universe.


In situations where hyperthreading is slower than physical core usage, I'll take the 50.2% CPU usage as an overachievement. #hpc

Understanding the physical world is what I've been working on since the end of 2021. I reckon we're miles ahead of everyone else. It's just not obvious yet how far. We're keeping it on the down low. But not for long...

An article in the Wall Street Journal in which I express my opinion on the limitations of LLMs and on the potential power of new architectures capable of understanding the physical world, having persistent memory, reasoning and planning: four features of intelligent behavior that…

π has infinitely many digits, so at some point the entirety of π should show up again inside it (well, almost). Right? Random thought to wake up to at 6:28. ^_^

TechCrunch Minute: AI could help design and test F1 cars faster: Formula One teams are looking at a startup called BeyondMath to bring their car construction to the next level. BeyondMath is working in the field of computational fluid… dlvr.it/TCFGwY #AI #AInews #AItips

I sat down with TechCrunch a couple of weeks ago to talk about progress at @BeyondMathLtd

Beyond Math’s ‘digital wind tunnel’ puts a physics-based AI simulation to work on F1 cars tcrn.ch/3MbJ5YH

Congrats on the newborn to an old friend, Bobby Tables!

SQL injection-like attack on LLMs with special tokens The decision by LLM tokenizers to parse special tokens in the input string (<s>, <|endoftext|>, etc.), while convenient looking, leads to footguns at best and LLM security vulnerabilities at worst, equivalent to SQL injection…

Yep. But just for context, remember that memorizing the Internet in a way that can be arbitrarily queried is a super useful tool. Let's not gloss over what we have. But like Yann says, it ain't doing magic. It's that person you know who's incredible at pub quizzes, but 100%.

Sometimes, the obvious must be studied so it can be asserted with full confidence: - LLMs cannot answer questions whose answers are not in their training set in some form, - they cannot solve problems they haven't been trained on, - they cannot acquire new skills our…

Slick double stacking during quali by @visacashapprb. I had the best seat in the house yesterday. #BritishGP #F1 #Silverstone #RB

Just watch the goddamn video. Don't extrapolate beyond what @geoffreyhinton literally says unless you know something about this tech that he doesn't. Definitely don't extrapolate from what @elonmusk or @GaryMarcus say either. They both say nothing. If you think they do, you're…

There is a *huge* fallacy of extrapolation in the Hinton extract below that @elonmusk is hyping. @geoffreyhinton says we know for sure that if we make LLMs bigger they will get better. We *don't* actually “know” that. It's been almost two years since GPT-4 was trained, nobody…

FC keeping it real. Keep it real, people.

I'm partnering with @mikeknoop to launch ARC Prize: a $1,000,000 competition to create an AI that can adapt to novelty and solve simple reasoning problems. Let's get back on track towards AGI. Website: arcprize.org ARC Prize on @kaggle: kaggle.com/competitions/a…

Cloud providers seem to price their GPUs such that if you pay for dedicated instances, within well under a year you'd have spent the same if you bought the GPU (and supporting HW) and paid to colo them. What's that all about? Seems crazy.

ML is at its core a software engineering exercise, not unlike any other. Sure maybe it relies on /dev/urandom more than most feel comfortable with. Really cool seeing the progress of this.

🔥llm.c update: Our single file of 2,000 ~clean lines of C/CUDA code now trains GPT-2 (124M) on GPU at speeds ~matching PyTorch (fp32, no flash attention) github.com/karpathy/llm.c… On my A100 I'm seeing 78ms/iter for llm.c and 80ms/iter for PyTorch. Keeping in mind this is fp32,…

It's pretty fun seeing Richard Stallman chiming in so much on the xz situation. Not on social media, but on the relevant DLs that only devs who work on the relevant packages are on (or nerds like me who accidentally spent time on build systems). The conventions of OSS…

Wrote my first community note. It didn't spoil the joke. Top comments got better (smarter/funnier). Seen 2.7M+ times in 24 hours. Happy days.
