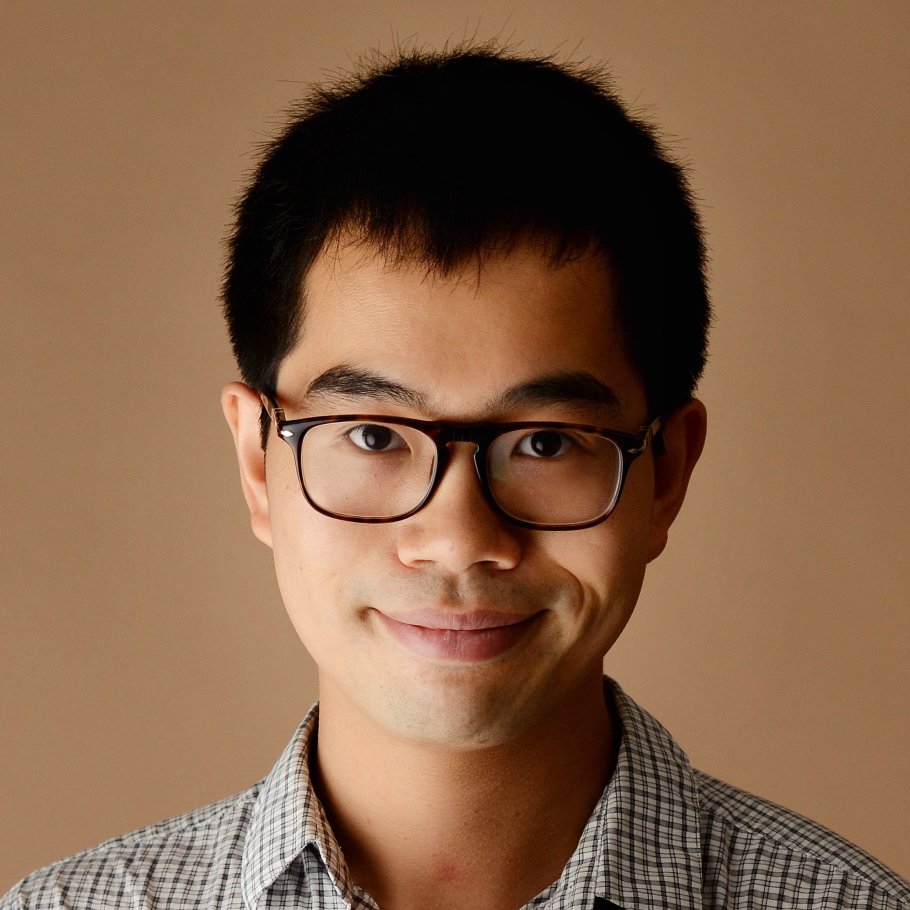

Yingru Li

@RichardYRLiAI

RL, LLMs, Data Science | PhD @cuhksz | ex @MSFTResearch @TencentGlobal | On Job Market

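Similar users

- Zhiyuan Li (@zhiyuanli_)
- Yiping Lu (@2prime_PKU)
- Lin Yang (@lyang36)
- Song Mei (@Song__Mei)
- Zhuoran Yang (@zhuoran_yang)
- Zhaoran Wang (@zhaoran_wang)
- Ying Jin (@YingJin531)
- Dongruo Zhou (@DongruoZ)
- Han Zhao (@hanzhao_ml)
- Pan Xu (@iampanxu)
- Lihong Li (@LihongLi20)
- Kaixuan Huang (@KaixuanHuang1)
- Ruoyu Sun (@RuoyuSun_UI)
- Spencer Frei (@sfrei_)
- Jingfeng Wu (@uuujingfeng)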

Discover theoretical advancements and applications in #GenAI Reasoning & Agents at #INFORMS2024! 🚀 Sessions on: #LLM Agents, RL, Exploration, Alignment, Gene-Editing, Math Reasoning & more! Oct 20 & 21, Summit-342, Seattle Convention Center. @Zanette_ai @RichardYRLi…

Heading to INFORMS for the first time! On Monday, I will be giving a tutorial in the Applied Probability Society distinguished lecture session on our line of work on the statistical complexity of RL and Decision-Estimation Coefficient. Come say hi if you are around!

I'll be at #INFORMS2024 next week, giving a talk on Sunday and will be around Seattle for a few days afterwards! Hit me up if you'd like to chat :).

I will be giving a talk at #INFORMS2024 (Seattle) about CRISPR-GPT, a semi-automatic LLM multi-agent framework that speeds up CRISPR gene-editing experimental designs. I am extremely passionate about the future of scientific research agents! Check out our v1 preprint at…

Our team's LLM ensemble method ranked first on AlpacaEval 2.0!🚀 joint work with @WenzheLiTHU, Yong Lin, @xiamengzhou More details will be released soon.

1/ 🚀 Exciting news to introduce another amazing open-source project! Introducing Open O1, a powerful alternative to proprietary models like OpenAI's O1! 🤖✨ Our mission is to empower everyone with advanced AI capabilities. Stay tuned for more! Homepage: Open-Source O1…

Excited to share our paper "Why Transformers Need Adam: A Hessian Perspective", accepted at @NeurIPSConf. Intriguing question: Adam significantly outperforms SGD on Transformers, including LLM training (Fig 1). Why? Our explanation: 1) Transformer’s block-Hessians are…

🚀 Excited to share our paper "Why Transformers Need Adam: A Hessian Perspective", which is accepted at #NeurIPS2024! We delve into why SGD lags behind Adam, uncovering the "block heterogeneity" in Transformers' Hessian spectra that Adam navigates more adeptly. 🧠💡 Our…
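
The two posts above argue that Transformer Hessians are block-heterogeneous and that a single SGD learning rate copes poorly with this. As a toy illustration only (a two-coordinate diagonal quadratic, not the paper's experiments and not Adam itself), the sketch below compares one shared step size against idealized per-block step sizes of 1/curvature. The curvatures 100 and 1, the step count, and the helper name `gd_on_diag_quadratic` are hypothetical choices for illustration.

```python
from typing import List


def gd_on_diag_quadratic(h: List[float], lrs: List[float],
                         x0: List[float], steps: int) -> List[float]:
    """Gradient descent on f(x) = 0.5 * sum_i h[i] * x[i]**2,
    using step size lrs[i] for coordinate (block) i."""
    x = list(x0)
    for _ in range(steps):
        # The gradient of 0.5 * h * x**2 is h * x.
        x = [xi - lr * hi * xi for xi, hi, lr in zip(x, h, lrs)]
    return x


h = [100.0, 1.0]  # hypothetical curvatures of a "sharp" and a "flat" block
x0 = [1.0, 1.0]
steps = 50

# One shared step size is capped by the sharpest block (stability needs lr < 2/100).
shared = gd_on_diag_quadratic(h, [0.01, 0.01], x0, steps)
# Idealized per-block step sizes, each scaled by 1/curvature.
per_block = gd_on_diag_quadratic(h, [1.0 / hi for hi in h], x0, steps)

print("shared lr:", shared)        # flat block still far from 0
print("per-block lr:", per_block)  # both blocks at 0
```

With the shared rate, the flat block shrinks only by a factor of 0.99 per step (about 0.6 after 50 steps), while per-block scaling removes the mismatch. Adam approximates such per-coordinate scaling from gradient statistics rather than from known curvatures.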

I gave my first guest lecture today in a grad course on LLMs as a (soon-to-be) adjunct prof at McGill. Putting the slides here, maybe useful to some folks ;) drive.google.com/file/d/1komQ7s…

Great keynote by David Silver, arguing that we need to re-focus on RL to get out of the LLM Valley @RL_Conference

Super excited to finally share what I have been working on at OpenAI! o1 is a model that thinks before giving the final answer. In my own words, here are the biggest updates to the field of AI (see the blog post for more details): 1. Don’t do chain of thought purely via…

We're releasing a preview of OpenAI o1—a new series of AI models designed to spend more time thinking before they respond. These models can reason through complex tasks and solve harder problems than previous models in science, coding, and math. openai.com/index/introduc…

Diversity is beneficial for test-time compute😃 Llama-3-8B SFT-tuned models can easily achieve 1) ~90% accuracy on math reasoning task GSM8K 2) ~80% pass-rate on code generation task without domain-specific data! 🔥

DeepSeek-Prover-V1.5: Harnessing Proof Assistant Feedback for Reinforcement Learning and Monte-Carlo Tree Search > New SOTA: 63.5% on miniF2F (high school) & 25.3% on ProofNet (undergrad) > Introduces RMaxTS: Novel MCTS for diverse proof generation > Features RLPAF:…

RLHF training involves both generation (of long sequences) and inference (calculating log-probability). 🧠📝 The straightforward implementation is slow. 🐢 ReaL provides an efficient implementation of RL algorithms like ReMax and PPO. 🚀🔥 github.com/openpsi-projec… This…

I couldn't be prouder of my colleagues at the @UAlberta! The work led by @s_dohare, in collaboration w/ J. F. H.-Garcia, @LanceLan3, @rahman_parash, @rupammahmood, & @RichardSSutton on continual learning and loss of plasticity is now published at @Nature! nature.com/articles/s4158…

The 1st RL conference concluded a week ago, but I think it's still worth sharing a powerful insight from RL pioneer David Silver: While RL may not be as hyped in the LLM era, it could still be the key to achieving superhuman intelligence (Figure 1). Silver emphasized that while…

LLaMA-Factory now incorporates Adam-mini! 27% total memory reduction when fine-tuning Qwen-1.5B. To run it immediately: github.com/hiyouga/LLaMA-…

🚀We've integrated the Adam-mini optimizer into LLaMA-Factory, slashing the memory footprint of full-finetuning Qwen2-1.5B from 33GB to 24GB! 🔥 Image source: github.com/zyushun/Adam-m…
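
Assuming the two Adam-mini posts describe the same full fine-tuning setup, the footprints reported here are consistent with the 27% reduction quoted earlier:

$$
\frac{33\,\text{GB} - 24\,\text{GB}}{33\,\text{GB}} = \frac{9}{33} \approx 0.273 \approx 27\%
$$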

I'm a scientist working on creating better reward models for agents, and I disagree with the main point of this post. Not only is RL with a reward you can't totally trust still RL, but I would argue it is the RL we should do research on. Yes, without any doubt RL maximally shines…

# RLHF is just barely RL

Reinforcement Learning from Human Feedback (RLHF) is the third (and last) major stage of training an LLM, after pretraining and supervised finetuning (SFT). My rant on RLHF is that it is just barely RL, in a way that I think is not too widely…

We’re extremely excited to announce the NeurIPS Workshop on Bayesian Decision-making and Uncertainty: from probabilistic and spatiotemporal modeling to sequential experiment design! This will take place at NeurIPS 2024, in Vancouver, BC, Canada, either on December 14th or 15th.

At #ISMP2024, today at 2pm in 510C, I will talk about the adaptive Barzilai-Borwein method. It is a line-search-free, parameter-free gradient method and a very simple modification of the BB method. We prove an O(1/k) convergence rate for general convex functions.
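
The adaptive modification itself is not spelled out in the post, so the sketch below shows only the classic Barzilai-Borwein step it builds on: α_k = ⟨s, s⟩ / ⟨s, y⟩ with s = x_k − x_{k−1} and y = ∇f(x_k) − ∇f(x_{k−1}), then x_{k+1} = x_k − α_k ∇f(x_k). The fixed first step `alpha0`, the fallback to `alpha0` when ⟨s, y⟩ ≤ 0, the stopping tolerance, and the test quadratic are assumptions for illustration, not the talk's algorithm.

```python
from typing import Callable, List

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def bb_gradient_descent(grad: Callable[[Vector], Vector], x0: Vector,
                        alpha0: float = 1e-3, iters: int = 100,
                        tol: float = 1e-10) -> Vector:
    """Classic (non-adaptive) Barzilai-Borwein gradient method with the
    'long' step alpha_k = <s, s> / <s, y>; no line search."""
    x_prev = list(x0)
    g_prev = grad(x_prev)
    # First iteration: no curvature pair (s, y) yet, so take a fixed small step.
    x = [xi - alpha0 * gi for xi, gi in zip(x_prev, g_prev)]
    for _ in range(iters):
        g = grad(x)
        if dot(g, g) ** 0.5 < tol:  # stop once the gradient is tiny
            break
        s = [a - b for a, b in zip(x, x_prev)]  # change in iterate
        y = [a - b for a, b in zip(g, g_prev)]  # change in gradient
        sy = dot(s, y)
        # BB step from the secant pair; fall back to alpha0 if curvature is not positive.
        alpha = dot(s, s) / sy if sy > 0 else alpha0
        x_prev, g_prev = x, g
        x = [xi - alpha * gi for xi, gi in zip(x, g)]
    return x


# Usage: minimize f(x) = 0.5*(x1^2 + 10*x2^2) - (x1 + 2*x2); the minimizer is (1, 0.2).
x_star = bb_gradient_descent(lambda x: [x[0] - 1.0, 10.0 * x[1] - 2.0], [0.0, 0.0])
print(x_star)
```

The step size comes entirely from the last secant pair, which is why no line search or tuned learning rate is needed; the trade-off is that plain BB iterates are non-monotone in f.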