May Fung

@May_F1_CSE AP @HKUST | Affiliated Appointment @MIT 🦫 (upcoming)

How can we better unlock LLM reasoning ability? How does code training steer LLMs to produce structured intermediate steps & self-improve? New Year, New Paper 🚀 Check out our systematic survey: How Code Empowers LLMs to Serve as Intelligent Agents arxiv.org/abs/2401.00812

UIUC-NLP @ EMNLP2024 - it was especially wonderful to see all alums!

Arrived in Miami for #EMNLP2024! 🌴🤩 Excited to present our recent work, 🎆MACAROON🎆 (self-iMaginAtion for ContrAstive pReference OptimizatiON), for enhancing LVLM knowledge boundary awareness and personalization while mitigating hallucination. Data & Code:…

Is your Vision-Language Model really helpful at all times? Can we instruct them to interact with users during conversations to avoid hallucinations or biased responses? 🍰Take some bites of PIE and MACAROON! We present a benchmark to evaluate LVLMs’ proactive engagement…

Working Talk: Speculations on Test-Time Scaling Slides: github.com/srush/awesome-… (A lot of smart folks thinking about this topic, so I would love any feedback.) Public Lecture (tomorrow): simons.berkeley.edu/events/specula…

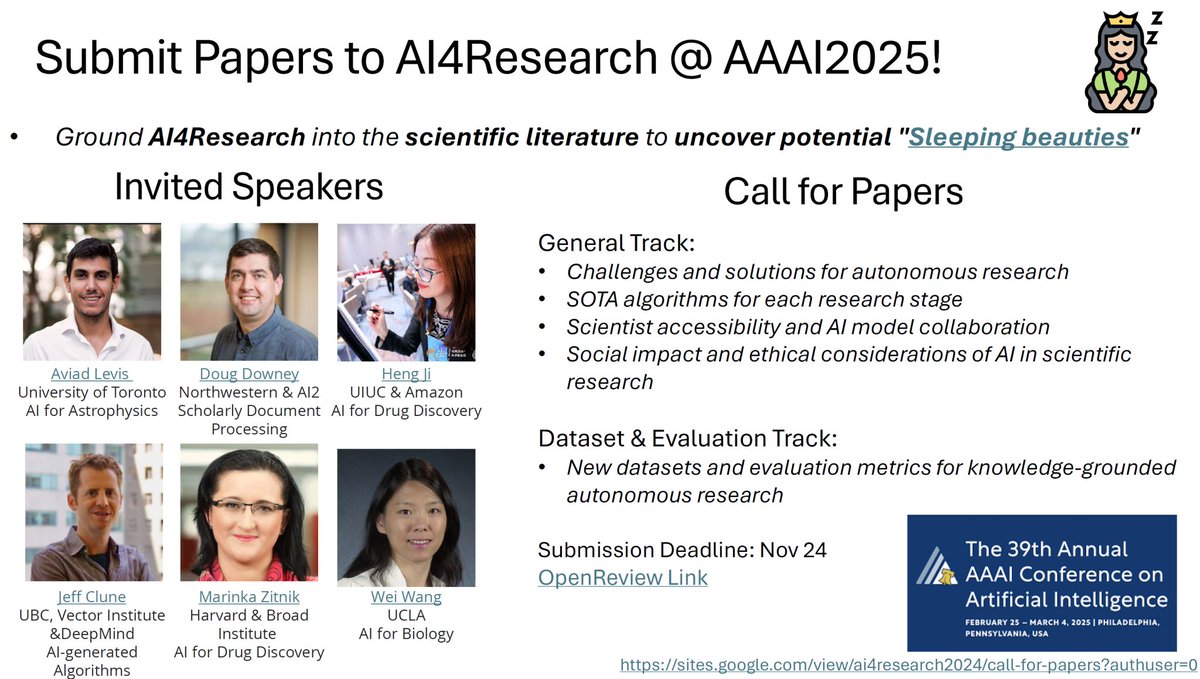

Join our workshop on AI for research! With invited speakers @hengjinlp @_DougDowney @jeffclune @marinkazitnik @WeiWang1973 @aviadlevis

🚀 [CFP] Join Us at AAAI 2025 for the Second AI4Research Workshop! 🧪 Are you exploring cutting-edge research in an AI-assisted scientific research lifecycle? Do you want to uncover potential "hidden gems" and "sleeping beauties" in scientific literature? Our full-day workshop…

Our 75-page survey paper on Tool Learning with Foundation Models has been accepted by ACM Computing Surveys, led by Dr. Yujia Qin @TsingYoga : arxiv.org/pdf/2304.08354

🚀 [CFP] Join Us at AAAI 2025 for the Second AI4Research Workshop! 🧪 Dive into the breakthroughs in the AI-assisted research lifecycle. 🗓️Submission Deadline: Nov 24 @RealAAAI #callforpapers #aaai2025 #ai4research Submit your work now! twtr.to/_sqcK

🚀 Introducing Personalized Visual Instruction Tuning (PVIT)! Can your MLLM recognize you? We propose a novel formulation and a data construction framework to create MLLMs that conduct personalized dialogues. 📄 Paper: arxiv.org/pdf/2410.07113 💻 Code: github.com/sterzhang/PVIT

🧐Can we create a navigational agent that can handle thousands of new objects across a wide range of scenes? 🚀 We introduce DivScene bench and NatVLM. DivScene contains 81 types of houses and thousands of target objects. NatVLM is an end-to-end agent based on a Large Vision…

🚀 Launching the Second AI4Research Workshop at AAAI 2025 @RealAAAI! Dive into interdisciplinary collaboration for breakthroughs in the AI-assisted research lifecycle. Submit your research by Nov. 22! twtr.to/jA1sL

Fall is here - Crisp air🌫️, falling leaves🍂, and our new preprint🚨 are all coming together! LLMs can be your best companions that truly know what you want - We train LLMs to ‘interact to align’, essentially cultivating the meta-skill of LLMs to implicitly infer the unspoken…

We're open to industry sponsorships as well (shoot us an email)! Let's grow the AI4Research community together~ 🌟

🎮 Check out the live demo for LM-Steer “Word Embeddings Are Steers for LMs” (Outstanding Paper ACL 2024) at huggingface.co/spaces/Glacioh…, which can: 1.🕹️Steer model generation 2.🔬Discover word embedding dimensions 3.📊Profile sentences & identify keywords #ACL2024 #LLMs #Science4LM

🎖Excited that "LM-Steer: Word Embeddings Are Steers for Language Models" became another of my 1st-authored Outstanding Papers at #ACL2024 (besides LM-Infinite)! We revealed the steering roles of word embeddings for continuous, compositional, efficient, interpretable & transferable control!
