Mathieu Dagréou

@Mat_Dag

Ph.D. student at @Inria_Saclay working on Optimization and Machine Learning. @matdag.bsky.social

Similar User

@mathusmassias

@PierreAblin

@tomamoral

@Korba_Anna

@jerome_bolte

@Qu3ntinB

@FranckIutzeler

@vaiter

@BenedicteColnet

@RemiGribonval

@m_e_sander

@bleistein_linus

@gowerrobert

@HuraultSamuel

@NicolasDobigeon

📣📣 Preprint alert 📣📣 « A Lower Bound and a Near-Optimal Algorithm for Bilevel Empirical Risk Minimization » w. @tomamoral, @vaiter & @PierreAblin arxiv.org/abs/2302.08766 1/3

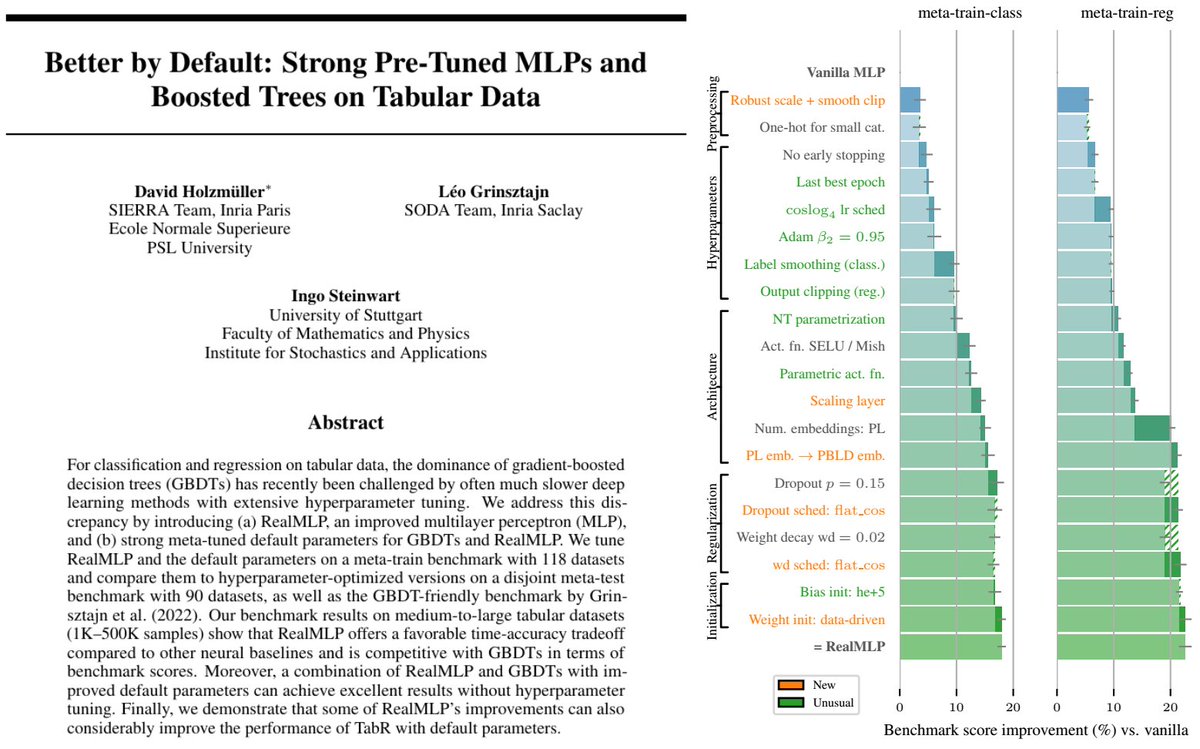

Can deep learning finally compete with boosted trees on tabular data? 🌲 In our NeurIPS 2024 paper, we introduce RealMLP, a NN with improvements in all areas and meta-learned default parameters. Some insights about RealMLP and other models on large benchmarks (>200 datasets): 🧵

New blog post: the Hutchinson trace estimator, or how to evaluate divergence/Jacobian trace cheaply. Fundamental for Continuous Normalizing Flows mathurinm.github.io/hutchinson/
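
A minimal sketch of the idea, not the code from the blog post: the Hutchinson estimator averages v^T A v over random sign vectors v, so it needs only products v -> A v. The names `matvec` and `hutchinson_trace` and the small matrix `A` are hypothetical, chosen for illustration; in a continuous normalizing flow the product would presumably come from a Jacobian-vector product rather than an explicit matrix.

```python
import random

def matvec(A, v):
    """Dense matrix-vector product for a list-of-lists matrix."""
    return [sum(a_ij * v_j for a_ij, v_j in zip(row, v)) for row in A]

def hutchinson_trace(apply_A, dim, num_samples=1000, seed=0):
    """Estimate tr(A) using only products v -> A v."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_samples):
        # Rademacher probe: entries are +1 or -1 with equal probability,
        # so E[v v^T] = I and therefore E[v^T A v] = tr(A).
        v = [rng.choice((-1.0, 1.0)) for _ in range(dim)]
        Av = apply_A(v)
        total += sum(v_i * Av_i for v_i, Av_i in zip(v, Av))
    return total / num_samples

# Illustrative matrix (an assumption, not taken from the blog post).
A = [[2.0, 1.0, 0.0],
     [0.5, 3.0, -1.0],
     [0.0, 4.0, 5.0]]
exact = sum(A[i][i] for i in range(3))
estimate = hutchinson_trace(lambda v: matvec(A, v), dim=3, num_samples=20000)
print(exact, estimate)
```

Each sample costs one product with A, so the estimate never reads the diagonal directly. For a diagonal matrix the Rademacher estimate is exact, since every v_i squared equals 1.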

📣 Job alerts in Toulouse (Maths department) 📣 There are multiple job offers, from Master internships to Assistant Professor positions, in the mathematics of data science, optimization, statistical fairness and robustness. I will try to gather them in this thread 🧵

We also released Pixtral Large, a new SOTA vision model. mistral.ai/news/pixtral-l…

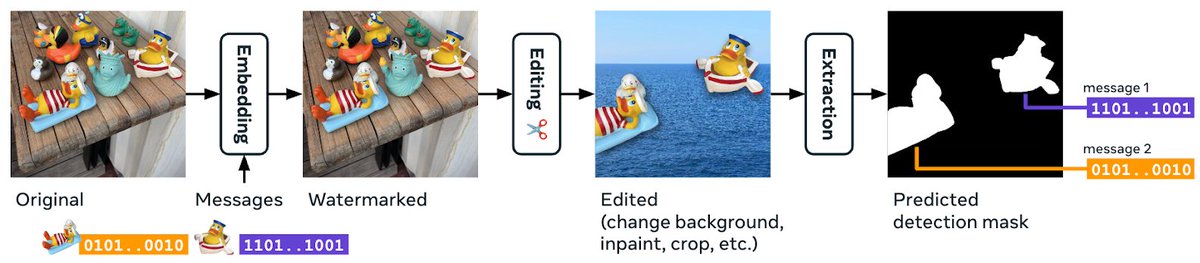

🔒Image watermarking is promising for digital content protection. But images often undergo many modifications—spliced or altered by AI. Today at @AIatMeta, we released Watermark Anything that answers not only "where does the image come from," but "what part comes from where." 🧵

I have multiple openings for M2 internship / PhD / postdoc in Nice (France) on topics related to bilevel optimization, automatic differentiation and safe machine learning. More details on my webpage samuelvaiter.com Contact me by email, and feel free to forward/RT :)

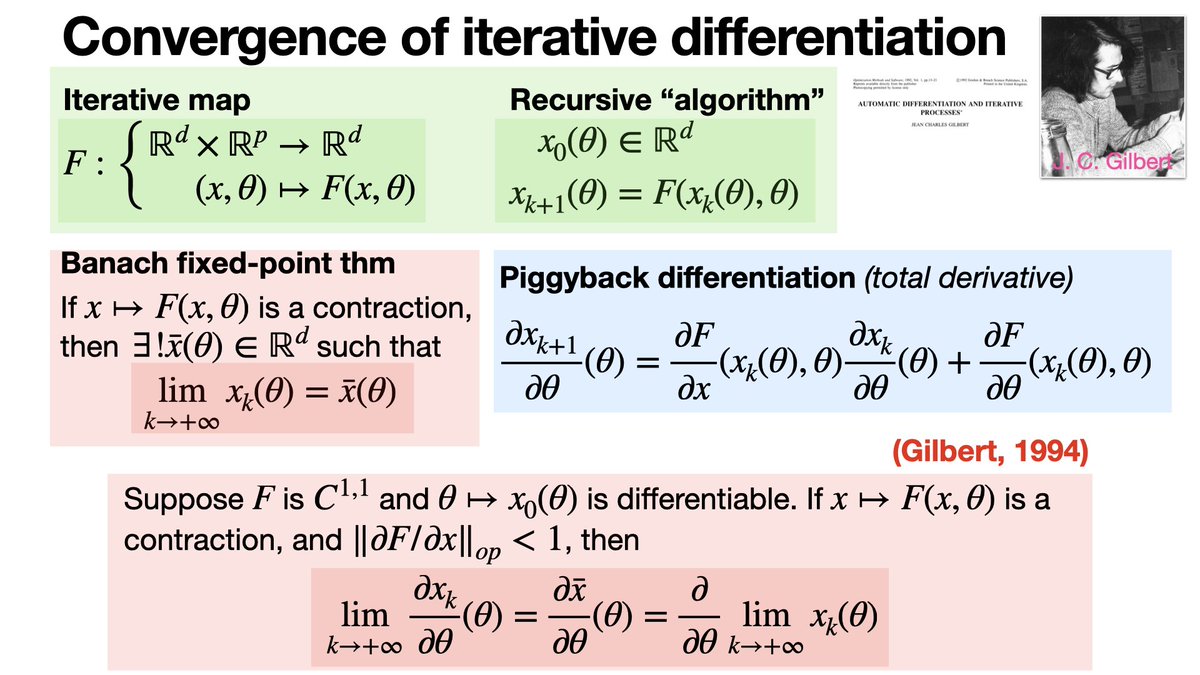

Convergence of iterates does not imply convergence of the derivatives. Nevertheless, Gilbert (1994) proposed a theorem for interchanging limit and derivative under strong assumptions on the spectrum of the derivatives. who.rocq.inria.fr/Jean-Charles.G…
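
A standard counterexample illustrates the first sentence. It is a textbook sequence chosen here for illustration, not one taken from Gilbert's paper: the functions converge uniformly to zero while their derivatives oscillate.

```latex
x_n(\theta) = \frac{\sin(n\theta)}{n}
\quad\Longrightarrow\quad
\sup_{\theta} |x_n(\theta)| \le \frac{1}{n} \xrightarrow[n \to \infty]{} 0,
\qquad
x_n'(\theta) = \cos(n\theta),
\qquad
x_n'(\pi) = (-1)^n .
```

The limit function is identically zero, so its derivative is zero everywhere, yet the sequence of derivatives at \theta = \pi has no limit.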

Leader-follower games, also known as Stackelberg games, are models in game theory where one player (the leader) makes a decision first, and the other player (the follower) responds, considering the leader’s action. This is one of the first instances of bilevel optimization.
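
A small sketch of the leader-follower structure on a finite game, solved by enumeration. The function name `stackelberg`, the payoff matrices, and the rule that ties are broken in the leader's favour are all assumptions made for illustration.

```python
def stackelberg(leader_payoff, follower_payoff):
    """Solve a finite leader-follower game by enumeration.

    payoff[i][j] is the payoff when the leader plays i and the follower plays j.
    Assumption: if the follower has several best responses, the one that is
    best for the leader is selected.
    Returns (leader_action, follower_action, leader_value).
    """
    best = None
    for i, (l_row, f_row) in enumerate(zip(leader_payoff, follower_payoff)):
        # Inner problem: the follower maximises its own payoff given i.
        top = max(f_row)
        responses = [j for j, f in enumerate(f_row) if f == top]
        j = max(responses, key=lambda k: l_row[k])
        # Outer problem: the leader anticipates that response.
        if best is None or l_row[j] > best[2]:
            best = (i, j, l_row[j])
    return best

# Hypothetical payoffs: action 0 is dominant for the leader in simultaneous
# play, but committing to action 1 steers the follower to a better outcome.
leader_payoff = [[2, 4],
                 [1, 3]]
follower_payoff = [[1, 0],
                   [0, 1]]
solution = stackelberg(leader_payoff, follower_payoff)
print(solution)  # (1, 1, 3)
```

The nested loops mirror the bilevel structure: the follower's maximisation is solved inside the leader's search.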

Looking forward to talking about gradient clipping and (geometric) medians next Monday 🖇️

The Łojasiewicz inequality provides a way to control how close points are to the zeros of a real analytic function based on the value of the function itself. Extensions of this result to semialgebraic or o-minimal functions exist. matwbn.icm.edu.pl/ksiazki/sm/sm1…
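
For reference, one classical form of the statement, written with notation introduced here (U, K, Z, C, \alpha are not from the post):

```latex
\text{Let } f : U \to \mathbb{R} \text{ be real analytic on an open set } U \subset \mathbb{R}^n,
\quad Z = \{x \in U : f(x) = 0\} \neq \emptyset,
\quad K \subset U \text{ compact.}
\\[4pt]
\text{Then there exist } C > 0 \text{ and } \alpha > 0 \text{ such that }
\quad \operatorname{dist}(x, Z)^{\alpha} \le C\, |f(x)| \quad \text{for all } x \in K .
```

A one-line check: for f(x) = x^2 on the real line, Z = {0} and dist(x, Z) = |x|, so the inequality holds with \alpha = 2 and C = 1.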

#ERCStG 🏆 | Congratulations to @TaylorAdrien, research scientist at @inria in the @inria_paris centre and member of the joint project-team @Sierra_ML_Lab (@CNRS @CNRSinformatics @ENS_ULM), winner of an ERC Starting Grant 👏 Discover his profile and his project 👉 buff.ly/3YlTBU6

The proximal operator generalizes projection in convex optimization. It converts minimisers to fixed points. It is at the core of nonsmooth splitting methods and was first introduced by Jean-Jacques Moreau in 1965. numdam.org/article/BSMF_1…
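
Two closed-form cases make this concrete. This is a minimal sketch in one dimension, with hypothetical function names: the prox of the indicator of an interval is the projection onto it, and the prox of the absolute value is soft-thresholding, whose fixed point is the minimiser 0.

```python
def prox_abs(x, step):
    """Prox of step * |.|, i.e. argmin_u |u| + (u - x)**2 / (2 * step).

    The closed form is soft-thresholding at level `step`.
    """
    if x > step:
        return x - step
    if x < -step:
        return x + step
    return 0.0

def project_interval(x, lo, hi):
    """Prox of the indicator function of [lo, hi]: the Euclidean projection."""
    return min(max(x, lo), hi)

# Proximal point iteration x <- prox(x) on f(x) = |x|, started from 3.0.
x = 3.0
iterates = [x]
for _ in range(4):
    x = prox_abs(x, step=1.0)
    iterates.append(x)

print(iterates)                        # [3.0, 2.0, 1.0, 0.0, 0.0]
print(project_interval(5.0, 0.0, 1.0)) # 1.0
```

Once the iterates reach the minimiser 0 they stop moving, which is the fixed-point property in action.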

Generating images via diffusion is cool, but _not_ generating certain images is vital. E.g., an external set of protected images, or already generated images, to increase diversity. Our new diffusion post-hoc intervention SPELL does that. 🧵 1/6 📖 arxiv.org/abs/2410.06025

Bilevel optimization problems with multiple inner solutions come typically in two flavors: optimistic and pessimistic. Optimistic assumes the inner problem selects the best solution for the outer objective, while pessimistic assumes the worst-case solution is chosen.
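
In symbols, with notation introduced here for illustration (F is the outer objective, G the inner objective, S(x) the set of inner solutions):

```latex
S(x) = \operatorname*{arg\,min}_{y} \; G(x, y),
\qquad
\text{optimistic:} \;\; \min_{x} \; \min_{y \in S(x)} F(x, y),
\qquad
\text{pessimistic:} \;\; \min_{x} \; \max_{y \in S(x)} F(x, y).
```

When S(x) is a singleton for every x, the two formulations coincide.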

🏆Didn't get the Physics Nobel Prize this year, but really excited to share that I've been named one of the #FWIS2024 @FondationLOreal-@UNESCO French Young Talents alongside 34 amazing young researchers! This award recognizes my research on deep learning theory #WomenInScience 👩‍💻

#FWIS2024 🎖️@SibylleMarcotte, doctoral student in the Department of Mathematics and Applications (#mathématiques) at ENS @psl_univ, is among the winners of the 2024 French Young Talents Award @FondationLOreal @UNESCO #ForWomenInScience @AcadSciences @4womeninscience Congratulations to her!!! 👏

Thanks @vaiter, @tomamoral and @PierreAblin for your great support during this journey

Congratulations to Dr. Dagréou @Mat_Dag for a brilliant PhD defense! @tomamoral, @PierreAblin and I were lucky to have you as a student.

🎉✨ Our paper "Geodesic Optimization for Predictive Shift Adaptation on EEG data" has been accepted as a Spotlight at @NeurIPSConf! #NeurIPS2024 arxiv.org/abs/2407.03878 @AntoineCollas @sylvcheva @agramfort @dngman 1/7

How fast is gradient descent, *for real*? Some (partial) answers in this new blog post on scaling laws for optimization. francisbach.com/scaling-laws-o…

Our paper on Functional Bilevel Optimization (FBO) is accepted as a spotlight at #NeurIPS2024! TLDR: FBO shifts focus from parameters to the prediction function they represent, offering new algorithms that bridge bilevel optimization and deep learning. arxiv.org/abs/2403.20233

We are thrilled to say that NeurIPS@Paris is back for a 4th edition on the 4th and 5th of December 2024 at @Sorbonne_Univ_ A great occasion to meet + discuss recent advances in ML in central Paris! More info: neuripsinparis.github.io/neurips2024par… Registration: forms.gle/Fc4nbeW6ubahYb…


