Linjie (Lindsey) Li

@LINJIEFUNresearching @Microsoft, @UW, contributing to https://t.co/a3zper7NJG

Similar User

@zhegan4

@JialuLi96

@yining_hong

@TianlongChen4

@gberta227

@fredahshi

@xwang_lk

@donglixp

@taoyds

@ZhuWanrong

@jw2yang4ai

@hardy_qr

@ChunyuanLi

@shoubin621

@ruizhang_nlp

Sorry to leave out one important detail on this job posting. The research area is multimodal understanding and generation.

We are hiring full-time/part-time research interns all year round. If you are interested, please send your resume to linjli@microsoft.com

🚀🚀Excited to introduce GenXD: Generating Any 3D and 4D Scenes! A joint framework for general 3D and 4D generation, supporting both object-level and scene-level generation. Project Page: gen-x-d.github.io Arxiv: arxiv.org/abs/2411.02319

🎬Meet SlowFast-VGen: an action-conditioned long video generation system that learns like a human brain! 🧠Slow learning builds the world model, while fast learning captures memories - enabling incredibly long, consistent videos that respond to your actions in real-time.…

🌟NEW Benchmark Release Alert🌟 We introduce 📚MMIE, a knowledge-intensive benchmark to evaluate interleaved multimodal comprehension and generation in LVLMs, covering 20K+ examples covering 12 fields and 102 subfields. 🔗 [Explore MMIE here](mmie-benchmark.github.io)

[6/N] Led by: @richardxp888, @lillianwei423, @StephenQS0710, and nice collab w/ @LINJIEFUN, @dingmyu and others. - Paper: arxiv.org/pdf/2410.10139 - Project page: mmie-bench.github.io

I am attending #COLM2024 in Philly! Will present our paper “List Items One by One: A New Data Source and Learning Paradigm for Multimodal LLMs” on Monday morning ⏰ Come and chat if you are interested in multimodal LLMs, synthetic data and training recipes!

This example makes me doubt whether large models like GPT-4o truly have intelligence. Q: Which iron ball will land first, A or B? GPT-4o: Both will land at the same time. I: ??? This example is from our proposed benchmark MM-Vet v2. Paper: huggingface.co/papers/2408.00… Code & data:…

💻We live in the digital era, where screens (PC/Phone) are integral to our lives. 🧐Curious about how AI assistant can help with computer tasks? 🚀Check out the latest progress in repo: github.com/showlab/Awesom… ✨A collection of the up-to-date GUI-related papers and resources.

Recordings of all talks are now available on YouTube and Bilibili. Links are updated on our website. Enjoy!

Interested in vision foundation models like GPT-4o and Sora? Come and join us at our CVPR2024 tutorial on “Recent Advances in Vision Foundation Models” tomorrow (6/17 9:00AM-17:00PM) in Summit 437-439, Seattle Convention Center. Website: vlp-tutorial.github.io #cvpr2024

Afternoon session is starting! Join us in person or online via zoom. For more information, visit vlp-tutorial.github.io

Interested in vision foundation models like GPT-4o and Sora? Come and join us at our CVPR2024 tutorial on “Recent Advances in Vision Foundation Models” tomorrow (6/17 9:00AM-17:00PM) in Summit 437-439, Seattle Convention Center. Website: vlp-tutorial.github.io #cvpr2024

Try joining us online via Zoom if you cannot get into the room. For more information, visit vlp-tutorial.github.io

Interested in vision foundation models like GPT-4o and Sora? Come and join us at our CVPR2024 tutorial on “Recent Advances in Vision Foundation Models” tomorrow (6/17 9:00AM-17:00PM) in Summit 437-439, Seattle Convention Center. Website: vlp-tutorial.github.io #cvpr2024

Happening now at Summit 437-439. For more information, visit vlp-tutorial.github.io

Interested in vision foundation models like GPT-4o and Sora? Come and join us at our CVPR2024 tutorial on “Recent Advances in Vision Foundation Models” tomorrow (6/17 9:00AM-17:00PM) in Summit 437-439, Seattle Convention Center. Website: vlp-tutorial.github.io #cvpr2024

Thanks for sharing! ❓Can AI assistants recreate this animation effects in *PowerPoint*? 🆕We present VideoGUI -- A Benchmark for GUI Automation from Instructional Videos 👉Check it out at showlab.github.io/videogui/

VideoGUI: A Benchmark for GUI Automation from Instructional Videos Presents a novel multi-modal benchmark designed to evaluate GUI assistants on visual-centric GUI tasks like Photoshop and video editing abs: arxiv.org/abs/2406.10227 proj: showlab.github.io/videogui/

Organizers: @ChunyuanLi, @zhegan4, @HaotianZhang4AI, @jw2yang4ai, @LINJIEFUN, @zhengyuan_yang, @linkeyun2, @JianfengGao0217, @lijuanwang

Interested in vision foundation models like GPT-4o and Sora? Come and join us at our CVPR2024 tutorial on “Recent Advances in Vision Foundation Models” tomorrow (6/17 9:00AM-17:00PM) in Summit 437-439, Seattle Convention Center. Website: vlp-tutorial.github.io #cvpr2024

Come join us in Summit 437-439 tomorrow (6/17 9:00AM-5:00PM)! We are excited to host our 5th tutorial “Recent Advances in Vision Foundation Models”!

Interested in vision foundation models like GPT-4o and Sora? Come and join us at our CVPR2024 tutorial on “Recent Advances in Vision Foundation Models” tomorrow (6/17 9:00AM-17:00PM) in Summit 437-439, Seattle Convention Center. Website: vlp-tutorial.github.io #cvpr2024

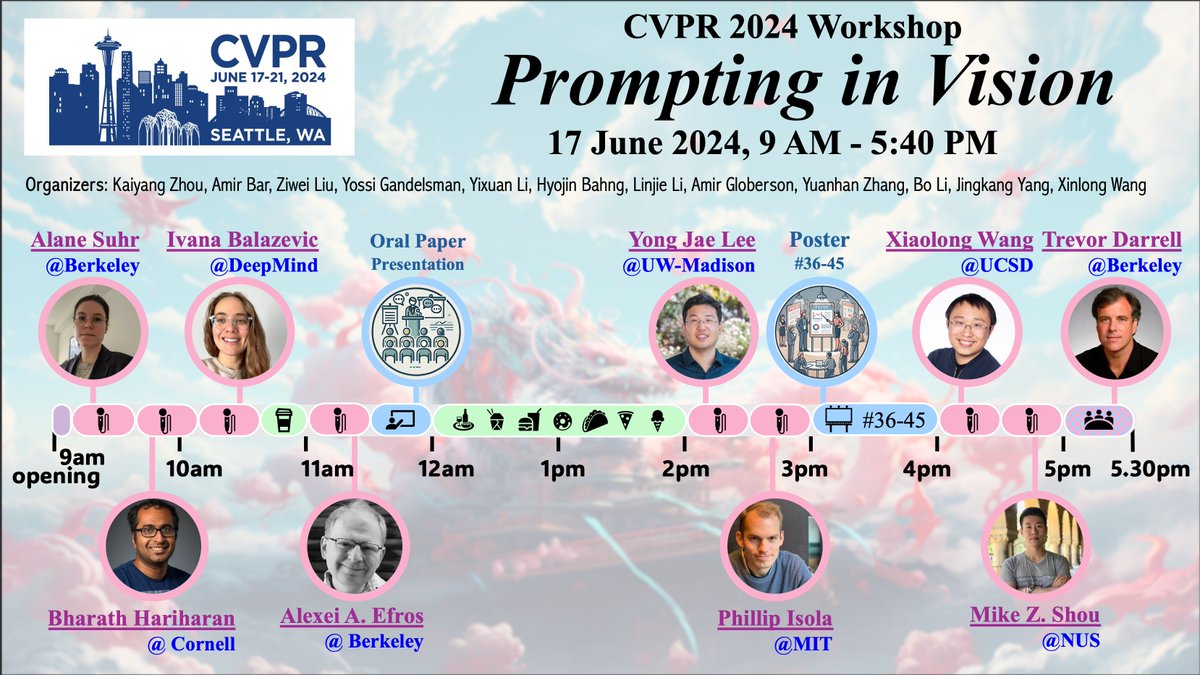

Looking for a good prompt? Join our workshop at #CVPR2024 on June 17! 🚀✨ @CVPR @_amirbar @liuziwei7 @YGandelsman @SharonYixuanLi @hyojinbahng @LINJIEFUN @amirgloberson @zhang_yuanhan @BoLi68567011 @JingkangY

🌟Thrilled to introduce MMWorld, a new benchmark for multi-discipline, multi-faceted multimodal video understanding, towards evaluating the "world modeling" capabilities in Multimodal LLMs. 🔥 🔍 Key Features of MMWorld: - Multi-discipline: 7 disciplines, Art🎨 & Sports🥎,…

MMWorld Towards Multi-discipline Multi-faceted World Model Evaluation in Videos Multimodal Language Language Models (MLLMs) demonstrate the emerging abilities of "world models" -- interpreting and reasoning about complex real-world dynamics. To assess these abilities,

The deadline for paper submission is approaching: **15 Mar 2024**. Join us if you are interested in the emerging prompting-based pardigm. @_amirbar @liuziwei7 @YGandelsman @SharonYixuanLi @hyojinbahng @LINJIEFUN @amirgloberson @zhang_yuanhan @BoLi68567011 @JingkangY

#CVPR2024 Please consider submitting your work to our workshop on Prompting in Vision (Track on Emerging Topics) More details at prompting-in-vision.github.io/index_cvpr24.h…

United States Trends

- 1. Jake Paul 1,06 Mn posts

- 2. #Arcane 247 B posts

- 3. Good Saturday 29 B posts

- 4. #Caturday 5.953 posts

- 5. #SaturdayVibes 3.610 posts

- 6. Jayce 58,7 B posts

- 7. #saturdaymorning 2.398 posts

- 8. Pence 84,1 B posts

- 9. #SaturdayMood 2.144 posts

- 10. AioonMay Limerence 148 B posts

- 11. Serrano 240 B posts

- 12. IT'S GAMEDAY 2.955 posts

- 13. Vander 19,2 B posts

- 14. $WOOPER N/A

- 15. Fetterman 38,7 B posts

- 16. Woop Woop 1.548 posts

- 17. $XRP 89,5 B posts

- 18. He's 58 32,7 B posts

- 19. maddie 23,1 B posts

- 20. John Oliver 15,3 B posts

Who to follow

-

Zhe Gan

Zhe Gan

@zhegan4 -

Jialu Li

Jialu Li

@JialuLi96 -

Yining Hong

Yining Hong

@yining_hong -

Tianlong Chen

Tianlong Chen

@TianlongChen4 -

Gedas Bertasius

Gedas Bertasius

@gberta227 -

Freda Shi

Freda Shi

@fredahshi -

Xin Eric Wang

Xin Eric Wang

@xwang_lk -

Li Dong

Li Dong

@donglixp -

Tao Yu

Tao Yu

@taoyds -

Wanrong Zhu

Wanrong Zhu

@ZhuWanrong -

Jianwei Yang

Jianwei Yang

@jw2yang4ai -

Fangyu Liu

Fangyu Liu

@hardy_qr -

Chunyuan Li

Chunyuan Li

@ChunyuanLi -

Shoubin Yu

Shoubin Yu

@shoubin621 -

Rui Zhang @ EMNLP 2024

Rui Zhang @ EMNLP 2024

@ruizhang_nlp

Something went wrong.

Something went wrong.