Katherine Xu

@KateYXuPhD student @Penn @GRASPlab • @MIT CS '22 🤺 • Research Intern @AdobeResearch

Similar User

@SharonYixuanLi

@MinhyukSung

@dqj5182

@avinab_saha

@JiahuiLei1998

@AlbalakAlon

@weizeming25

@TheCamdar

@MeganJWei

@liyuan_zz

@SpyrosPavlatos

@EChatzipantazis

@aaditya_naik

@shreyahavaldar

@tuanlda78202

Excited to share our #CVPR2024 Highlight paper! 🌟 “Amodal Completion via Progressive Mixed Context Diffusion” Our method fully visualizes occluded objects using pretrained diffusion models (without finetuning!) by overcoming occlusion + co-occurrence k8xu.github.io/amodal

Synthetic data has huge potential to drive new improvements in training and evaluation for computer vision. Interested in learning more about advancements and challenges? Join us at the SynData4CV Workshop at #CVPR2024 tomorrow (June 18)! syndata4cv.github.io

Calling all #CVPR2024 attendees! Join us at the SynData4CV Workshop at @CVPR (Jun 18 full day at Summit 423-425) to learn more about recent advancements in synthetic data! Explore more: syndata4cv.github.io

What do you see in these images? These are called hybrid images, originally proposed by Aude Oliva et al. They change appearance depending on size or viewing distance, and are just one kind of perceptual illusion that our method, Factorized Diffusion, can make.

Had a fun time attending NYC Computer Vision Day + sharing my work on Amodal Completion via Progressive Mixed Context Diffusion #CVPR2024 Project page: k8xu.github.io/amodal/ Event: cs.nyu.edu/~fouhey/NYCVis… Thank you to the organizers and sponsors! 🗽

I’ll be at #NeurIPS2023 next week! Super excited to present our spotlight work, DreamSim, with @xkungfu . Come check out our poster (#127) on Wednesday morning, and please reach out if you want to chat about representation learning, synthetic data, and/or computer vision! (1/2)

We’re releasing a new image similarity metric and dataset! --> DreamSim: a metric which outperforms LPIPS, CLIP, and DINO on similarity and retrieval tasks --> NIGHTS: a dataset of synthetic images with human similarity ratings paper+code+data: dreamsim-nights.github.io 1/n

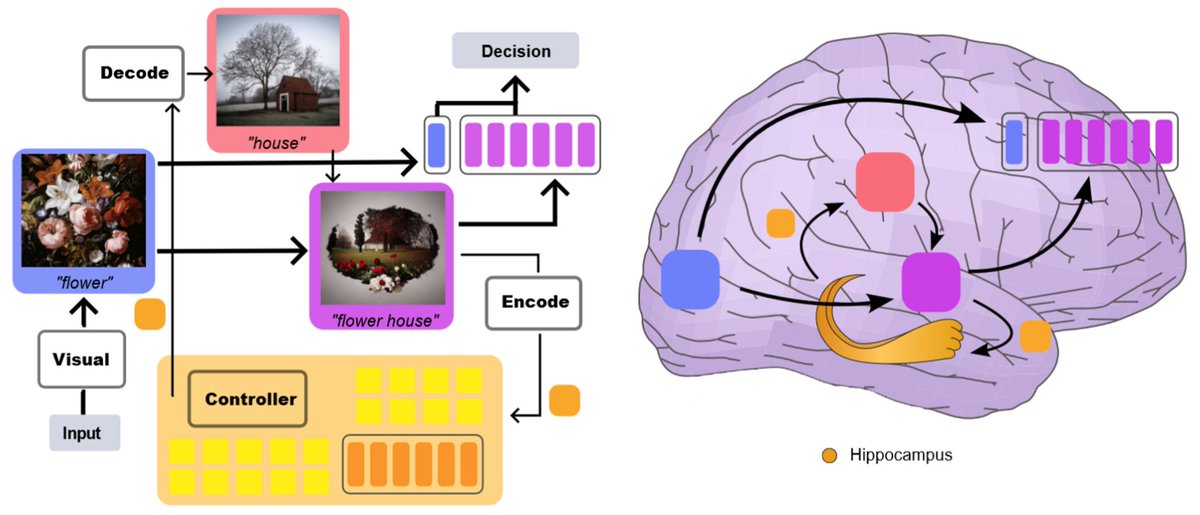

Brain🧠and Deep-nets🤖are massive black boxes, we don’t know how they work. Others use Deep-nets to explain the brain:🤖 ->🧠 We do the opposite:🧠->🤖 𝐁𝐫𝐚𝐢𝐧 𝐃𝐞𝐜𝐨𝐝𝐞𝐬 𝐃𝐞𝐞𝐩 𝐍𝐞𝐭𝐬 arxiv:arxiv.org/abs/2312.01280 webpage&video:huzeyann.github.io/brain-decodes-… read on 🧵

Check out our #ICCV2023 work "NeuS2" (vcai.mpi-inf.mpg.de/projects/NeuS2/), which reconstructs high-quality 3D geometry from multi-view images in <2 minutes. We derived a new analytical formula of the second-order derivatives for ReLU-based MLPs, leading to an efficient CUDA implementation.

0/. Brain is not ViT. we scored 70.8 in the Algonauts 2023 visual brain competition, w/o ensemble we can do 66.8 score. other teams (including me in the past) are struggling with 60. “memory” is the secret. paper: arxiv.org/abs/2308.01175 code&webpage: huzeyann.github.io/mem

In our #ICCV2023 paper, we find the existence of common features we call “Rosetta Neurons” across a variety of models with different architectures, sources of supervision. and training datasets. abs: arxiv.org/abs/2306.09346 proj: yossigandelsman.github.io/rosetta_neuron… 1/n

United States Trends

- 1. Mike 1,79 Mn posts

- 2. Serrano 242 B posts

- 3. Canelo 16,9 B posts

- 4. #NetflixFight 74 B posts

- 5. #arcane2spoilers N/A

- 6. Father Time 10,6 B posts

- 7. Logan 78,8 B posts

- 8. VANDER 5.303 posts

- 9. #netflixcrash 16,4 B posts

- 10. He's 58 27,7 B posts

- 11. Rosie Perez 15,1 B posts

- 12. Boxing 305 B posts

- 13. ROBBED 101 B posts

- 14. Shaq 16,4 B posts

- 15. #buffering 11,1 B posts

- 16. My Netflix 83,8 B posts

- 17. Tori Kelly 5.358 posts

- 18. Roy Jones 7.251 posts

- 19. Ramos 69,3 B posts

- 20. Muhammad Ali 19,5 B posts

Who to follow

-

Sharon Y. Li

Sharon Y. Li

@SharonYixuanLi -

Minhyuk Sung

Minhyuk Sung

@MinhyukSung -

Daniel Sungho Jung

Daniel Sungho Jung

@dqj5182 -

Avinab Saha 🇮🇳

Avinab Saha 🇮🇳

@avinab_saha -

Jiahui

Jiahui

@JiahuiLei1998 -

Alon Albalak

Alon Albalak

@AlbalakAlon -

Zeming Wei

Zeming Wei

@weizeming25 -

Cameron Wong

Cameron Wong

@TheCamdar -

Megan Wei @ ISMIR 2024 🌁

Megan Wei @ ISMIR 2024 🌁

@MeganJWei -

Liyuan Zhu

Liyuan Zhu

@liyuan_zz -

Spyros Pavlatos

Spyros Pavlatos

@SpyrosPavlatos -

Evangelos Chatzipantazis

Evangelos Chatzipantazis

@EChatzipantazis -

Aaditya Naik

Aaditya Naik

@aaditya_naik -

Shreya Havaldar

Shreya Havaldar

@shreyahavaldar -

charles

charles

@tuanlda78202

Something went wrong.

Something went wrong.