James Chang

@strategist922

I use data, analysis and visualization to advance understanding, make inferences, and make things better.

Wow, NVIDIA just published a 72B model which is ~on par with Llama 3.1 405B in math and coding evals and also has vision 🤯

50 AI Tools to Turn Hours of Work into Minutes: 1. Creative Brainstorming - Claude AI - ChatGPT 4 - Bing Chat - Perplexity - Better research 2. Image Creation & Editing - Midjourney - Bing Create - Leap AI - Astira AI - Stable Diffusion 3. Note-Taking & Summarizing -…

(1/7) Physics of LM, Part 2.2, with 8 results on "how LLMs learn from mistakes", is now on arXiv: arxiv.org/abs/2408.16293. We explore the possibility of enabling models to correct errors immediately after they are made (no multi-round prompting). Check out the slides in this thread.

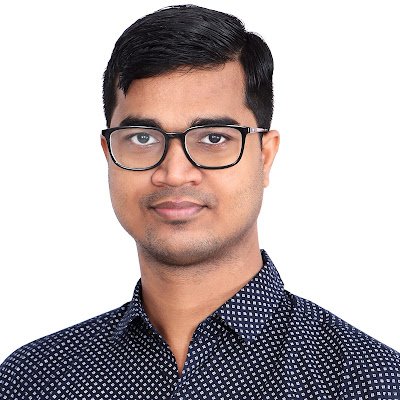

(1/n) Training LLMs can be hindered by out-of-memory, scaling batch size, and seq length. Add one line to boost multi-GPU training throughput by 20% and reduce memory usage by 60%. Introducing Liger-Kernel: Efficient Triton Kernels for LLM Training. github.com/linkedin/Liger…

Hawaii harvesting of tropical fish for aquariums approved reefbuilders.com/2022/10/16/haw… via @reefbuilders

All right, fully open-source code, Apache license for anyone and any company to use freely: github.com/leptonai/searc… Our goal: enable creators and enterprises to build AI applications as easily as possible, like this search application. Happy Friday and have fun!

Building an AI app has never been easier. Over the weekend, we built a demo for conversational search with <500 lines of python, and it's live at search.lepton.run. Give it a shot! Code to be open sourced soon as we clean up all "# temp scaffolds" stuff. (1/x)

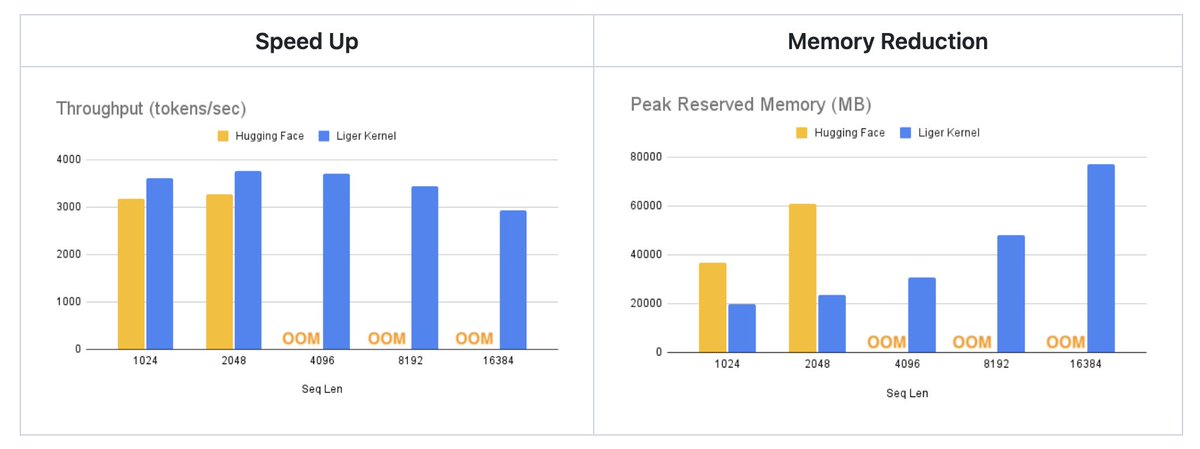

What if I told you that you can simultaneously enhance an LLM's task performance and reduce its size with no additional training? We find selective low-rank reduction of matrices in a transformer can improve its performance on language understanding tasks, at times by 30% pts!🧵

Top 9 Spaces from all time 1. @StabilityAI Stable Diffusion 2.1 2. @huggingface Open LLM Leaderboard 3. @craiyonAI DALL-E Mini 4. @AIatMeta MusicGen 5. @flngr Comic Factory 6. @angrypenguinPNG Illusion Diffusion 7. @pharmapsychotic CLIP Interrogator 8. @Microsoft HuggingGPT 9.…

Discarded Aloe Peels Could Make a Sustainable Agricultural Insecticide, Study Finds ecowatch.com/aloe-peels-ins…

If you think this is a normal driving video, you'd be wrong. This entire video is generated by @wayve_ai's generative AI model, GAIA-1. The model was built to generate realistic driving scenes into the future to improve safety of self-driving cars in the real world.

HoloAssist: A multimodal dataset for next-gen AI copilots for the physical world microsoft.com/en-us/research…

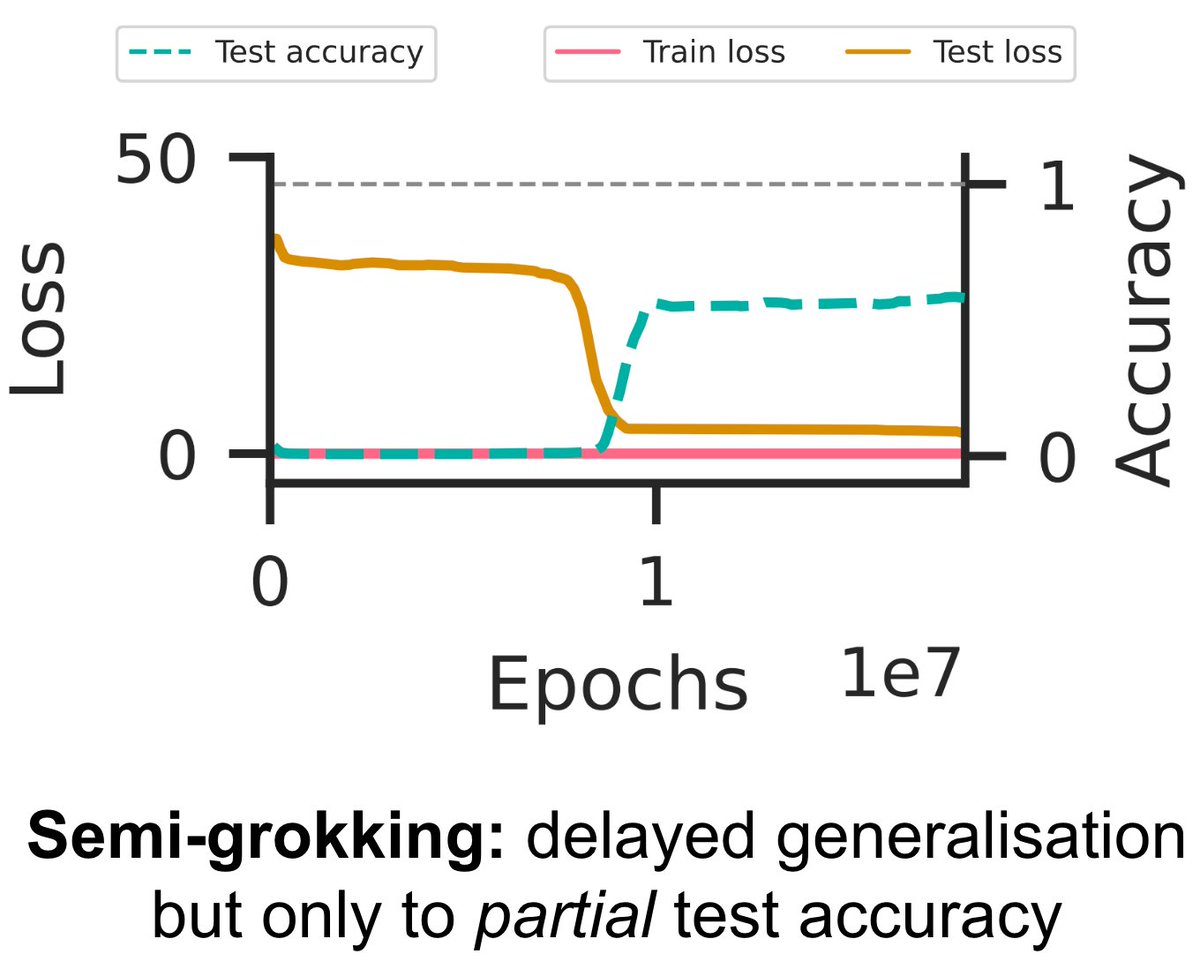

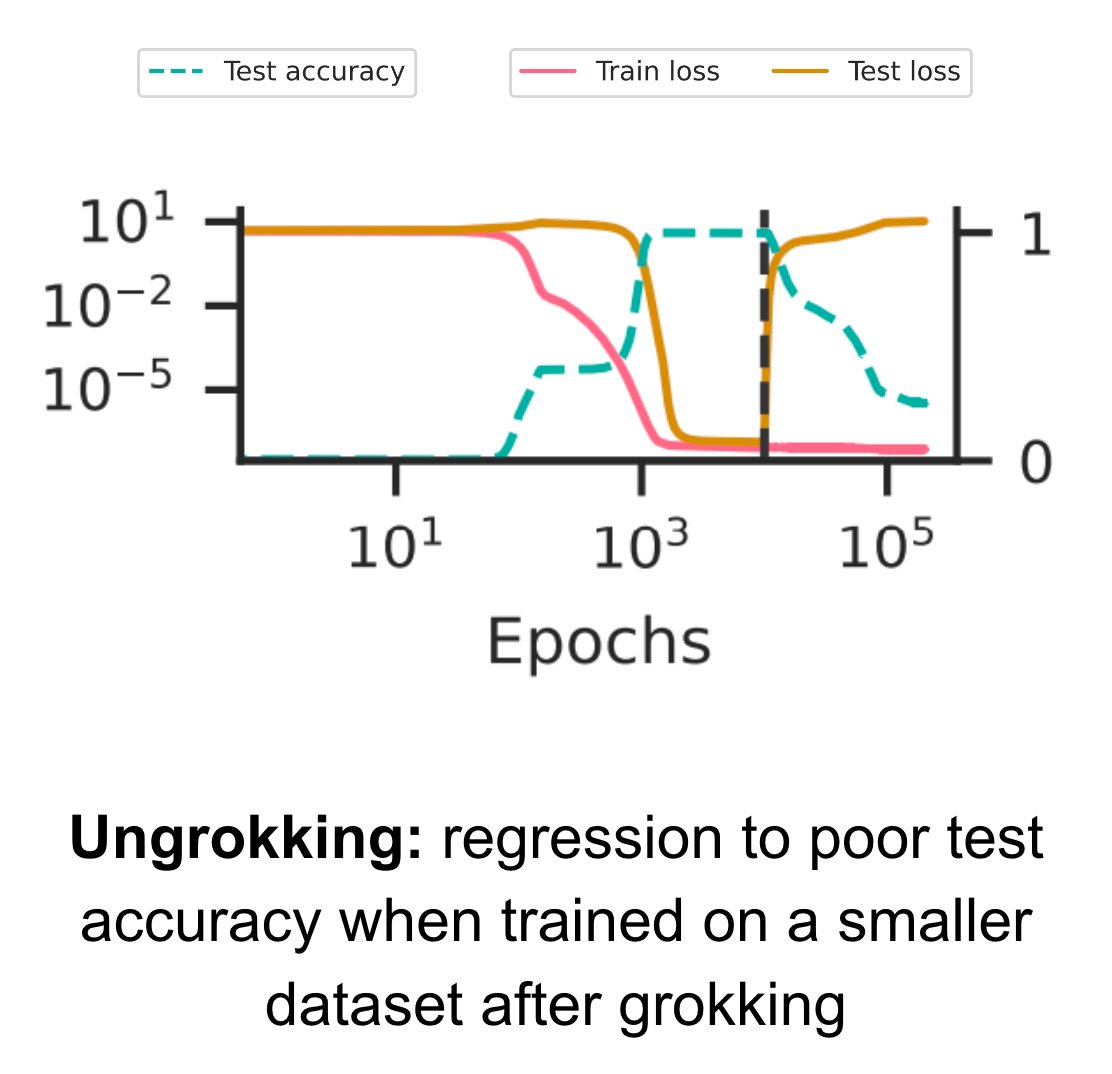

Our latest paper (arxiv.org/abs/2309.02390) provides a general theory explaining when and why grokking (aka delayed generalisation) occurs – a theory so precise that we can predict hyperparameters that lead to partial grokking, and design interventions that reverse grokking! 🧵👇

📣 Today we launched an overhauled NLP course to 600 students in the online MS programs at UT Austin. 98 YouTube videos 🎥 + readings 📖 open to all! cs.utexas.edu/~gdurrett/cour… w/5 hours of new 🎥 on LLMs, RLHF, chain-of-thought, etc! Meme trailer 🎬 youtu.be/DcB6ZPReeuU 🧵

Today I finally read about speculative decoding, and it's a brilliant idea to speed up inference of LLMs! I recommend the amazing blog by @joao_gante if you want to understand how it works (and is already implemented in 🤗): huggingface.co/blog/assisted-…
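For readers who want the gist of speculative decoding, here is a minimal sketch of the greedy variant. It is a toy under stated assumptions, not the Hugging Face implementation: `speculative_decode`, `greedy_decode`, `target_model` and `draft_model` are hypothetical names, and both "models" are plain functions that map a token list to the next token. A cheap draft model guesses a few tokens ahead, the expensive target model checks them, and the longest agreeing prefix is kept.

```python
from typing import Callable, List

# Hypothetical stand-in for a language model: context tokens in, next token out.
NextToken = Callable[[List[int]], int]


def greedy_decode(target: NextToken, prompt: List[int], max_new_tokens: int) -> List[int]:
    """Baseline: one target-model call per generated token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(target(tokens))
    return tokens


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int],
                       max_new_tokens: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding: the draft proposes, the target verifies."""
    tokens = list(prompt)
    goal = len(prompt) + max_new_tokens
    while len(tokens) < goal:
        # 1. The draft model proposes up to k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(min(k, goal - len(tokens))):
            proposal.append(draft(tokens + proposal))
        # 2. The target model checks each proposed position. A real system
        #    would score all positions in one batched forward pass; this toy
        #    loops over them for clarity.
        for i, guess in enumerate(proposal):
            expected = target(tokens + proposal[:i])
            if guess != expected:
                # Keep the agreeing prefix, then the target's own token.
                tokens.extend(proposal[:i])
                tokens.append(expected)
                break
        else:
            # Every proposed token matched the target's choice.
            tokens.extend(proposal)
    return tokens


# Toy models (assumptions for illustration only).
def target_model(ctx: List[int]) -> int:
    return (ctx[-1] * 2 + 1) % 7


def draft_model(ctx: List[int]) -> int:
    # Agrees with the target except after token 3, where it guesses wrong.
    return 5 if ctx[-1] == 3 else target_model(ctx)


baseline = greedy_decode(target_model, [1], 10)
speculative = speculative_decode(target_model, draft_model, [1], 10)
```

In this greedy form the output matches what the target model would produce on its own; the speed-up in practice comes from verifying several drafted tokens in a single target pass.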

Full F16 precision 34B Code Llama at >20 t/s on M2 Ultra

Today my Transformers-Tutorials repo hit 2,000 stars on @github! 🤩 Very grateful :) The repo contains many tutorial notebooks on inference + fine-tuning with custom data for Transformers on all kinds of data: text, images, scanned PDFs, videos ⭐ github.com/NielsRogge/Tra…

Cohere Blog: Generative AI with Cohere: Part 5 - Chaining Prompts txt.cohere.com/generative-ai-…

Did you know? 🕷️ The venom of the wandering spider, a.k.a. the banana spider (Phoneutria nigriventer), contains a surprising secret. It's been found to induce erections in males that last longer than 2 hours 😳, earning its reputation as a natural Viagra.

Thrilled to see Vicuna-33B top on the AlpacaEval leaderboard! Nonetheless, it's crucial to recognize that open models are still lagging behind in some areas, such as math, coding, and extraction as per our latest MT-bench study [2, 3]. Plus, GPT-4 may occasionally misjudge,…

Full details in the paper: arxiv.org/abs/2306.11644 Awesome collaboration with our (also awesome) @MSFTResearch team! Cc a few authors with an active Twitter account: @EldanRonen (we follow up on his TinyStories w. Yuanzhi Li!) @JyotiAneja @sytelus @AdilSlm @YiZhangZZZ @xinw_ai
