Piyawat L Kumjorn

@plkumjorn

Research Scientist @google; previously @arg_cl @imperialcollege. Interested in #NLProc, explainable AI, human-AI collaboration. Opinions are my own.

Similar User

@nsaphra

@kaiwei_chang

@billyuchenlin

@alon_jacovi

@sarahwiegreffe

@TuhinChakr

@royschwartzNLP

@ABosselut

@boknilev

@Lianhuiq

@anmarasovic

@peterbhase

@tsvetshop

@Jiachen_Gu

@gh_marjan

What should the ACL peer review process be like in the future? Please cast your views in this survey: aclweb.org/portal/content… by 4th Nov 2024 #NLProc @ReviewAcl

The research area of interpretability in LLMs is growing very fast, and it can be very hard for beginners to know where to start and for researchers to follow the latest progress. I am trying to collect all relevant resources here: github.com/ruizheliUOA/Aw…

Thanks to everyone who participated in our tutorial & asked great questions! If you missed it, the recording is now available: tinyurl.com/explanation-tu… Slides: explanation-llm.github.io

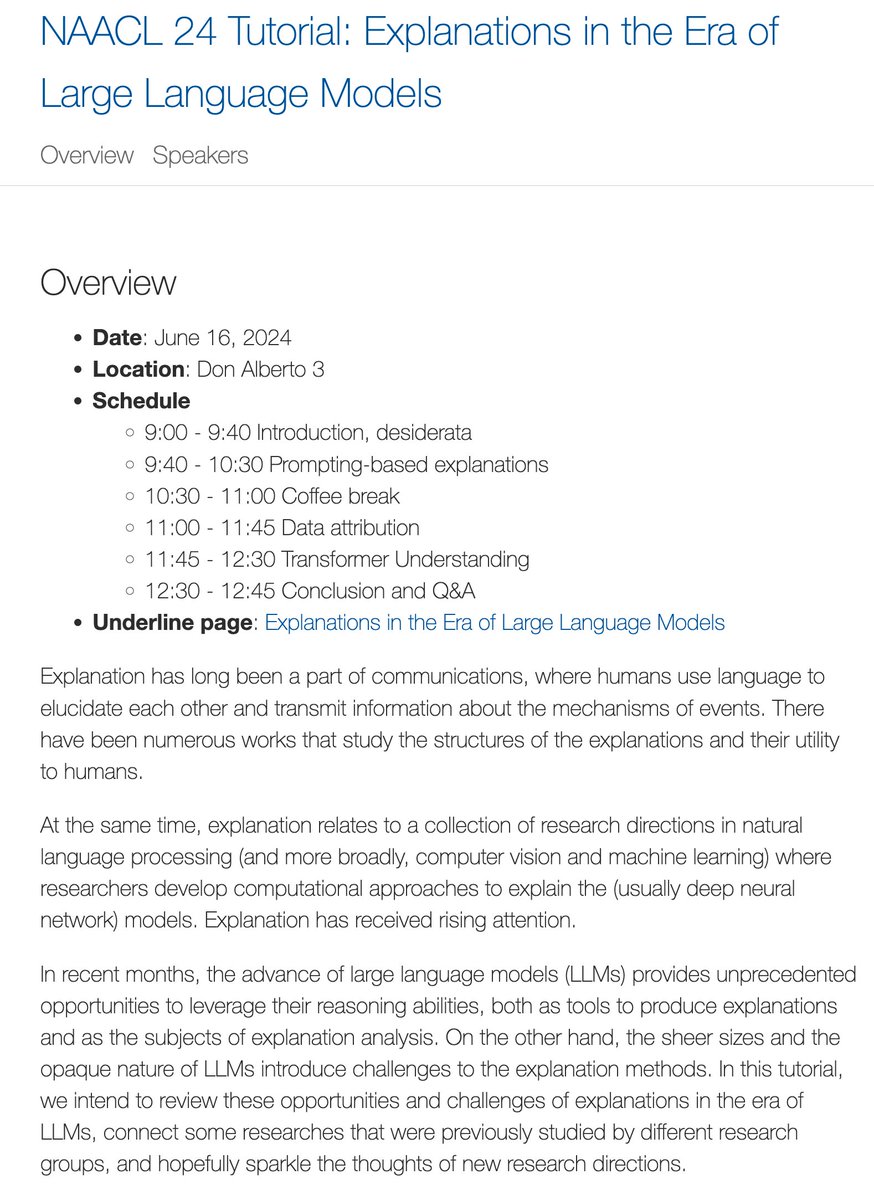

Our NAACL 24 tutorial "Explanation in the Era of Large Language Models" will be presented on June 16 morning at Don Alberto 3! The tutorial website is at explanation-llm.github.io w/ @hanjie_chen @xiye_nlp @ChenhaoTan @anmarasovic @sarahwiegreffe @VeronicaLyu

I’m joining the Columbia Computer Science faculty as an assistant professor in fall 2025, and hiring my first students this upcoming cycle!! There’s so much to understand and improve in neural systems that learn from language — come tackle this with me!

TACL News: transacl.org/index.php/tacl… TACL has always emphasized the balance of high quality review and a fast cycle reviewing process. Starting on the May 1, 2024 submission cycle, we are revising the review process with the aim

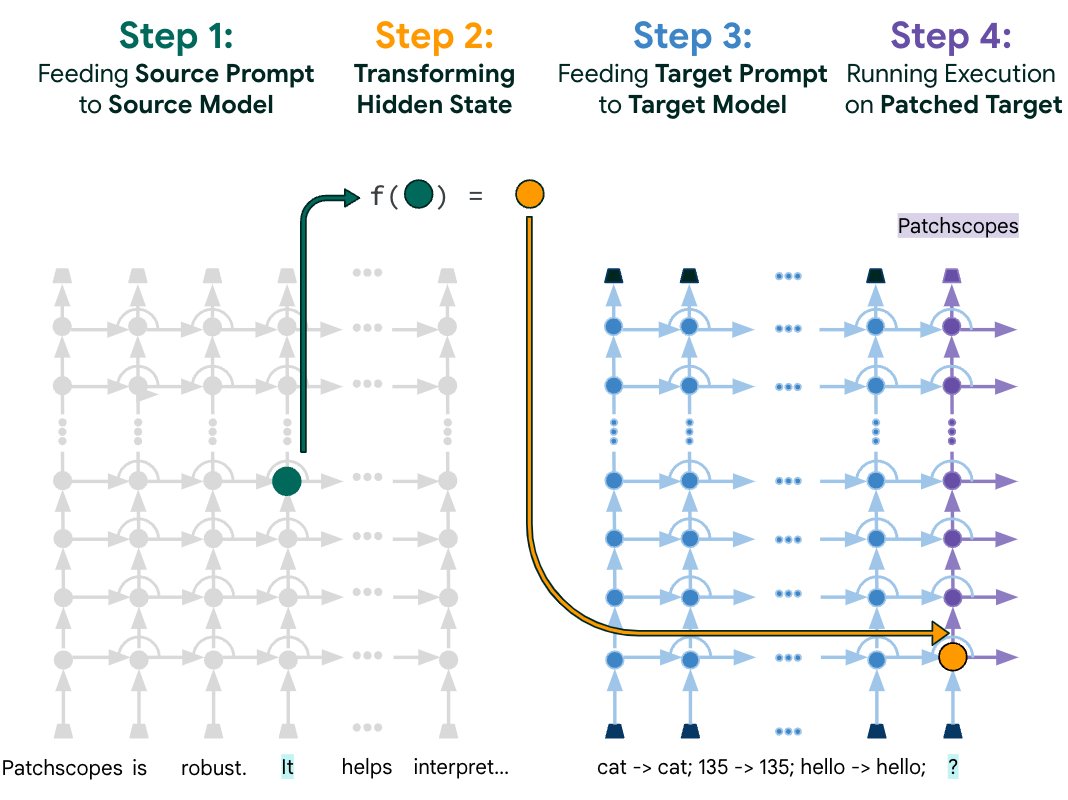

Being able to interpret an #ML model’s hidden representations is key to understanding its behavior. Today we introduce Patchscopes, an approach that trains #LLMs to provide natural language explanations of their own hidden representations. Learn more → goo.gle/4aS5epd

Super excited for the Gemma model release, and with it a new debugging tool we built on 🔥LIT - use gradient-based salience to debug and refine complex LLM prompts! ai.google.dev/responsible/mo…

Explore Gemma model’s behavior with The Learning Interpretability Tool (LIT), an open-source platform for debugging AI/ML models. ➡️ Improve prompts using saliency methods ➡️ Test hypotheses to improve model behavior ➡️ Democratize access to ML debugging goo.gle/3wsfh5F

Crazy AF. Paper studies @_akhaliq and @arankomatsuzaki paper tweets and finds those papers get 2-3x higher citation counts than control. They are now influencers 😄 Whether you like it or not, the TikTokification of academia is here! arxiv.org/abs/2401.13782

Much previous work of mine and others hinted at "something fishy" about saliency-based methods. But we never had a rigorous proof of what we saw. This work, "Impossibility Theorems for Feature Attribution", now published in PNAS, to me marks a point of new beginnings.

Excited to finally share that "Impossibility Theorems for Feature Attribution" is published in PNAS. TL;DR Methods like SHAP and IG can provably fail to beat random guessing. w/ @natashajaques @PangWeiKoh @_beenkim PNAS: pnas.org/doi/10.1073/pn… arXiv: arxiv.org/abs/2212.11870

Introducing Gemini 1.0, our most capable and general AI model yet. Built natively to be multimodal, it’s the first step in our Gemini-era of models. Gemini is optimized in three sizes - Ultra, Pro, and Nano Gemini Ultra’s performance exceeds current state-of-the-art results on…

Introducing COLM (colmweb.org) the Conference on Language Modeling. A new research venue dedicated to the theory, practice, and applications of language models. Submissions: March 15 (it's pronounced "collum" 🕊️)

ACL org announcement: 📢 The list of accepted tutorials at EACL, ACL, NAACL and EMNLP in 2024 is out 🎉 #NLProc Please help spread the word. Retweeting w/ references, especially with instructors information etc is very much appreciated - thanks! 😊

I don't know the truth and don't want to believe disturbing gossip. But it's a failure of the lab, the institute, and the research community. If your student/postdoc commits suicide, there's something terribly wrong. Please investigate and take action. @CVL_ETH @ETH_en

OKAYYY finally have a first version of slides ready, 18h before my talk! Here's a sneak peek. See you tomorrow for those that are at #DeepLearningIndaba #DLI2023 docs.google.com/presentation/d…

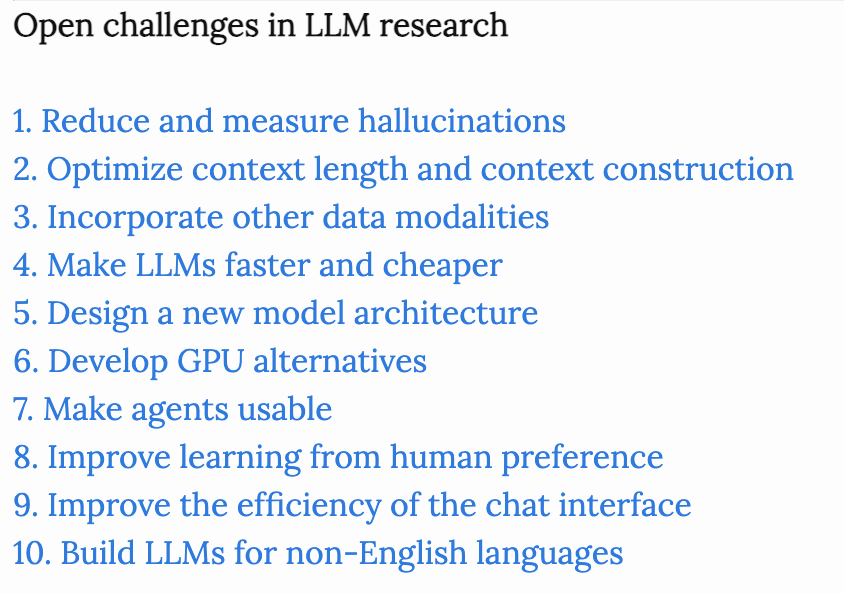

Open challenges in LLM research The first two challenges, hallucinations and context learning, are probably the most talked about today. I’m the most excited about 3 (multimodality), 5 (new architecture), and 6 (GPU alternatives). Number 5 and number 6, new architectures and…

Super excited to announce the first edition of our Regulatable ML workshop at #NeurIPS2023! Our workshop brings together AI/ML researchers, social scientists, and policy makers to discuss burning questions at the intersection of AI/ML and Policy. regulatableml.github.io [1/n]

📢📢📢 We have released the description of Eval4NLP's shared task on "Prompting LLMs as Explainable Evaluation Metrics" (for MT & summarization). Dev phase: Aug. 7 Test phase: Sep. 18 System Submission Deadline: Sep. 23 More details: eval4nlp.github.io/2023/shared-ta… 🚀🚀🚀

Prof. Gil Strang's last lecture is ... Monday! Watch it via live stream: grinfeld.org/strang/

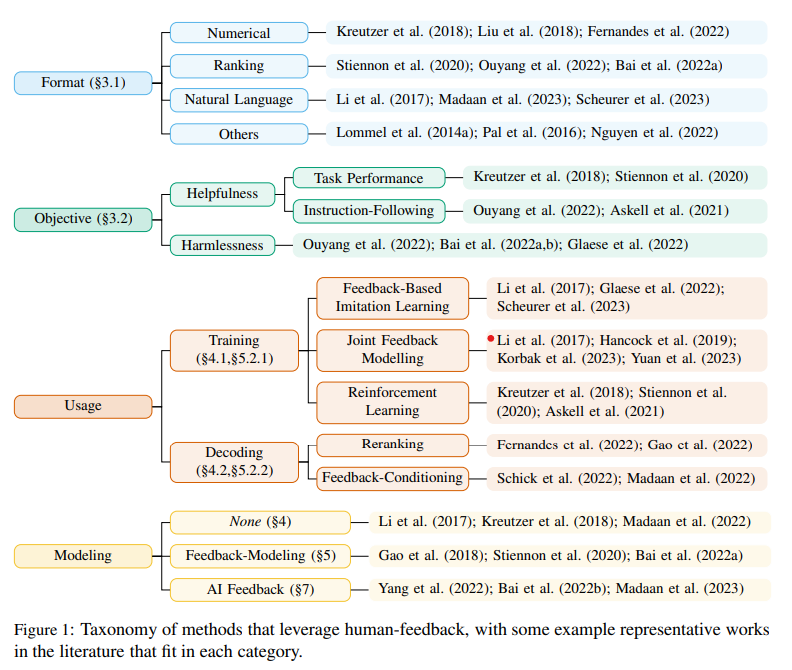

*Human feedback* was the necessary secret sauce in making #chatgpt so human-like But what exactly is feedback? And how can we leverage it to improve our models? Check out our new survey on the use of (human) feedback in Natural Language Generation! arxiv.org/abs/2305.00955 1/16


Who to follow

- Naomi Saphra 🧈🪰 (@nsaphra)

- Kai-Wei Chang (@kaiwei_chang)

- Bill Yuchen Lin 🤖 (@billyuchenlin)

- Alon Jacovi (@alon_jacovi)

- Sarah Wiegreffe (on faculty job market!) (@sarahwiegreffe)

- Tuhin Chakrabarty (@TuhinChakr)

- Roy Schwartz (@royschwartzNLP)

- Antoine Bosselut (@ABosselut)

- Yonatan Belinkov (@boknilev)

- Lianhui Qin (@Lianhuiq)

- Ana Marasović (@anmarasovic)

- Peter Hase (@peterbhase)

- tsvetshop (@tsvetshop)

- Jia-Chen Gu (@Jiachen_Gu)

- Marjan Ghazvininejad (@gh_marjan)
