Yufan Feng @ NeurIPS

@joyce_xxz

MSc @UCalgaryML | Previously @ShanghaiTechUni

1/3 Today, an anecdote shared by an invited speaker at #NeurIPS2024 left many Chinese scholars, myself included, feeling uncomfortable. As a community, I believe we should take a moment to reflect on why such remarks in public discourse can be offensive and harmful.

I'm proud that the @UCalgaryML lab will have 6 different works being presented by 6 students across #NeurIPS2024, in workshops (@unireps, @WiMLworkshop, MusiML) and the main conference! 🎉 Hope to see you at our posters/talks 🤓, full schedule at calgaryml.com 🧵(1/4)

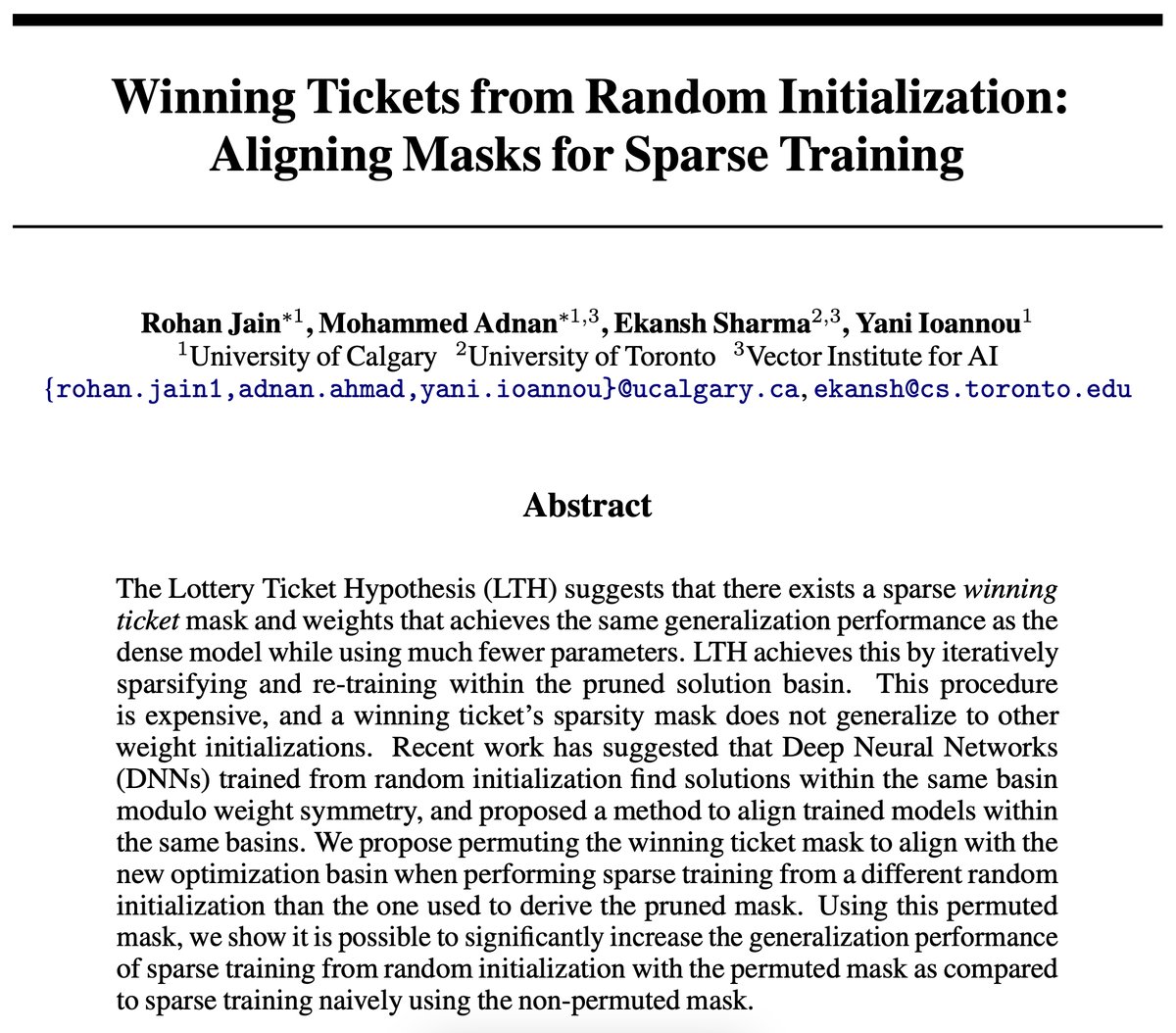

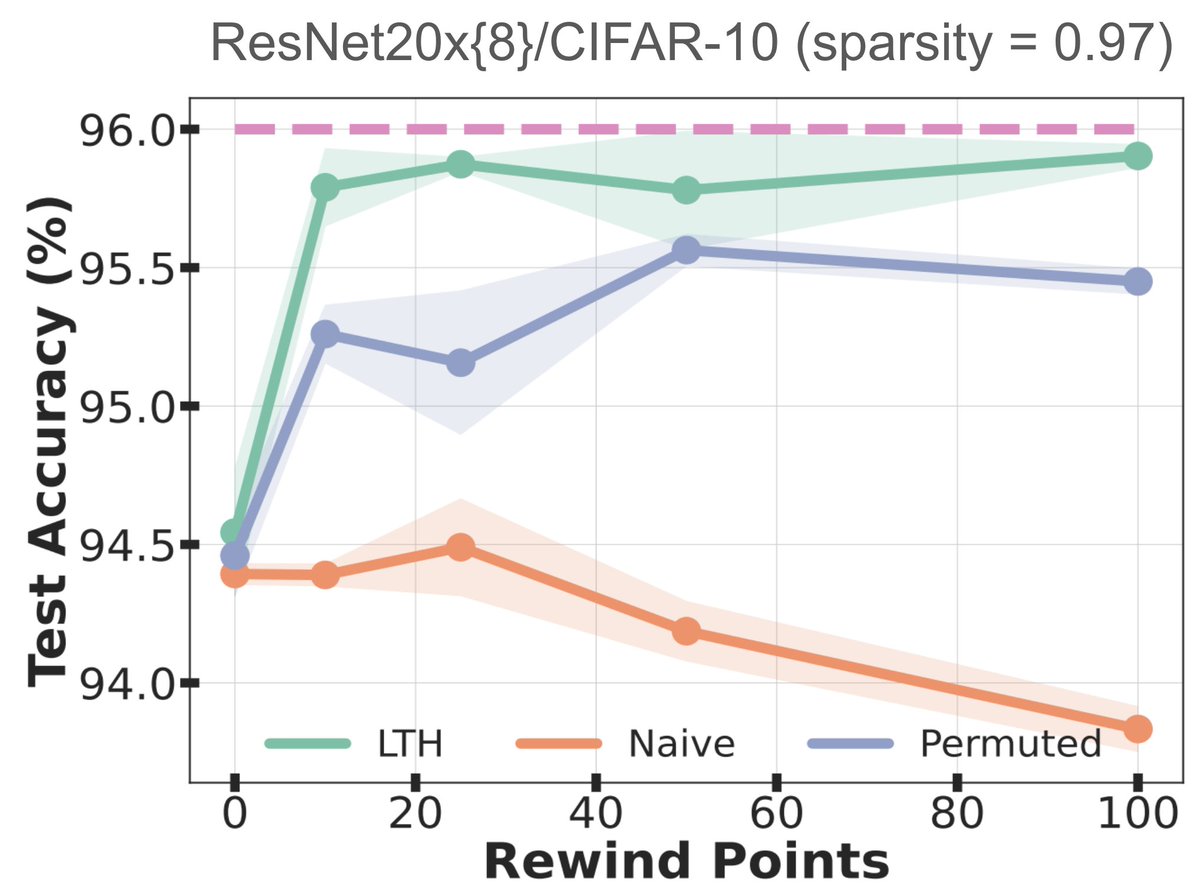

✨Our new @unireps paper tries to answer why the Lottery Ticket Hypothesis (LTH) fails to work for different random inits through the lens of weight-space symmetry. We improve the transferability of LTH masks to new random inits leveraging weight symmetries. 🧵(1/6)

Knowledge #distillation is a widely used model compression method. We explore the nuanced impact of temperature on distilled models' #fairness ⚖️. Our findings show distilled students are less fair than their teachers at typical temps, but can be more fair in some instances…🧵👇

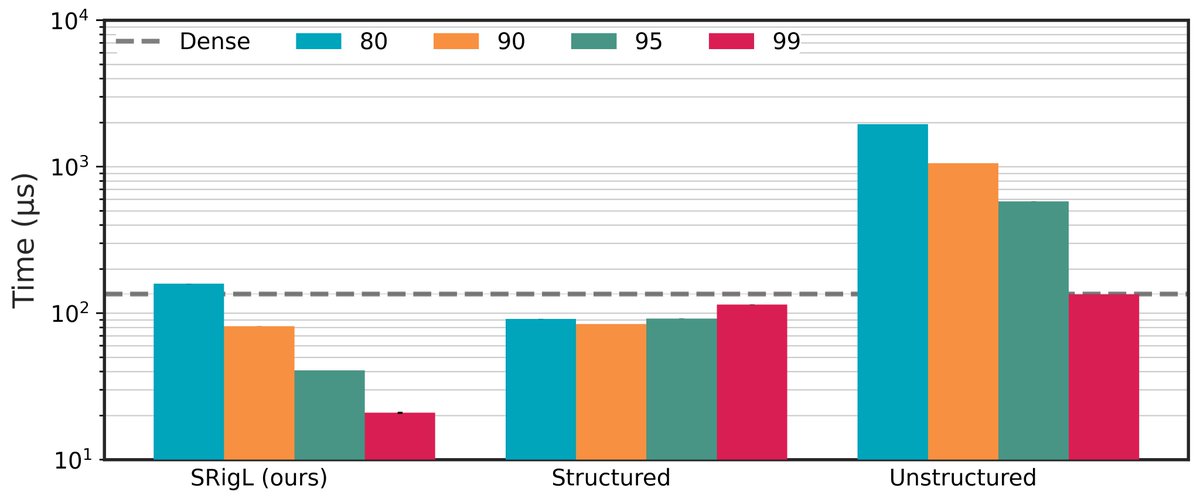

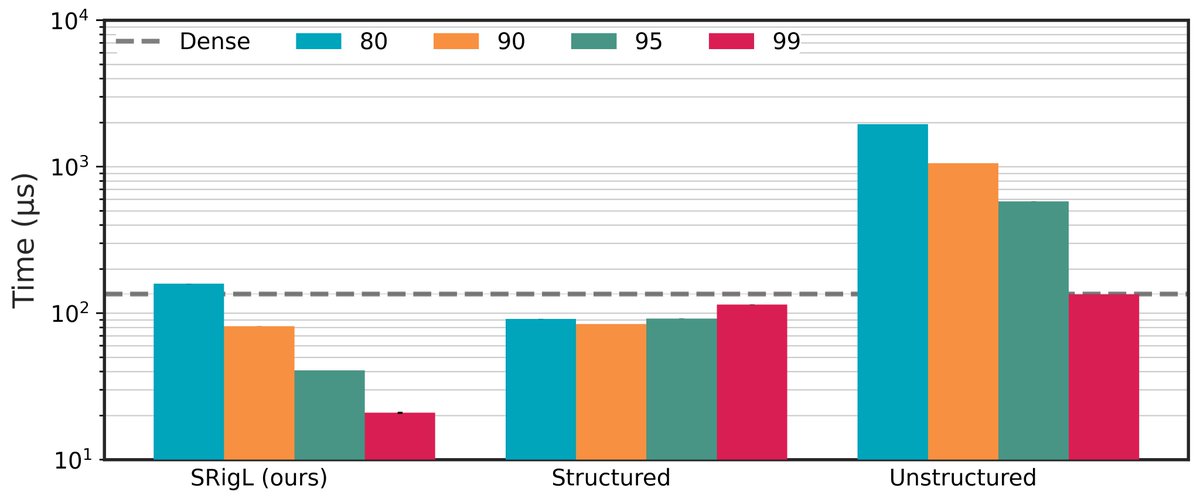

Come chat with us about our work, Dynamic Sparse Training with Structured Sparsity, tomorrow at #ICLR2024 from 4:30-6:30PM in Hall B #47. Not in Vienna? No problem. Check out our poster and a short video describing the work here: iclr.cc/virtual/2024/p… More info in 🧵

"Dynamic Sparse Training with Structured Sparsity" (openreview.net/forum?id=kOBkx…) was accepted at ICLR 2024! DST methods learn state-of-the-art sparse masks, but accelerating DNNs with unstructured masks is difficult. SRigL learns structured masks, improving real-world CPU/GPU timings

Text to video is here. And it is at the demonic phase.
