Jifeng Wu

@gulistaniabbasi

Computer Science Master's student at UBC in the Software Practices Lab

What I like about Beethoven is a grand, masculine, noble battle spirit embedded with musical sophistication that is close to absent in the works of many other Western musicians, both past and contemporary.

The only path forward for the West is an exuberant love of life and beauty. Replacing the overwhelming fear of any struggle or suffering with sheer desire to live and thrive. Simplistic return to the past is a dead end. So is drab Puritan workism, and imitation of "closed"…

I've said it before, the one thing that truly defines the West against all other cultures is that profound openness and abstract interest in things. The iconic Westerner learns Sanskrit. He discovers a new beetle species in New Guinea. He goes to the source of the Nile.

Anywhere you can find some forgotten artifact, rare text, or dying language, a Westerner is studying it. The first critical edition of the Masnawi was done by a Briton (Nicholson).

The reason the Space Race captures the imagination of so many Americans is because for a short period of time it reopened the Frontier. The Frontier had always been a defining thing in the American mythos, but with the closing of the Frontier that spirit died off. For a brief…

"dataset is all you need"

With long-context LLMs, the ROI on documenting your life has gone massively up. You can load up your diary, photos, and even emails and texts and write all sorts of useful software to find patterns, do reflections, ask the LLM for advice, or just have an "ask my life" app.

i’m a strong believer in the power of “granny hobbies” to improve your mental health (and detox from digital everything) reading, knitting, gardening, crafting, cooking, baking, board games, sewing, birdwatching, playing cards, drinking tea …

"LARP" is what we are, always has been. Every great man, and every great civilization, has emulated the greatness that came before.

"LARP" is a powerful critique, but sometimes it's not a terrible thing. Knew a girl in highschool, very vapid, very shallow. Had careerist ambitions. Got married and got into head coverings and trad memes. LARP? I'm helping her husband and five kids slaughter hogs this weekend.

💯

I’m 25 and tomorrow is the first day of my new career. After an unnecessarily hectic year in tech/ML, I’m out. I’ve decided that the industry is on track to become something I don’t want to participate in. Labor will be squeezed between LLMs, increasing amounts of insider…

💯

Your average CTO is commenting “nit fix: add space” and refusing to code because he’s too senior. My CTO is reviewing PRs while standing in line at Chipotle.

10x word marshalling efficiency, 10x throwaway scripting efficiency, barely any changes in writing novel code

Great piece of history: why Linux utilities tend to run poorly on Windows. github.com/microsoft/WSL/…

I see `git clone` is not exactly a speed demon on Windows or am I doing it wrong?

using a telephoto lens and focusing on the painting with the people in front of you out of focus would make a great composition with a great sense of space

This is what it's like to visit the Mona Lisa. But why is it so famous and what makes it such a masterpiece? 1. It was the product of years of meticulous iteration. It was Leonardo da Vinci's pièce de résistance - for 16 years he carried it with him and improved it, adding…

woah > There’s an old book, Etudes for Programmers by Charles Wetherell that is similar to this. Projects include compiler, interpreter, loader, compression, game AI, symbolic algebra, etc. I think Knuth or Norvig recommended it, hard to find a physical copy though.

i still have that and office 2007 on a windows xp qemu virtual machine, could possibly run forever😀

Funsearch paper admits that the external verifier is critical. This reinforces our point that LLMs are great idea generators--but can't verify anything themselves. They have to be used in an LLM-Modulo fashion working in conjunction with verifiers. From Fermat to Ramanujan,…

Awesome Latent Space Visualization

The landscape of the Machine Learning section of ArXiv.

I feel like programming is ~60% developing the right mental model, ~40% debugging and testing, and <1% writing code. At least when you're solving a novel problem.

Ayn Rand, whose writing I generally don't have much time for, saw the problem in 1969 clearly courses.aynrand.org/works/apollo-a…

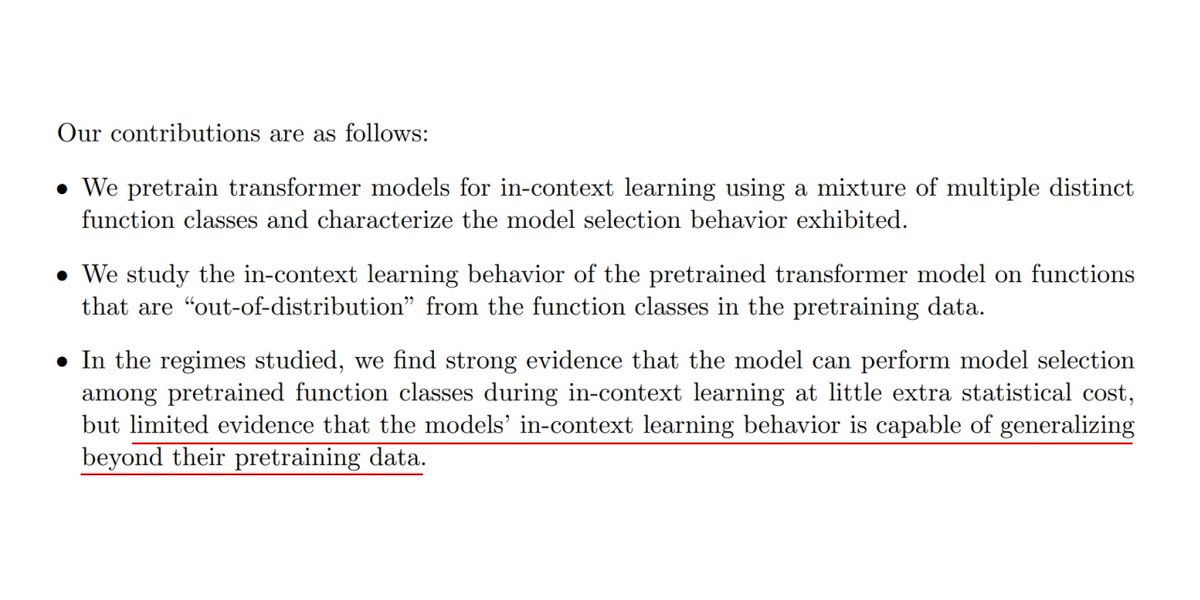

Ummm ... why is this a surprise? Transformers are not elixirs. Machine learning 101: gotta cover the test distribution in training! LLMs work so well because they are trained on (almost) all text distribution of tasks that we care about. That's why data quality is number 1…

New paper by Google provides evidence that transformers (GPT, etc) cannot generalize beyond their training data

totally agree! on the other hand, deep learning may not be the elixir to every problem out there.

Deep learning lessons (known in 2016 but starkly confirmed since):
1. Scalable ideas > clever ideas
2. Improving the dataset > improving the model
3. Engineering chops > academic chops
