alewkowycz

@alewkowycz · Member of Technical Staff at @inflectionAI. Former Research Scientist @Google. In a previous life, I did String Theory. Language models and Conversational AI.

Similar User

@tri_dao

@janleike

@srush_nlp

@barret_zoph

@SebastienBubeck

@jluan

@_beenkim

@bneyshabur

@sirbayes

@giffmana

@hila_chefer

@RichardSSutton

@DaniYogatama

@poolio

@markchen90

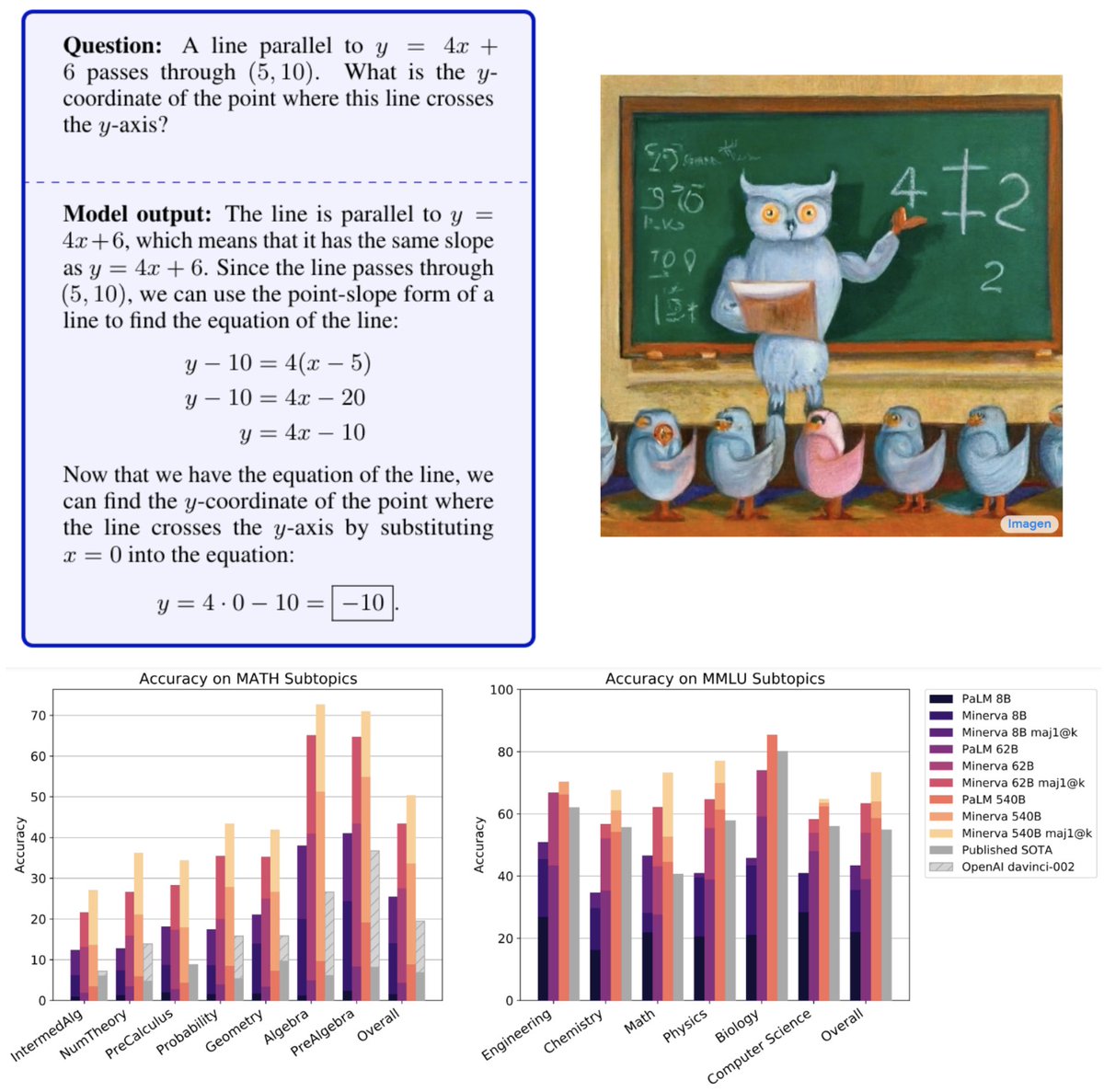

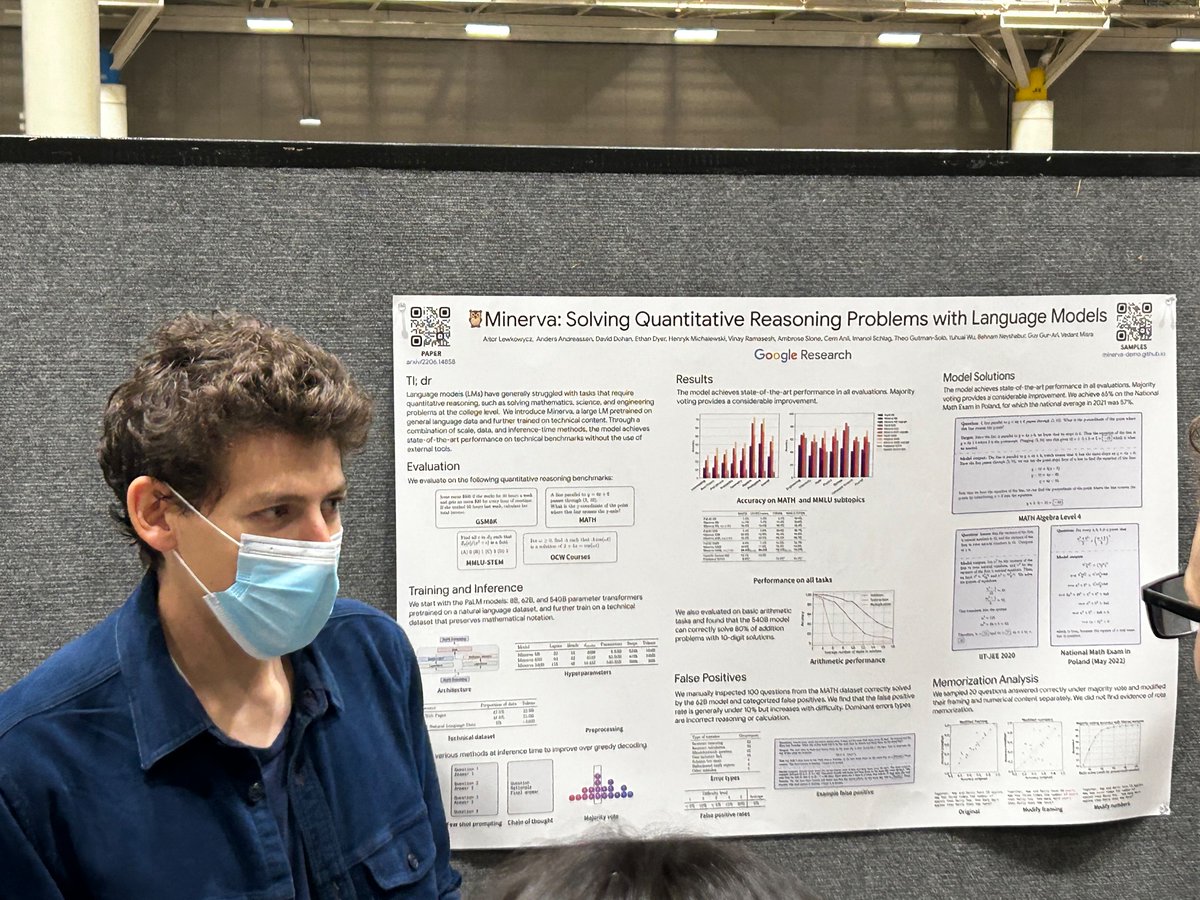

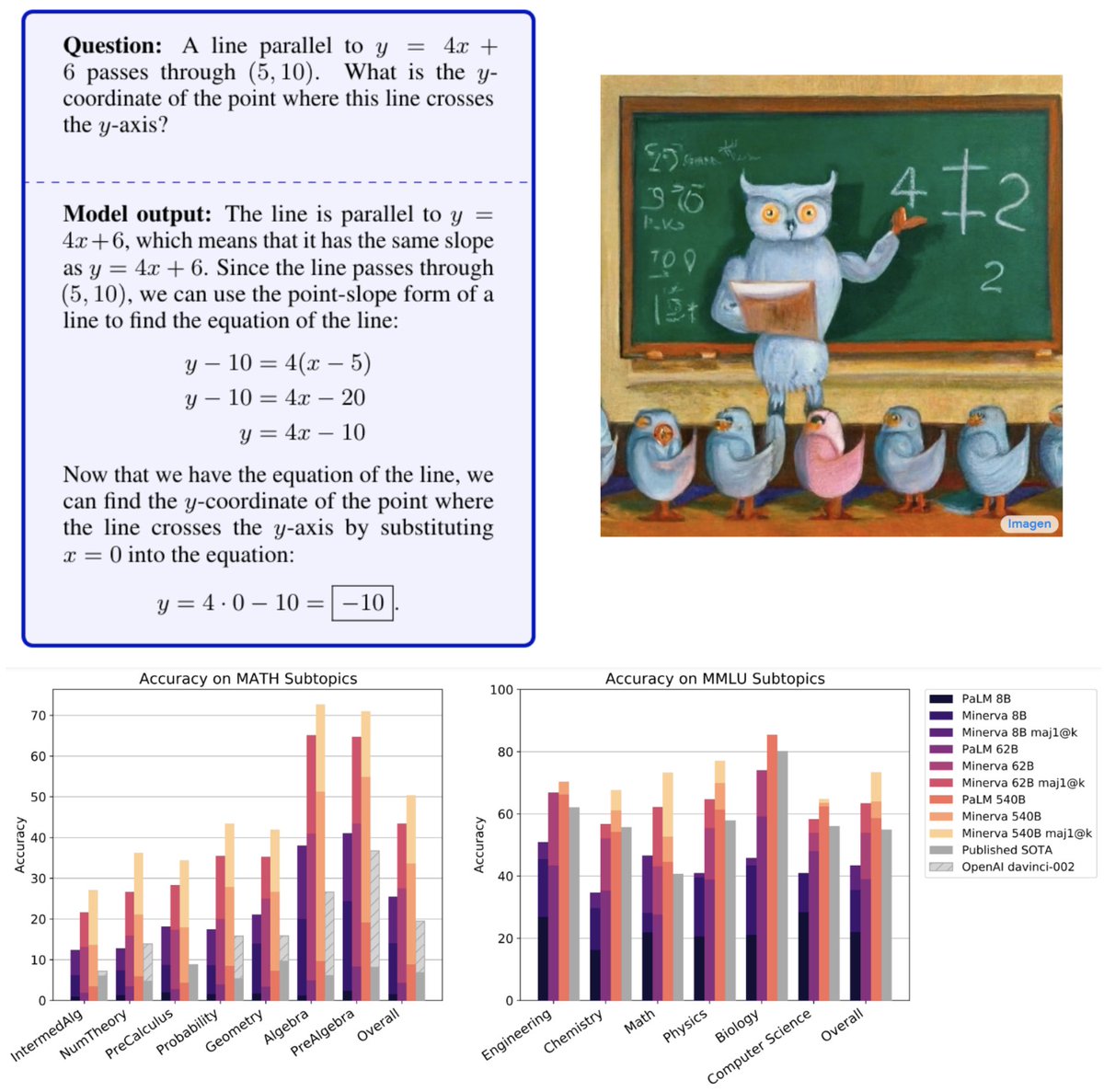

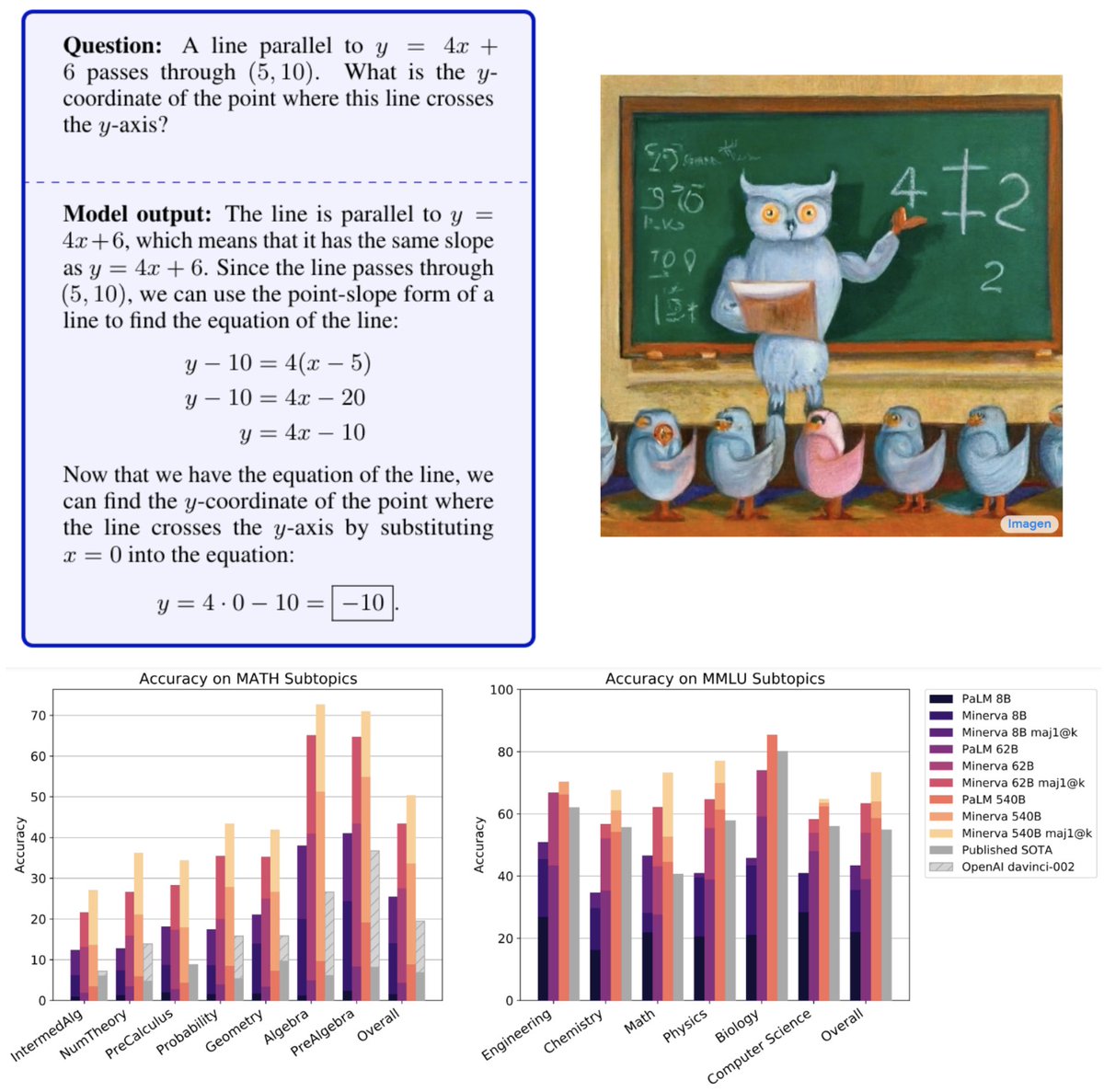

Very excited to present Minerva🦉: a language model capable of solving mathematical questions using step-by-step natural language reasoning. Combining scale, data and other techniques dramatically improves performance on the STEM benchmarks MATH and MMLU-STEM. goo.gle/3yGpTN7

I’ve joined @Microsoft AI to advance the frontier of large scale multimodal AI research and to build products for people to achieve meaningful goals and dreams. The MAI team is small, but well resourced and ambitious. We are now looking for exceptional ICs, who like to ship. If…

Will be joining Microsoft AI to build the next generation of consumer AI!!! 🚀🚀

Welcome to Microsoft, @mustafasuleyman Thrilled to have you lead Microsoft AI as we build consumer AI, like Copilot, that is loved by and benefits people around the world.

Today at Inflection we are announcing some important updates. A new phase for the company begins now. Read more here: inflection.ai/the-new-inflec…

Really proud of the team 🚀🚀

🎉 Introducing Inflection-2, the 2nd best LLM in the world! Get ready to experience the future of AI with us. bit.ly/3TaUpcD

Excited about the future of @inflectionAI !

Excited to announce that we’ve raised $1.3B to build one of the largest clusters in the world and turbocharge the creation of Pi, your personal AI. forbes.com/sites/alexkonr…

We have amazing results to announce! Inflection-1 is our new best-in-class LLM powering Pi, outperforming GPT-3.5, LLaMA and PaLM-540B on major benchmarks commonly used for comparing LLMs. inflection.ai/inflection-1

We are hiring! We develop our own LLMs entirely in house. Our models are currently at SOTA across a very wide range of tasks. If you want to work on some of the best and largest in-production AI systems in the world, just get in touch... inflection.ai/careers

Happy to share Pi with the world! Grateful to be part of such an amazing team!

Minerva author on AI solving math:

- IMO gold by 2026 seems reasonable
- superhuman math in 2026 not crazy
- auto-formalizing is unimpressive to mathematicians, as most important theorems are hard to formalize

Happy to release our work on Language Model Cascades. Read on to learn how we can unify existing methods for interacting models (scratchpad/chain of thought, verifiers, tool-use, …) in the language of probabilistic programming. paper: arxiv.org/abs/2207.10342

@ ICML workshops til Sunday! Come by beyond-bayes.github.io workshop Friday @ 9:40am for our talk, with posters @ 5pm. You'll learn how probabilistic programming lets us formalize models talking to models ("model cascades"), unifying many approaches to prompting and inference.

After almost three great years at Google, I decided to move on to my next adventure at @inflectionAI to work on conversational AI. Thanks to everyone at Blueshift and Brain for such great times!

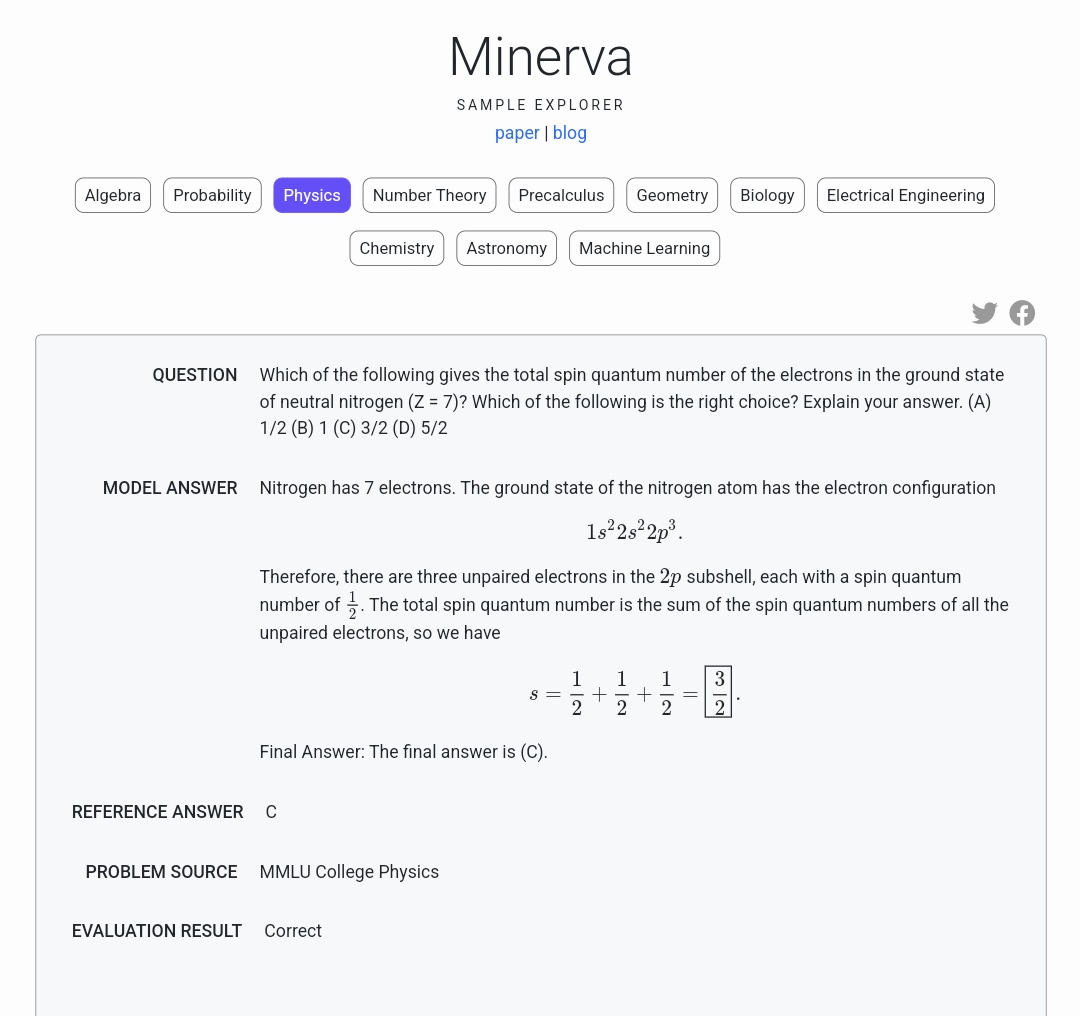

✨🧮 Am getting a kick out of reviewing the examples in Minerva's sample explorer: minerva-demo.github.io/#category=Phys… Anybody want to take bets on how long it will take to have an automated Physics, Chemistry, or Calculus homework checker as a service? 😂 📄: ai.googleblog.com/2022/06/minerv…

AI lawyer (student?) coming soon?

Pile of Law: Learning Responsible Data Filtering from the Law and a 256GB Open-Source Legal Dataset abs: arxiv.org/abs/2207.00220 ∼256GB dataset of open-source English-language legal and administrative data, covering court opinions, contracts, administrative rules, etc.

Interestingly, forecasters' biggest miss was on the MATH dataset, where @alewkowycz @ethansdyer and others set a record of 50.3% on the very last day of June! One day made a huge difference.

With current evaluations, the closest application seems education. Really curious what these models can do in terms of knowledge generation. Even if these models are interpolating in a large space, there are many holes to fill in the scientific knowledge graph!

The paper focuses on quantifiable problem solving, but 🦉 does great at explaining technical concepts. It has read all of the arXiv, after all. Curious about REINFORCE? Just prompt it to write a paper on it: `\section{A derivation of the score function gradient estimator}`

The paper focuses on quantifiable problem solving, but 🦉does great at explaining technical concepts. It has read all of the arXiv after all. Curious about REINFORCE? Just prompt it to write a paper on it: `\section{A derivation of the score function gradient estimator}`

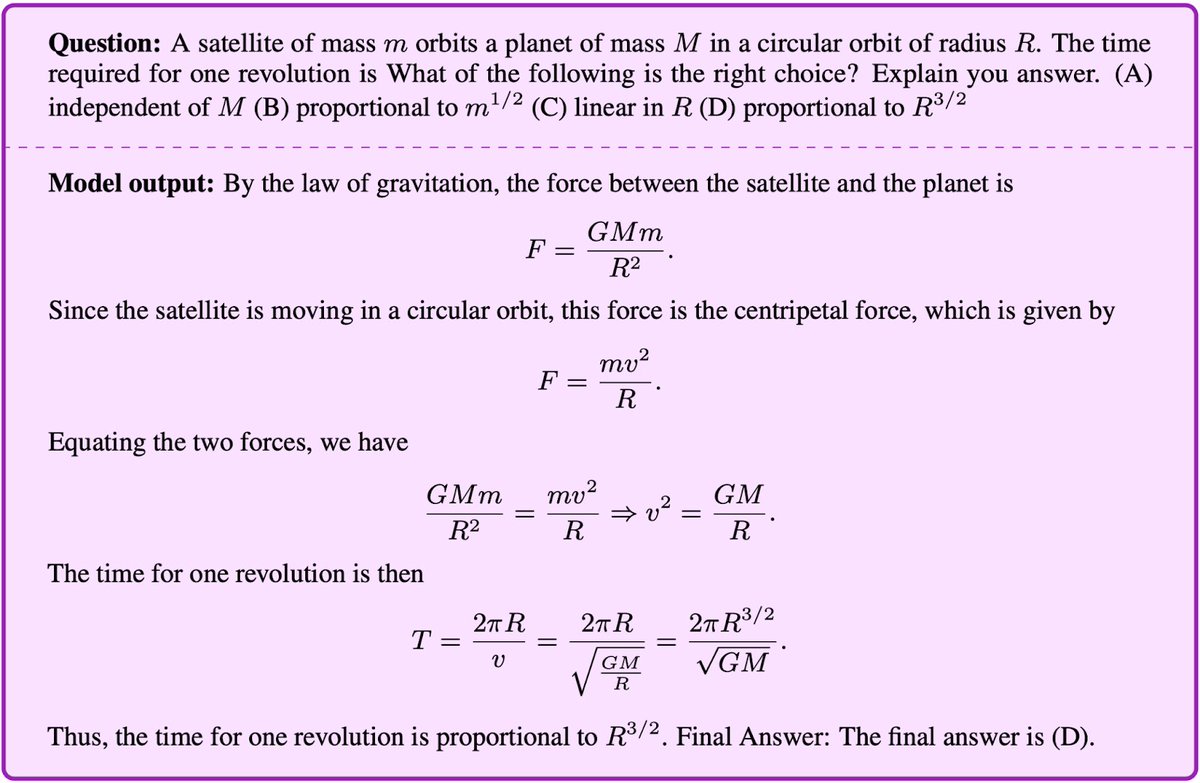

1/ Super excited to introduce #Minerva 🦉 (goo.gle/3yGpTN7). Minerva was trained on math and science found on the web and can solve many multi-step quantitative reasoning problems.


My buddy @alewkowycz's ultimate revenge. Leave physics and then work on putting me out of a job as well!


Large language models are continuing their (a bit surprisingly) rapid advances, here in solving math/STEM problems, without substantial architecture modifications or paradigm shifts. "The main novelty of this paper is a large training dataset", and fine-tuning on top of PaLM 540B.
