Varun Kapoor

@__setitem__

Staff ML engineer at Kietzmann Lab, founder of non-profit @kapoorlabs

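Similar users

- Victoria Bosch (@__init_self)
- Dirk Gütlin (@DirkGutlin)
- Philip Sulewski (@PhilipSulewski)
- Daniel Anthes (@AnthesDaniel)
- Viviane Clay (@vkakerbeck)
- Rowan Sommers (@RowanSommers)
- Thirza Dado (@ThirzaDado)
- Mathis Pink (@MathisPink)
- Lu-Chun Yeh (@LuChunYeh)
- Johannes Roth (@andropar)
- Lenny van Dyck (@levandyck)
- Florian Mahner (@florianmahner)
- Laura Stalenhoef (@StalenhoefLaura)
- Maëlle Lerebourg (@MaelleLerebourg)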

We are announcing that PyTorch will stop publishing Anaconda packages on PyTorch’s official Anaconda channels. For more information, please refer to the following post on dev-discuss: dev-discuss.pytorch.org/t/pytorch-depr…

The 'ResNet Strikes Back' paper is so good. We should try to create better (and simpler) training procedures more often. Our paper 'Simplifying neural network training under class imbalance' was an attempt to do it for imbalanced training. arxiv.org/abs/2312.02517

I released timm 1.0.8 last night. The recent MobileNet baseline weights are part of the release; check out my previous tweet. It's a small update with several new MobileNet-v4 anti-aliased and in12k models & weights. Additionally, more flexibility for vit & swin image size...

Python in Neuroscience and Academic Labs #python talkpython.fm/episodes/show/…

🐍🎧 Constraint Programming & Exploring Python's Built-in Functions What are discrete optimization problems? How do you solve them with constraint programming in Python? @cltrudeau is back bringing another batch of PyCoder's Weekly articles and projects. realpython.com/podcasts/rpp/2…

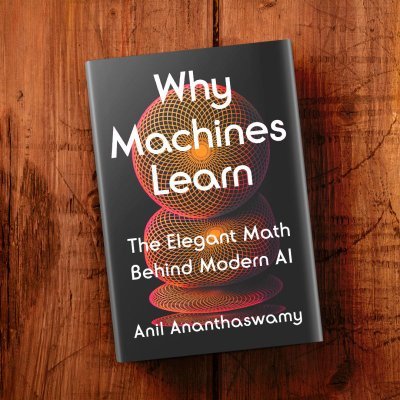

As you read this, both your phone’s software and your brain’s neural networks are operating in predictable ways even though the electrons’ trajectories and neuronal firing patterns are never exactly the same. Scientists are working out how this is possible. quantamagazine.org/the-new-math-o…

Awesome feeling to exercise brain muscles in tandem with other muscles. Today's topic of choice: DeepGaze I

Excited to announce the second iteration of NEAT (Neuro-AI-Talks), which will take place September 2nd-3rd 2024 in Osnabrück. kietzmannlab.org/neat2024 Never heard of it? Let me tell you what this is about 🧵

I swear, if I train a model, I'm just going to use @pytorch and @LightningAI... every other lib is so unserious

🤝 Solve tasks and develop AI solutions with the help of automated workflows, where humans and AI models work together.

aMUSEd's small size opens up doors to local hacking. Just ran it on my local M2, and it went like a breeze with a batch size of 4 and 12 steps. Check out aMUSEd here 👉 huggingface.co/blog/amused

KerasCV now includes a Segment Anything implementation, which enables you to do image segmentation using only "prompt points". No training data needed. With the JAX backend, it runs ~5x faster on GPU than the original PyTorch implementation. Told you JAX was fast. Guide:…

This is one of the hidden secrets of scalable research labs: creating template experimental codebases that allow fast testing of wild research ideas. You need your experiment, data, compute, and model abstractions just right 1/

It also follows that the returns to making simple codebases for testing research ideas must be really high, and that the returns to making AI engineering tools are enormously high.

#NEAT2023 was a blast, thanks to everyone joining us in Osnabrück for a day of interaction, collaboration and neuroAI

Inside the Matrix: Use 3D to visualize matrix multiplication expressions, attention heads with real weights, and more. ⚡ Read our latest post on visualizing matrix multiplication, attention and beyond: hubs.ly/Q0230BWD0

NEW PAPER 🧵: Deep neural networks are complicated, but looking inside them ought to be simple. In this paper we introduce TorchLens, a package for extracting all activations and metadata from any #PyTorch model, and visualizing its structure, in as little as one line of code. 1/

As some of you have asked, here is a link to my CCN keynote from this year. Main topic: how we use ANNs as a language to describe, implement, and test theories of brain computations. #neuroconnectionism youtube.com/watch?v=pwFh_b…

Folks using @weights_biases with @pytorchlightnin via our WandbLogger face a misalignment issue: the `global_step` is not the same as wandb's internal `step`. This makes it hard to compare a training run with a training+validation run. The solution is simple. Check out this video:
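The tweet leaves the fix to the video. As a hedged illustration only, here is a toy, library-free simulation of why the two counters drift apart. `ToyRunLogger`, `simulate_run`, `train_rows`, and the `trainer/global_step` column name are all hypothetical; the one behaviour borrowed from wandb is that each `wandb.log` call advances its internal step by default. Plotting against a logged global-step column (which wandb supports via `wandb.define_metric(..., step_metric=...)`) is one common remedy, not necessarily the one shown in the video.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ToyRunLogger:
    """Hypothetical stand-in for an experiment tracker whose internal step
    auto-increments on every log() call, similar to wandb.log's default."""
    step: int = 0
    history: List[Dict[str, float]] = field(default_factory=list)

    def log(self, metrics: Dict[str, float]) -> None:
        # Record the row under the current internal step, then advance it.
        self.history.append({"_step": self.step, **metrics})
        self.step += 1


def simulate_run(num_steps: int, val_every: Optional[int] = None) -> ToyRunLogger:
    """Log one training row per optimizer step, plus an extra validation
    row every `val_every` steps if requested."""
    logger = ToyRunLogger()
    for global_step in range(num_steps):
        logger.log({"trainer/global_step": global_step, "train/loss": 1.0 / (global_step + 1)})
        if val_every is not None and (global_step + 1) % val_every == 0:
            # The extra log call advances the internal step a second time.
            logger.log({"trainer/global_step": global_step, "val/loss": 0.5})
    return logger


def train_rows(logger: ToyRunLogger) -> List[Dict[str, float]]:
    """Keep only the rows that carry a training loss."""
    return [row for row in logger.history if "train/loss" in row]


if __name__ == "__main__":
    train_only = simulate_run(10)
    train_and_val = simulate_run(10, val_every=5)

    # x-axis = internal step: the same training step lands at different x values.
    print([r["_step"] for r in train_rows(train_only)])
    print([r["_step"] for r in train_rows(train_and_val)])

    # x-axis = logged global step: both runs line up again.
    print([r["trainer/global_step"] for r in train_rows(train_only)])
    print([r["trainer/global_step"] for r in train_rows(train_and_val)])
```

Running it prints `[0, 1, ..., 9]` versus `[0, 1, 2, 3, 4, 6, 7, 8, 9, 10]` for the internal step, but identical global-step lists: the misalignment in miniature.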


