Similar Users

@xuhaiya2483846

@BrainFosterTech

@YeasirAhmad

@Uzimaempower1

@Rhett_Route

@hulldaniel67

@DPedrisco2

@EngrDrAkhabue

@Crispy_Catto_AD

@joonkim_cs

@mimiskookie

@JiacenXu

@BkWireless2

@A1lis

@BenceHalpern

How do LLMs learn to reason from data? Are they ~retrieving the answers from parametric knowledge🦜? In our new preprint, we look at the pretraining data and find evidence against this: Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢 🧵⬇️

Model Garden by Google Cloud is badass; just be careful with your API key security, lmao. You can register for free and get $300 worth of credits. That's a lot to play with, and they have all the models shown in the picture below. I recommend the free trial; no payment required.

🚀 Big news! We’re thrilled to announce the launch of Llama 3.2 Vision Models & Llama Stack on Together AI. 🎉 Free access to Llama 3.2 Vision Model for developers to build and innovate with open source AI. api.together.ai/playground/cha… ➡️ Learn more in the blog…

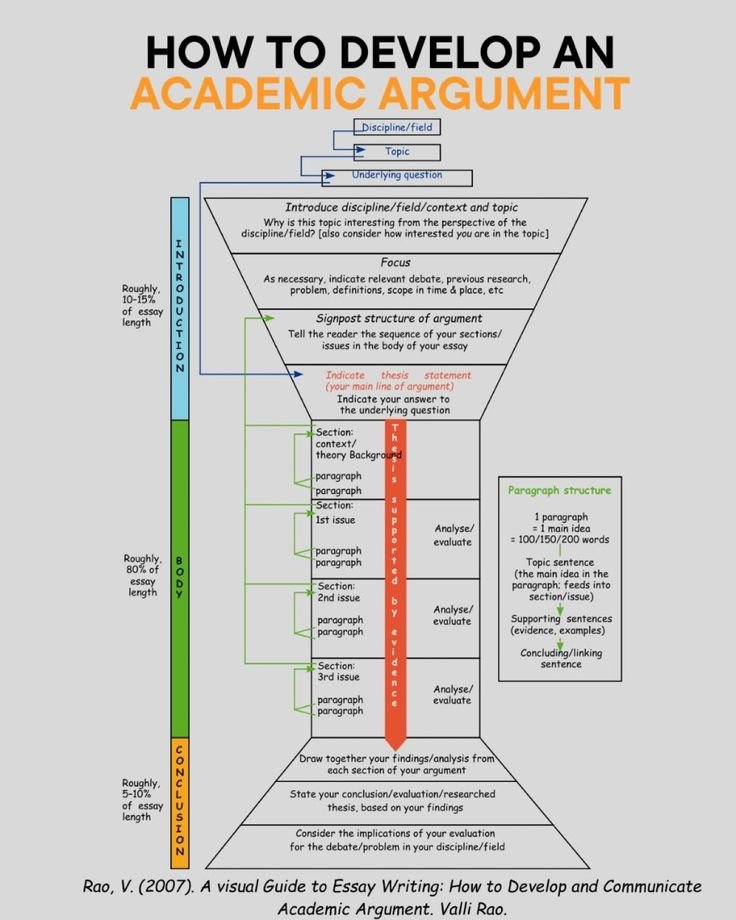

How to write a Research Proposal (1/4)

What is a 𝗩𝗲𝗰𝘁𝗼𝗿 𝗗𝗮𝘁𝗮𝗯𝗮𝘀𝗲? With the rise of Foundation Models, Vector Databases skyrocketed in popularity. The truth is that a Vector Database is also useful outside of a Large Language Model context. When it comes to Machine Learning, we often deal with Vector…
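The post is cut off, but the core query a vector database answers is "which stored vectors are closest to this one?" Below is a minimal sketch, assuming toy 3-dimensional embeddings and exact brute-force cosine-similarity search. The `TinyVectorStore` class and its methods are hypothetical. Production vector databases typically use approximate nearest-neighbor indexes rather than scanning every vector.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

class TinyVectorStore:
    """Hypothetical in-memory store that does exact (brute-force) similarity search."""

    def __init__(self):
        self._ids = []
        self._vectors = []

    def add(self, item_id, vector):
        # Store the id alongside its embedding vector.
        self._ids.append(item_id)
        self._vectors.append(list(vector))

    def search(self, query, k=2):
        # Score every stored vector against the query, then return the k best ids.
        scored = [(cosine_similarity(query, v), item_id)
                  for item_id, v in zip(self._ids, self._vectors)]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [item_id for _, item_id in scored[:k]]

# Toy embeddings (made up for illustration): "cat" and "kitten" point in similar directions.
store = TinyVectorStore()
store.add("cat", [1.0, 0.0, 0.0])
store.add("kitten", [0.9, 0.1, 0.0])
store.add("car", [0.0, 0.0, 1.0])
print(store.search([1.0, 0.05, 0.0], k=2))  # → ['cat', 'kitten']
```

Cosine similarity ignores vector length and compares only direction, which is a common choice for text embeddings.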

Writing a scientific article: A step-by-step guide for beginners (1/7)

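I built an immersive English shadowing app that uses AI voice detection to create a whole new read-along experience. After the user repeats a sentence, the app automatically plays the next one, so you can shadow in one continuous, immersive flow. Over the past 20 hours I kept polishing details and fixing bugs, and the site is finally live! Please comment and share; your support is what keeps me iterating! @blackanger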

Run evals directly from the OpenAI dashboard. Use your test data to compare model performance, iterate on prompts, and improve outputs. platform.openai.com/docs/guides/ev… Here's a quick walkthrough:

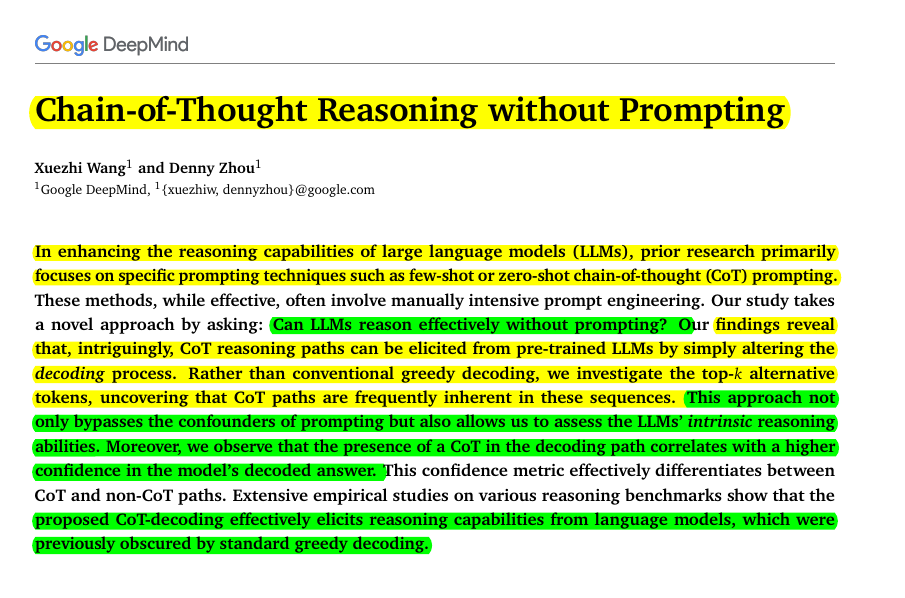

Can LLMs reason effectively without prompting? Great paper by @GoogleDeepMind. By considering multiple paths during decoding, LLMs show improved reasoning without special prompts, revealing their natural reasoning capabilities. LLMs can reason better by exploring multiple…
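The post only summarizes the decoding idea. Below is a minimal sketch of branching decoding, assuming a hypothetical hard-coded `TOY_MODEL` in place of a real LLM. The sketch branches on the top-k first tokens, continues each path greedily, and keeps the path whose average gap between the top-1 and top-2 next-token probabilities is largest. This confidence score is a simplification that averages over every decoding step. All names and probabilities here are made up for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical toy next-token distributions, keyed by the tokens generated so far.
# A real system would query a language model here instead.
TOY_MODEL: Dict[Tuple[str, ...], Dict[str, float]] = {
    (): {"8": 0.45, "I": 0.35, "5": 0.20},
    ("8",): {"<eos>": 0.55, "apples": 0.45},
    ("I",): {"have": 0.95, "<eos>": 0.05},
    ("I", "have"): {"5": 0.90, "8": 0.10},
    ("I", "have", "5"): {"<eos>": 0.97, "apples": 0.03},
    ("5",): {"<eos>": 0.60, "apples": 0.40},
}

def top_token_and_margin(dist: Dict[str, float]) -> Tuple[str, float]:
    """Return the most likely token and its probability gap over the runner-up."""
    ranked = sorted(dist.items(), key=lambda kv: kv[1], reverse=True)
    second = ranked[1][1] if len(ranked) > 1 else 0.0
    return ranked[0][0], ranked[0][1] - second

def greedy_continue(prefix: List[str], max_len: int = 10) -> Tuple[List[str], List[float]]:
    """Greedily extend a prefix, recording the top-1/top-2 margin at each step."""
    tokens = list(prefix)
    margins: List[float] = []
    while len(tokens) < max_len:
        dist = TOY_MODEL.get(tuple(tokens))
        if dist is None:
            break
        token, margin = top_token_and_margin(dist)
        margins.append(margin)
        if token == "<eos>":
            break
        tokens.append(token)
    return tokens, margins

def branch_decode(k: int = 3) -> Tuple[float, List[str]]:
    """Branch on the top-k first tokens and keep the most confident greedy path."""
    first = sorted(TOY_MODEL[()].items(), key=lambda kv: kv[1], reverse=True)[:k]
    paths = []
    for token, _ in first:
        tokens, margins = greedy_continue([token])
        confidence = sum(margins) / len(margins) if margins else 0.0
        paths.append((confidence, tokens))
    return max(paths, key=lambda p: p[0])

print(branch_decode(k=3))
```

In this toy setup, plain greedy decoding would commit to the first token `"8"` and stop with a low margin. Branching lets the longer path `["I", "have", "5"]` win on confidence.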

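Found a powerful, offline-capable, open-source AI desktop app on GitHub: ScreenPipe. It can monitor your computer 24 hours a day, collecting information through screen recording, OCR, audio input, and transcription, and saving it all to a local database. GitHub: github.com/mediar-ai/scre… You can then use LLMs to chat about, summarize, and review everything you've done on your computer. Pretty wild!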

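I've recently switched the proxy tool on all my computers to Mihomo Party, and I strongly recommend it. 1. Open source and free, supports Windows, macOS, and Linux; the download offers a Chinese-language version right away, which is very beginner-friendly 2. Built on Clash, with seamless support for Clash config files 3. Clean, easy-to-use interface with very detailed onboarding as soon as you open it 4. Built-in Sub-Store. Official download: mihomo.party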

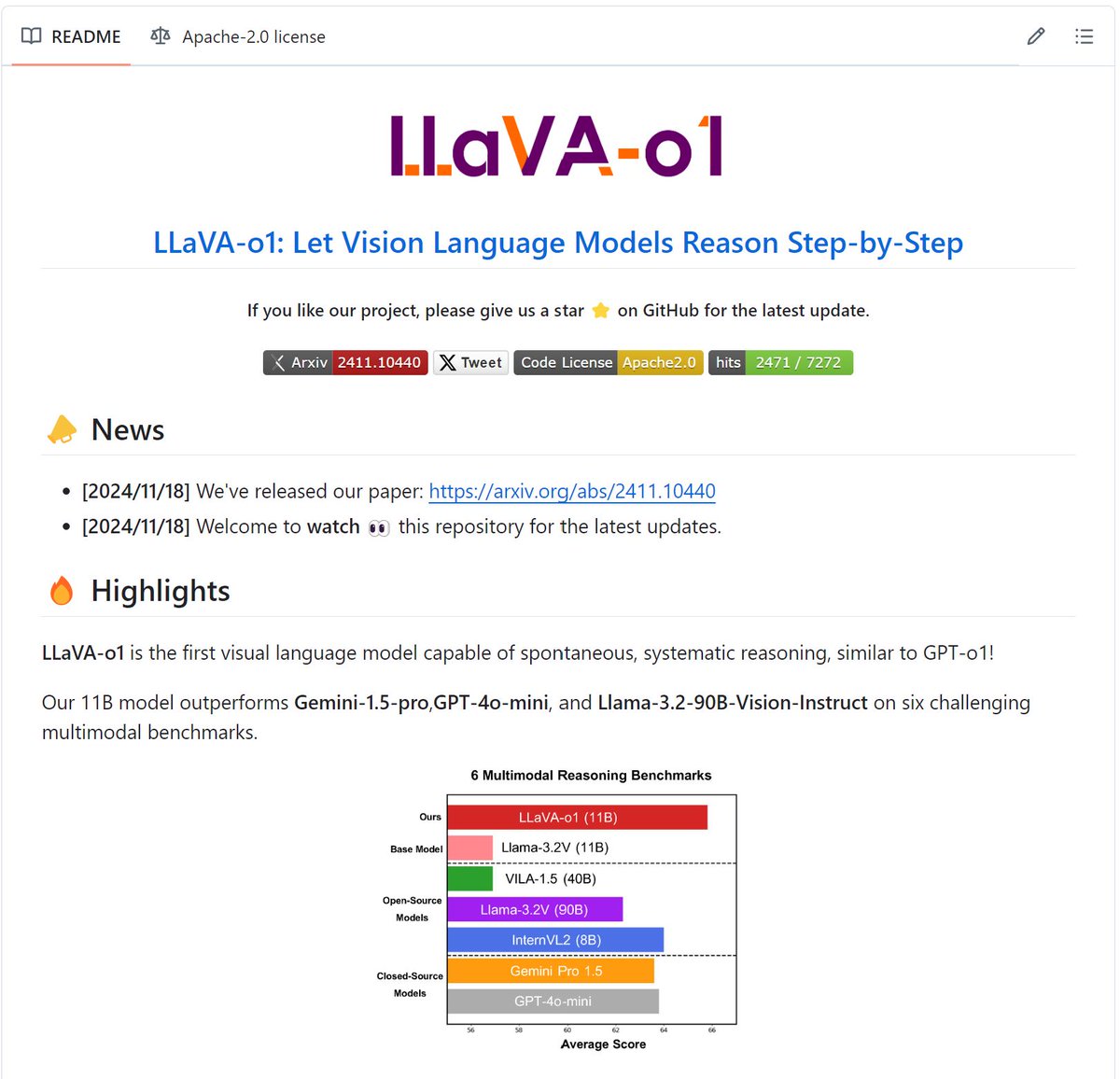

LLaVA-o1 is the first visual language model capable of spontaneous, systematic reasoning, similar to GPT-o1! 🤯 The 11B model outperforms Gemini-1.5-pro, GPT-4o-mini, and Llama-3.2-90B-Vision-Instruct on six multimodal benchmarks.

Transformer by hand ✍️ in Excel ~ I just released my first-ever "Full-Stack" implementation of the Transformer model. 👇 Download the xlsx to give it a try!

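Ultravox v0.4.1, an open-source multimodal real-time speech model whose speech understanding approaches GPT-4o. It directly understands both text and human speech without a separate ASR step, and currently supports text output. Time to first response is 150 ms, and generation speed is about 60 tokens/s. Built on Llama3.1-8B and Whisper. github: github.com/fixie-ai/ultra… @Gradio huggingface.co/spaces/freddya… #AI实时语音…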

The audio LM scene is heating up! 🔥 @FixieAI Ultravox 0.4.1: an 8B model approaching GPT-4o level. Pick any LLM, train an adapter with Whisper as the audio encoder, profit 💥 Bonus: MIT-licensed checkpoints > Pre-trained on Llama3.1-8b/70b backbones as well as the encoder part of…

United States Trends

- 1. Browns: 92K posts
- 2. Jameis: 43.6K posts
- 3. Tomlin: 19.6K posts
- 4. #ThePinkPrintAnniversary: 16.6K posts
- 5. Pickens: 14.5K posts
- 6. #PITvsCLE: 11.4K posts
- 7. #TNFonPrime: 5,400 posts
- 8. Pam Bondi: 229K posts
- 9. Russ: 36.5K posts
- 10. #PinkprintNIKA: 8,493 posts
- 11. #DawgPound: 7,079 posts
- 12. Myles Garrett: 8,779 posts
- 13. Fields: 51.7K posts
- 14. AFC North: 7,680 posts
- 15. Nick Chubb: 5,486 posts
- 16. TJ Watt: 7,564 posts
- 17. Chris Brown: 13.7K posts
- 18. Arthur Smith: 3,018 posts
- 19. Pittsburgh: 16.7K posts
- 20. Njoku: 7,349 posts

Who to follow

- xuhaiyang-mPLUG (@xuhaiya2483846)
- Brainfoster Tech Private Limited (@BrainFosterTech)
- Ahmad Jarif Yeasir (@YeasirAhmad)
- Uzima_empowerment (@Uzimaempower1)
- Rhett Route (@Rhett_Route)
- Daniel Hull (@hulldaniel67)
- Mikasa (@DPedrisco2)
- Akhabue Ehichoya Odianosen (@EngrDrAkhabue)
- Cris.py_AD (@Crispy_Catto_AD)
- Hyojoon (Joon) Kim (@joonkim_cs)
- beeツ (@mimiskookie)
- Jiacen Xu (@JiacenXu)
- BkWireless2 (@BkWireless2)
- XiV(えくしぶ) (@A1lis)
- Bence Halpern (@BenceHalpern)
